Making It Up in Volume: How the AI Infrastructure Boom Echoes the Telco Frenzy of the 90s

From cheap bandwidth to cheap AI: how overinvestment in infrastructure fuels innovation.

In March 1997, Gary Winnick, a former bond trader, launched a new startup called Global Crossing. Channeling the Field of Dreams mantra "Build it, and they will come," Winnick and his team set out to construct a telecommunications network to circle the globe.

Just over a year after its founding, Global Crossing went public in August 1998, raising $399 million in its IPO. Its valuation peaked in 1999 at $47 billion, less than two years after launch.

Yet that blazing trajectory ultimately proved unsustainable. The trillion-dollar telecom infrastructure boom was becoming a speculative bubble detached from reality. And for Global Crossing, the day of reckoning came in the fourth quarter of 2001 when it lost a staggering $3.4 billion on just $793 million in revenue. Within weeks, the company filed for bankruptcy.

Global Crossing's spectacular rise and fall encapsulated the hubris and hope that defined that era—a cautionary tale as we look at today's AI infrastructure boom, where companies are making massive investments eerily reminiscent of the late 90s telco frenzy. In this article, we'll draw parallels between these two eras to examine how such speculative build-outs are a natural part of any transformative technology's adoption lifecycle.

A Digital Manifest Destiny

Global Crossing's demise exposed the mania underlying the dot-com era's infrastructure overbuilding. Yet, in those heady days of the late 1990s, unbridled expansion seemed almost inevitable. Two key forces propelled this telecommunications gold rush mentality.

First, the Telecommunications Act of 1996 removed restrictions that had been put in place after the 1984 breakup of AT&T's monopoly. This deregulation opened the floodgates for new competition.

At the same time, the dot-com boom was taking hold, fueled by rising internet adoption and the rollout of broadband to homes, which boosted speeds from sluggish 56k dial-up modems to blazing-fast, always-on access of up to 1.5 Mbps. As businesses raced to establish an online presence, a growing chorus warned that the internet represented an existential threat to every company. The Economist's 1999 special report "The Net Imperative" led with a bold prediction from Intel CEO Andy Grove that within five years, all companies would be internet companies "or they won't be companies at all."

In this heated environment, WorldCom stoked the mania by claiming that internet traffic was doubling every 100 days, far faster than the actual pace of roughly one doubling per year. But facts were no match for the fever gripping the market. Buoyed by easy money from eager investors, companies like Level 3, Qwest, and the ill-fated Global Crossing raced to build massive internet infrastructure to meet the supposed tidal wave of web traffic growth.
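To get a feel for how far apart those two growth rates are, here is a minimal sketch that converts each doubling period into a yearly multiplier and compounds it. The two doubling periods come from the paragraph above; the five-year horizon is an illustrative assumption, not a figure from any telecom business plan.

```python
# Compare the traffic growth WorldCom claimed with the growth that actually occurred.
# The doubling periods are taken from the text; the horizon is an assumption.

def annual_growth_factor(doubling_period_days: float) -> float:
    """Return how many times traffic multiplies over one 365-day year."""
    return 2 ** (365 / doubling_period_days)

claimed = annual_growth_factor(100)  # "doubling every 100 days"
actual = annual_growth_factor(365)   # doubling once a year

years = 5  # hypothetical planning horizon
print(f"Claimed: {claimed:.1f}x per year, {claimed ** years:,.0f}x over {years} years")
print(f"Actual:  {actual:.1f}x per year, {actual ** years:,.0f}x over {years} years")
```

Doubling every 100 days works out to roughly 12.5 times as much traffic each year. Compounded over five years, that is a more than 300,000-fold increase, against 32-fold for annual doubling. Anyone sizing a network on the first number would build far more capacity than the second could ever fill.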

What followed was one of the biggest overbuilds in history. Companies laid over 80 million miles of fiber-optic cable crisscrossing the United States. The overcapacity was staggering: in late 2005, four years after the dot-com bubble burst, 85% of that fiber was still dark. The glut caused bandwidth costs to plummet by 90% as supply outstripped demand.

The AI Boom: Echoes of the Telecom Revolution

Just as the Telecommunications Act of 1996 kicked off a deregulatory feeding frenzy, a 2020 paper from OpenAI titled "Scaling Laws for Neural Language Models" set off the modern AI gold rush. The researchers found that language models improved predictably as they were scaled up in size, training data, and compute. This discovery ushered in a new era of model-building mania, requiring ever-larger datasets, more computing power, and significant capital.

The money swiftly followed. Venture funding for generative AI startups shot up 5x in 2023 compared to the prior year, topping a staggering $21.8 billion across 426 deals. Thirty-six generative AI companies have already achieved unicorn valuations of more than $1 billion. And that funding total counts only publicly disclosed venture investments; corporations are pouring in billions more on top of it. The vast majority of this capital deluge has gone into AI infrastructure, the digital picks and shovels fueling the model-building frenzy.

This infrastructure build-out is unfolding on two key fronts: tech giants and deep-pocketed corporations racing to train their own proprietary AI models, and a rabble of newer players emerging to host and serve up the ballooning array of open-source models.

Building Proprietary Models: A High-Stakes Gamble

In the race to catch up with OpenAI's groundbreaking GPT-4 models, companies are betting big on training their proprietary models. Some, like Anthropic, are banking on novel approaches like constitutional AI. Others, like Bloomberg with its financial dataset, are wagering that unique training data will give them an edge. However, as recent history has shown, success in this high-stakes game is far from guaranteed.

Take the cautionary tale of Inflection AI. Founded in 2022, the company raised a staggering $1.5 billion in just over a year, clinching a $4 billion valuation. But less than a year later, Inflection is effectively folding, with its founders jumping ship to launch Microsoft's AI division. Then there's Stability.AI, which burned through nearly $100 million in AWS infrastructure costs after raising $100 million in seed funding.

For every high-profile flameout, there are countless more training initiatives quietly fizzling out behind corporate walls. The pitch from GPU salespeople has been seductively simple: train your models on your proprietary data, and you'll leave your competition in the dust. I wrote previously about the launch of BloombergGPT in April 2023. Billed as a groundbreaking tool for financial analysis, the model was touted as outperforming lesser AIs. Yet after millions were sunk into development, BloombergGPT underwhelmed, lagging behind GPT-4 and even ChatGPT on financial tasks. Tellingly, media mentions of the project have been scarce since its debut.

These stories underscore the harsh realities of the AI arms race. Even with war chests in the hundreds of millions or billions, success is not assured. The technical challenges of model training at scale are immense, and the costs can be staggering.

The Shovel Sellers: Serving Open-Source Models

As the AI gold rush gathers steam, a new breed of prospectors has emerged: the shovel sellers. These infrastructure providers are racing to stake their claim in the booming market for serving up language models. With a proliferation of powerful models now freely available, the demand for GPU horsepower to run them is skyrocketing. And a host of companies, from startups to tech giants, are angling to cash in.

The economics of GPU hosting are a far cry from traditional server rentals. A top-of-the-line GPU box can cost 26 times as much as a vanilla server. But thanks to its knack for parallelization, it can crunch far more data in the same timeframe. For hosting providers, the key to recouping these hefty hardware investments lies in maximizing utilization: packing as many requests as possible onto every GPU.
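A rough sketch shows why utilization matters so much. The hourly cost and throughput below are hypothetical placeholders chosen only to make the arithmetic visible; they are not vendor prices or benchmark results, and the function name is my own.

```python
# All numbers below are hypothetical placeholders, not vendor or benchmark figures.

def cost_per_million_tokens(hourly_cost_usd: float,
                            peak_tokens_per_second: float,
                            utilization: float) -> float:
    """Hardware cost of serving one million tokens at a given utilization in (0, 1]."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    tokens_per_hour = peak_tokens_per_second * 3600 * utilization
    return hourly_cost_usd / tokens_per_hour * 1_000_000

HOURLY_COST_USD = 2.00          # assumed all-in cost of running one GPU for an hour
PEAK_TOKENS_PER_SECOND = 2_000  # assumed throughput when the GPU is fully loaded

for utilization in (0.1, 0.5, 0.9):
    cost = cost_per_million_tokens(HOURLY_COST_USD, PEAK_TOKENS_PER_SECOND, utilization)
    print(f"{utilization:.0%} utilized: ${cost:.2f} per 1M tokens")
```

Because the hardware costs the same whether it is busy or idle, the cost per token scales inversely with utilization. Under these assumptions, a GPU that is busy 10% of the time costs nine times as much per token as one that is busy 90% of the time.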

This dynamic is fueling a price war among infrastructure players vying to serve popular open-source models like Mistral. Mistral.AI, the company behind the model, initially priced hosting at $0.65 per million tokens. But now you can use Mistral from various hosting providers for far less. Together.ai ($100M funding) and Perplexity ($70M funding) will run Mistral for $0.20 per million tokens, and Anyscale ($99M funding) will run it for $0.15 per million tokens. The incumbent cloud infrastructure providers are not standing idly by either. Amazon just announced a $150B investment in enabling its data centers for AI.
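The size of those price cuts is easy to quantify. This short sketch uses only the per-million-token prices quoted above; the helper name `discount_vs_list` is mine.

```python
# Per-million-token prices quoted in the paragraph above, in USD.
LIST_PRICE = 0.65  # Mistral's initial hosting price
hosted_prices = {
    "Together.ai": 0.20,
    "Perplexity": 0.20,
    "Anyscale": 0.15,
}

def discount_vs_list(price: float, list_price: float = LIST_PRICE) -> float:
    """Fractional price cut relative to the list price."""
    return (list_price - price) / list_price

for provider, price in hosted_prices.items():
    print(f"{provider}: ${price:.2f} per 1M tokens, {discount_vs_list(price):.0%} below list")
```

The third-party hosts undercut the original price by roughly 69% at $0.20 and 77% at $0.15.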

The AI Infrastructure Overbuild: Paving the Way for Tomorrow

As we've seen, the current AI boom bears a striking resemblance to the telecom boom of the late 1990s. Just as the telecom industry anticipated the coming explosion of internet traffic and invested heavily in infrastructure, today's tech giants and startups are pouring billions into building the foundation for the AI-powered future.

The clearest evidence of this investment frenzy can be seen in the skyrocketing revenues of Nvidia, whose powerful GPUs have become the de facto standard for AI computing. In just one year, Nvidia's revenue has more than doubled, reaching $61 billion. Sequoia Capital estimates that in its first year, the generative AI industry has already produced $3 billion in revenue, but that figure pales compared to the investments being made to fuel future growth.

But will this boom end in a bust, like the telecom frenzy did? Only time will tell. However, regardless of the short-term outcomes for individual companies, this massive investment in AI infrastructure is a natural and necessary part of the technology lifecycle.

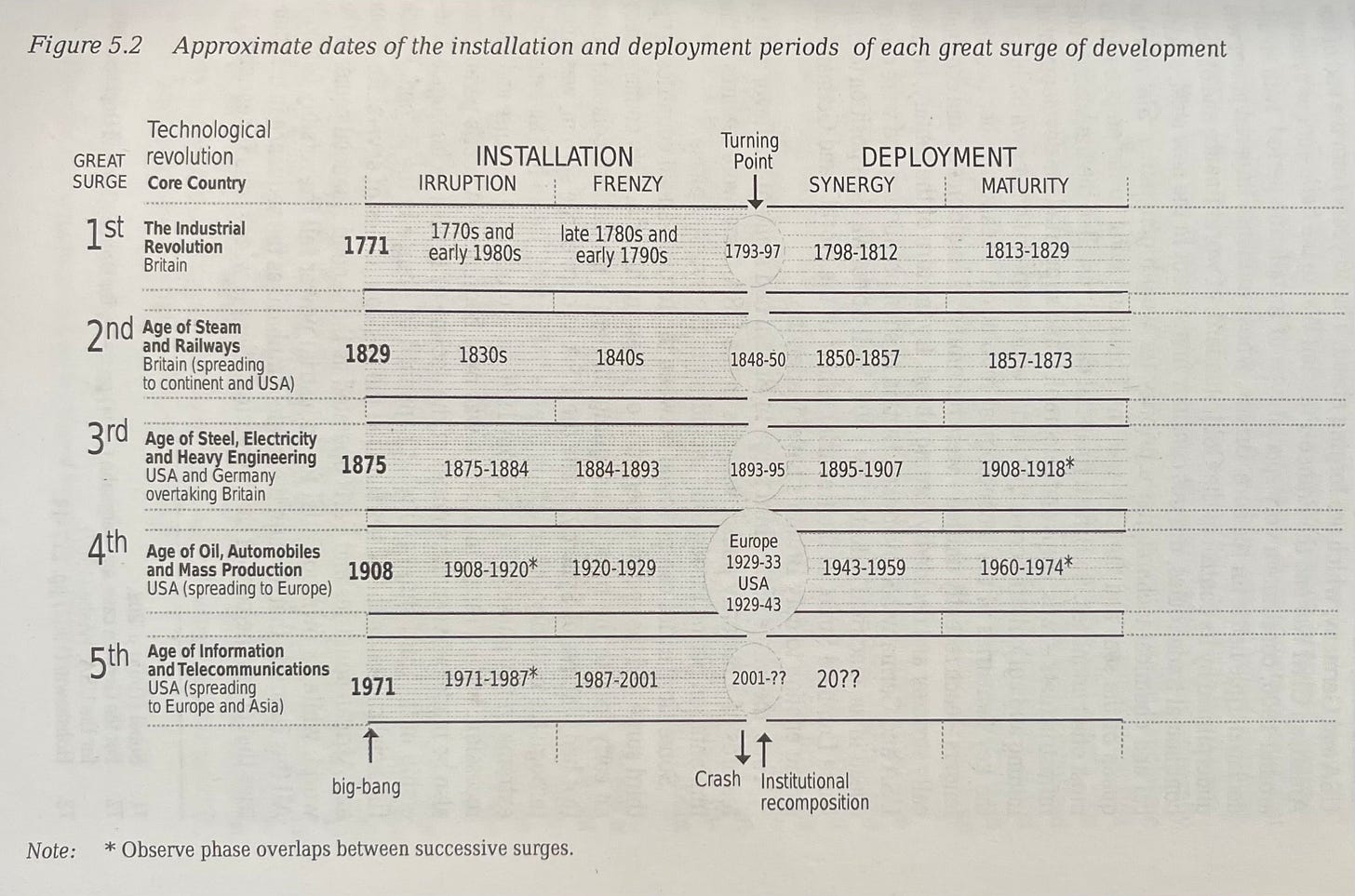

As economist Carlota Perez explains in her book "Technological Revolutions and Financial Capital," there is often a gap between the installation phase of a new technology, when infrastructure is built out, and the deployment phase, when the technology becomes widely adopted and integrated into society. This gap is typically marked by the bursting of the speculative bubble that formed around the new technology during the installation phase.

We saw this play out in the early 2000s, as the dot-com bubble burst and many telecom companies went bankrupt. But the overinvestment in broadband infrastructure during the boom years had a silver lining: it made bandwidth cheap and abundant. This, in turn, enabled the rise of new, innovative businesses that would have been impossible just a few years earlier.

Take YouTube, for example. When Chad Hurley, Steve Chen, and Jawed Karim founded the company in 2005, the idea of hosting and streaming user-generated videos for free seemed crazy. Video was notoriously expensive to serve, and most experts believed no business could afford to shoulder that cost. But thanks to the glut of cheap bandwidth created by the telecom overbuild, YouTube was able to turn that crazy idea into a reality. Just a year after YouTube's launch, Google acquired the company for $1.65 billion. In 2023, YouTube generated $31 billion in revenue for its parent company, Alphabet.

The AI boom may well follow a similar trajectory. Some of today's infrastructure companies may falter, but the investments they're making now could lay the groundwork for a new generation of AI-powered businesses. The excess GPU capacity created by the current frenzy—which may end up being the Aeron chairs of this era—could make running large language models cheap enough to enable applications we can't even imagine today.

Just as the fiber optic cables laid by companies like Global Crossing in the late 1990s now carry data across the globe, enabling everything from video streaming to telemedicine, the AI infrastructure being built today could become the foundation for transformative technologies that reshape our world in ways we can hardly fathom. As we've learned from past technological revolutions, sometimes you have to overbuild to lay the groundwork for the future.