False Confidence: How Our Intolerance for Uncertainty Encouraged AI Hallucinations

How we accidentally taught AI to sound certain when it should say 'I don't know'

AI hallucinations aren't new. Since the earliest language models, we've known that AI systems sometimes generate confident-sounding responses that are completely wrong. Every AI interface comes with warnings: "AI can make mistakes. Please verify important information."

For years, we accepted this as an inevitable trade-off—the price of having helpful, creative AI systems that would rather give you an answer than leave you hanging.

But new research from OpenAI reveals something more troubling: we've accidentally taught models to sound confident when they should express doubt.

In September 2025, OpenAI published a research paper showing that our training methods systematically reward overconfidence. Through reinforcement learning from human feedback, we've built systems that guess rather than admit what they don't know.

The researchers found that some hallucinations are mathematically inevitable, and that we then made the problem worse by teaching AI to guess confidently rather than admit uncertainty.

The solution isn't more sophisticated algorithms or bigger datasets. It's three simple words: "I don't know." We have to teach models to say them, and as users, we have to listen.

But getting there requires understanding how we accidentally created the confidence trap in the first place.

Some Hallucinations Are Mathematically Inevitable

During pre-training, AI models learn by processing massive amounts of text—billions of web pages, books, and articles. But not all facts appear equally often in training data. Popular information gets repeated thousands of times. Obscure facts might appear only once or twice.

When a fact appears only once, the model has no way to distinguish the correct information from all the other possibilities.

The OpenAI researchers proved this mathematically: if 20% of the facts of a given kind (say, people's birthdays) appear only once in the training data, then a model coming out of pre-training can be expected to hallucinate on at least 20% of questions about that kind of fact.
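The quantity behind that bound is the share of distinct facts that show up exactly once, often called the singleton rate. The sketch below computes it for a tiny hypothetical corpus in which each list entry is one mention of a birthday fact. The corpus and the `singleton_rate` helper are illustrative assumptions, and the published result states the bound more carefully than this intuition does.

```python
from collections import Counter


def singleton_rate(mentions):
    """Return the fraction of distinct facts that appear exactly once in `mentions`."""
    counts = Counter(mentions)
    if not counts:
        return 0.0
    singletons = sum(1 for n in counts.values() if n == 1)
    return singletons / len(counts)


# Hypothetical toy corpus: each tuple is one mention of a (person, birthday) fact.
# Famous birthdays are repeated many times; one obscure fact appears only once.
corpus = (
    [("Ada Lovelace", "December 10")] * 5000
    + [("Alan Turing", "June 23")] * 3000
    + [("Marie Curie", "November 7")] * 2000
    + [("Grace Hopper", "December 9")] * 800
    + [("Obscure Person", "March 3")]  # hypothetical singleton fact
)

rate = singleton_rate(corpus)
print(f"Singleton rate: {rate:.0%}")  # 1 of 5 distinct facts
```

However many times the famous birthdays are repeated, the one fact seen only once leaves the model with a single example to learn from, and the bound's intuition puts the error floor for this toy profile at roughly one question in five.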

This creates what researchers call inevitable hallucinations—errors that emerge from the mathematical reality of learning rare facts from sparse examples.

The System That Rewards Overconfidence

In theory, a model's error rate should stay close to this floor of inevitable hallucinations. But that's not how current AI systems behave.

When OpenAI tested their models on factual questions using the SimpleQA evaluation, the results revealed a large gap between theory and practice. Their older o4-mini model had a 75% error rate on these difficult questions, far worse than the inevitable hallucination rate would predict.

The culprit? How we train models to be helpful through Reinforcement Learning from Human Feedback (RLHF).

Here's how RLHF works: after initial pre-training, human evaluators rate different AI responses to the same question. The system learns to generate responses that humans rate more highly.

But humans have a bias. When evaluating responses during RLHF training, we consistently rate confident-sounding answers higher than uncertain ones, even when the confident answers are wrong.
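To see how that bias becomes a training signal, here is a minimal sketch assuming a Bradley-Terry preference model, a common choice for RLHF reward models, in which the probability that raters prefer response A over response B is the sigmoid of their reward difference. The 700-to-300 split between a confident wrong answer and an honest "I don't know" is hypothetical, as is the `fit_reward_gap` helper.

```python
import math


def fit_reward_gap(wins_a, wins_b, steps=1000, lr=1.0):
    """Fit r_a - r_b under a Bradley-Terry model, P(A preferred) = sigmoid(r_a - r_b),
    by gradient ascent on the average log-likelihood of the observed comparisons."""
    total = wins_a + wins_b
    gap = 0.0
    for _ in range(steps):
        p_a = 1.0 / (1.0 + math.exp(-gap))
        # Gradient of the average log-likelihood with respect to the gap.
        grad = wins_a / total - p_a
        gap += lr * grad
    return gap


# Hypothetical ratings: 1,000 side-by-side comparisons of a confident but wrong
# answer (A) against an honest "I don't know" (B). Raters picked A 700 times.
# Correctness never enters the objective; only the preference counts do.
gap = fit_reward_gap(wins_a=700, wins_b=300)
print(f"Learned reward advantage for confident-but-wrong: {gap:.3f}")
print(f"Closed-form check, log(700/300): {math.log(700 / 300):.3f}")
```

The fitted reward only encodes which answer raters liked. A model optimized against it is pushed toward the confident style whether or not the content is true.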

This creates a problematic feedback loop. AI systems learn that confident responses get better ratings, regardless of accuracy. Models discover that users don't want to hear "I don't know"—so they respond with confident guesses, even when those guesses are wrong.

OpenAI's o4-mini model had an abstention rate of only 1% on SimpleQA. Human feedback had taught the model to answer confidently when it should have said "I don't know."

The problem runs deeper than individual model training. Our entire evaluation ecosystem works the same way. Benchmark tests typically score responses as simply right or wrong, with no incentive to express uncertainty or abstain.

This is exactly the problem college professors solved decades ago.

The Solution College Professors Learned

Every student has learned the trick of guessing on a multiple-choice test. A right answer gets you 1 point, a wrong answer gets you 0 points, and a blank gets you 0 points. Why not guess when you're not sure?

Educators fixed this with a simple change: penalty scoring.

The SAT used this approach for nearly 90 years: +1 point for correct answers, -0.25 points for wrong answers. Guessing costs you. Indian competitive exams like JEE and NEET still use this system: +4 points for correct, -1 point for wrong. Under both rules, students need to be more than 20% confident before it makes mathematical sense to guess.
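The break-even point follows from expected value: answering with confidence p earns p * reward - (1 - p) * penalty, while a blank earns 0, so guessing pays only when p exceeds penalty / (reward + penalty). The helper names below are hypothetical; `penalty_for_threshold` simply inverts that formula to choose a penalty for a desired confidence threshold.

```python
def expected_score(p_correct, reward, penalty):
    """Expected points from answering when the answer is right with probability p_correct.
    Leaving the question blank always scores 0."""
    return p_correct * reward - (1 - p_correct) * penalty


def break_even_confidence(reward, penalty):
    """Confidence above which answering beats leaving the question blank."""
    return penalty / (reward + penalty)


def penalty_for_threshold(t, reward=1.0):
    """Wrong-answer penalty that makes answering worthwhile only above confidence t (0 <= t < 1)."""
    return reward * t / (1 - t)


rules = {
    "Binary (+1 / 0)": (1.0, 0.0),
    "Old SAT (+1 / -0.25)": (1.0, 0.25),
    "JEE and NEET (+4 / -1)": (4.0, 1.0),
}

for name, (reward, penalty) in rules.items():
    threshold = break_even_confidence(reward, penalty)
    print(f"{name}: answer only if confidence > {threshold:.0%}")

# Under binary scoring even a 10%-confident guess beats a blank;
# under the old SAT rule the same guess loses points on average.
print(expected_score(0.10, 1.0, 0.0), expected_score(0.10, 1.0, 0.25))

# To make guessing pay only above 75% confidence, a wrong answer must cost 3 points.
print(penalty_for_threshold(0.75))
```

Both exam rules put the break-even point at 20%. On the old SAT's five-option questions, that exactly cancels out blind guessing; binary scoring, by contrast, puts the threshold at 0%, so any guess is worth making.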

When OpenAI trained GPT-5, it used penalty scoring—and the results were striking.

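The older o4-mini model abstained only 1% of the time and had a 75% error rate; the newer model (gpt-5-thinking-mini) abstained 52% of the time and had a 26% error rate. Accuracy barely moved, from 24% to 22% (what remains after abstentions and errors), so nearly all of the drop in errors came from declining to guess rather than from knowing more. While these are different model architectures, the pattern is promising. The same mathematical principle that makes students cautious can make AI systems honest.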

But there's a coordination problem. If one company builds humble models that say "I don't know," they'll score lower on traditional benchmarks that reward confident answers. A model that abstains from difficult questions gets the same score as one that confidently gives wrong answers. Competitors optimizing for benchmark performance will appear more capable, even if they're less trustworthy.
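Plugging in the SimpleQA figures quoted above shows the coordination problem concretely. This sketch assumes every response is exactly one of correct, wrong, or abstained, so accuracy is 100 minus the abstention and error rates. The 0.25-point penalty is borrowed from the old SAT rule purely for illustration and is not taken from any benchmark.

```python
# SimpleQA rates in percent, as quoted above: (abstention, error).
# Accuracy is assumed to be whatever remains: 100 - abstention - error.
results = {
    "o4-mini": (1, 75),
    "newer GPT-5 model": (52, 26),
}


def benchmark_score(abstention, error, wrong_penalty):
    """Points per 100 questions: +1 per correct answer, -wrong_penalty per wrong one,
    0 per abstention."""
    accuracy = 100 - abstention - error
    return accuracy - wrong_penalty * error


for penalty in (0.0, 0.25):
    print(f"Penalty per wrong answer: {penalty}")
    ranked = sorted(results, key=lambda m: benchmark_score(*results[m], penalty), reverse=True)
    for model in ranked:
        print(f"  {model}: {benchmark_score(*results[model], penalty):.2f}")
```

With plain right-or-wrong scoring, o4-mini comes out ahead (24 versus 22) despite being wrong nearly three times as often. With even a modest penalty, the ranking flips (15.50 versus 5.25).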

For this to work, we as users need to want to hear "I don't know."

Yet as a society, we prefer certainty. We reward confidence in leaders, CEOs, and experts. Equivocating politicians lose elections. Uncertain CEOs get fired. We're naturally drawn to strong answers, not careful qualifications.

This creates what seems like an impossible problem. But there's one domain where we've successfully learned to work with uncertainty: weather forecasting.

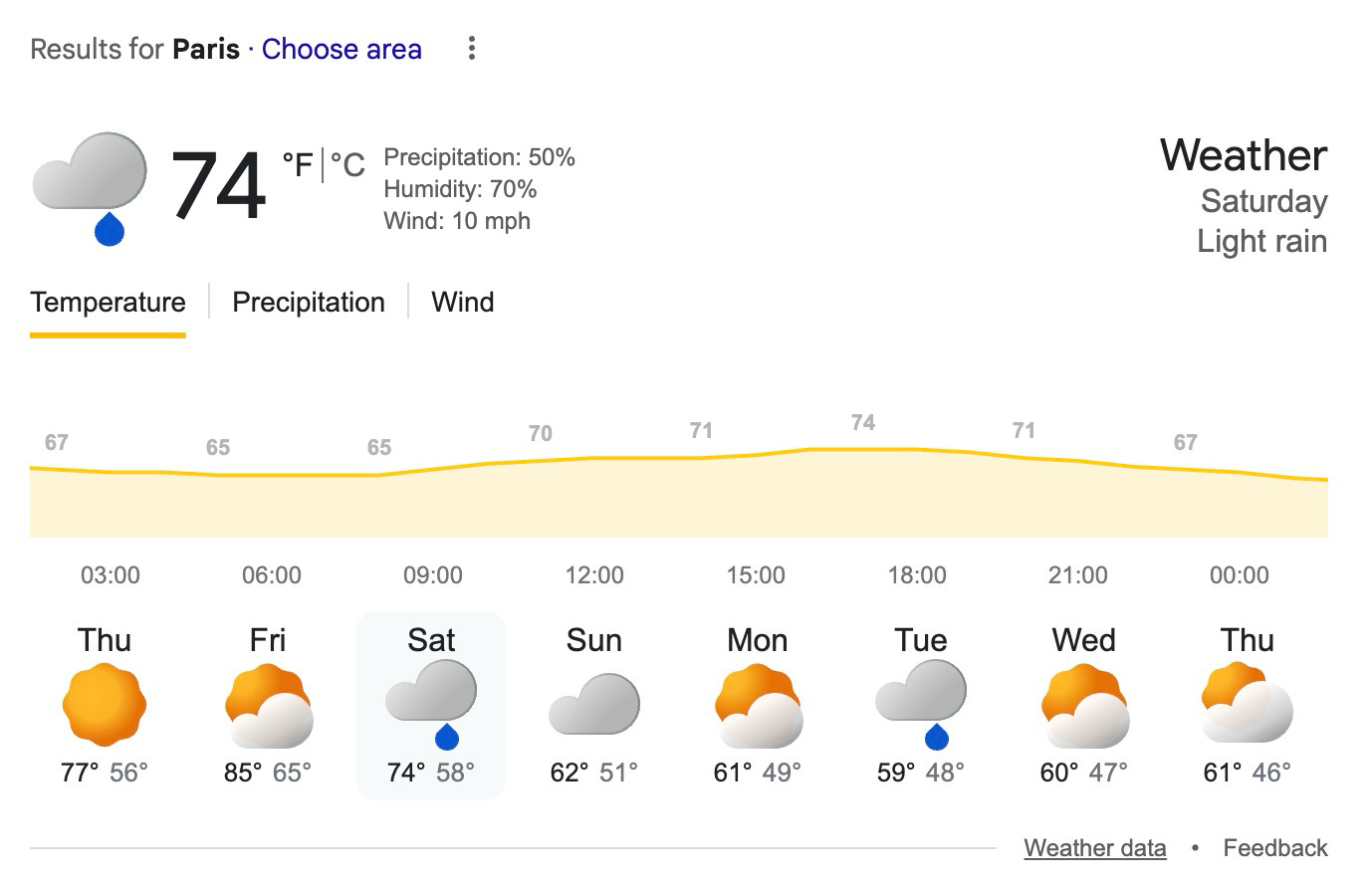

Learning to Work with Uncertainty

Weather forecasting distills an enormous number of variables into an answer to a simple question: "Will it rain tomorrow?"

But meteorologists learned there's no honest answer without probability. Weather apps don't pretend to know whether it will rain; they tell you there's a 30% chance. The interface builds uncertainty into the communication itself.
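A 30% forecast is honest only if it is calibrated: across all the days the app says 30%, it should rain on roughly 30% of them. The sketch below checks that with a hypothetical twenty-day record. The data and the `calibration_table` helper are illustrative assumptions, and the same check applies to any system that attaches probabilities to its answers.

```python
from collections import defaultdict


def calibration_table(forecasts, outcomes):
    """Group forecasts by stated probability (rounded to 0.1) and report
    how often the event actually happened in each group."""
    buckets = defaultdict(list)
    for prob, happened in zip(forecasts, outcomes):
        buckets[round(prob, 1)].append(happened)
    return {prob: sum(hits) / len(hits) for prob, hits in sorted(buckets.items())}


# Hypothetical record: ten days forecast at 30% rain, ten days at 80%.
forecasts = [0.3] * 10 + [0.8] * 10
outcomes = [True] * 3 + [False] * 7 + [True] * 8 + [False] * 2

for stated, observed in calibration_table(forecasts, outcomes).items():
    print(f"Forecast {stated:.0%} -> rained on {observed:.0%} of those days")
```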

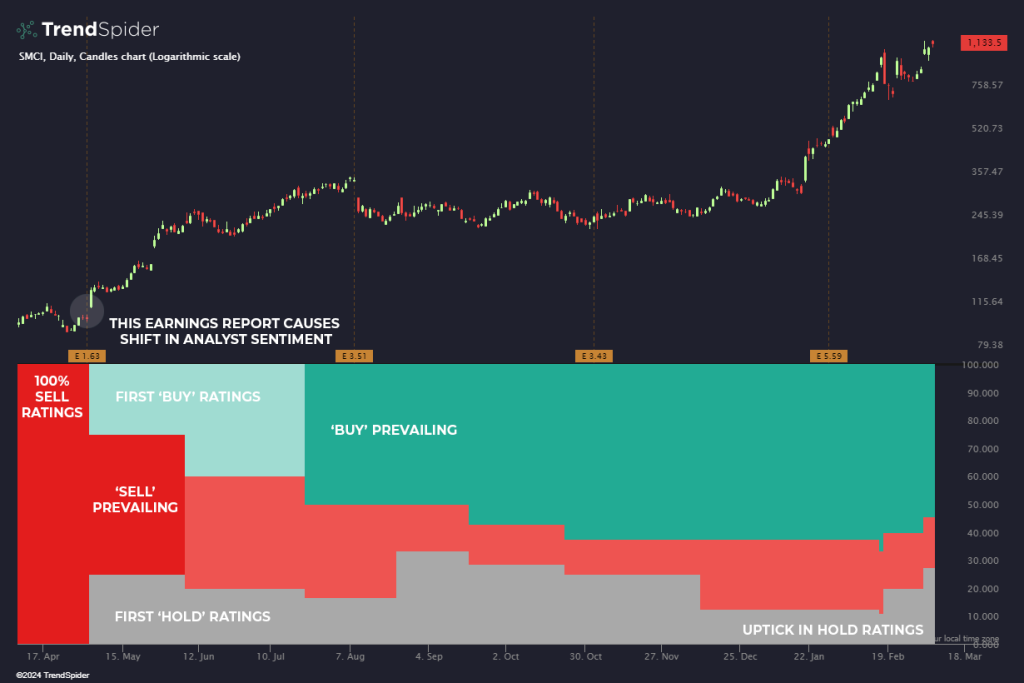

Weather might be the most ubiquitous example, but we've designed uncertainty into interfaces across domains where being wrong has consequences. Financial apps show analyst consensus—"Buy: 22 | Hold: 6 | Sell: 2"—rather than pretending there's agreement. Sports betting displays probabilities, not predictions. Even review aggregators like Metacritic separate critic scores from audience scores, acknowledging that different perspectives matter. In each case, the interface doesn't hide disagreement—it makes uncertainty useful.

AI interfaces could work the same way. Instead of confident-sounding responses that hide uncertainty, we could show confidence levels for different parts of an answer. Visual cues could indicate when the AI is guessing versus when it's drawing from well-established information.
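Here is one way such cues might be wired up, as a minimal sketch. It assumes the model can attach a probability of being correct to each claim in an answer, which many systems do not expose today; the thresholds, labels, and example claims are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Claim:
    text: str
    confidence: float  # hypothetical: estimated probability the claim is correct


def cue(confidence, abstain_below=0.2):
    """Map a confidence score to a display label (thresholds are illustrative)."""
    if confidence < abstain_below:
        return "I don't know"
    if confidence >= 0.9:
        return "well established"
    if confidence >= 0.6:
        return "likely"
    return "guessing"


def render(claims, abstain_below=0.2):
    """Annotate each claim with its cue; withhold claims below the abstention threshold."""
    lines = []
    for claim in claims:
        label = cue(claim.confidence, abstain_below)
        if label == "I don't know":
            lines.append("[I don't know] This part of the answer is withheld.")
        else:
            lines.append(f"[{label}, {claim.confidence:.0%}] {claim.text}")
    return "\n".join(lines)


answer = [
    Claim("Paris is the capital of France.", 0.99),
    Claim("The town's population is roughly 12,000.", 0.55),
    Claim("The current mayor was born on March 3.", 0.08),
]
print(render(answer))
```

Tying `abstain_below` to the same confidence threshold used in penalty scoring is one design choice that would keep what the interface shows consistent with how the model was graded.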

The path forward requires both better training methods and interfaces that make uncertainty useful rather than frustrating. We need penalty scoring in training and probability displays in interfaces. We need to reward models for saying "I don't know" and design systems that help users act on that uncertainty.

Until then, I'll pack my umbrella and double-check what AI says.
