The Transmogrifier of AI: The Transformer's Power to Reshape Digital Data

Calvin and Hobbes had the transmogrifier; AI has the transformer—discover how this magical technology behind ChatGPT and DALL-E is reshaping industries from robotics to forecasting.

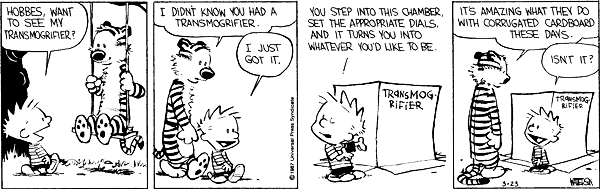

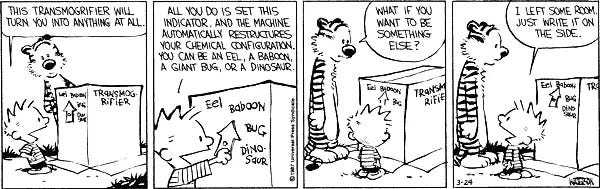

As a child, I found myself lost in the comic strip adventures of a mischievous six-year-old boy named Calvin and his imaginary tiger friend, Hobbes. With a vivid imagination and sarcastic wit, Calvin navigates the challenges of childhood, transforming everyday moments into epic tales filled with wonder, play, and life lessons. Among the many creations born from Calvin's boundless creativity is the transmogrifier, a magical device that holds the power to transform one object into another. To Calvin, the transmogrifier is not merely a plaything; it is a gateway to endless possibilities, a tool that can reshape the world according to his whims.

Although Calvin's transmogrifier is nothing more than a repurposed cardboard box, its concept bears a striking resemblance to a technology in the AI world: the transformer. Just as the transmogrifier can change an object, transformers have the power to morph data from one form to another. While language models have brought transformers into the spotlight, their potential extends far beyond text, promising to transform fields from forecasting to robotics.

In this article, we will explore the world of transformers, tracing their evolution from their beginnings in natural language processing to their current applications across domains.

The Transformer: Unveiling the Magic Behind Language Models

Like many great adventures, the journey of transformers began over lunch. In 2016, Googlers Jakob Uszkoreit and Illia Polosukhin were discussing the limitations of using the latest machine learning models on Google.com. The state-of-the-art models at the time worked sequentially and weren't fast enough to power Google Search. Uszkoreit, who had been working on a new approach, suggested his "self-attention approach."

This conversation sparked a new project in which eight Googlers designed a novel architecture called the Transformer. In May 2017, they submitted their idea to the NIPS conference as a paper titled "Attention Is All You Need."

Transformers don't work directly with text. Instead, all input is first converted into tokens, and each token is represented by a number. Typically, a token stands for a word or a subword; for English text, a common rule of thumb is roughly four characters per token.

For example, the word "boxcars" might be mapped to the following numeric tokens: [2478, 8748, 12]
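To make the idea concrete, here is a minimal sketch of how a word could be broken into subword tokens. It uses a simple greedy longest-match rule and a made-up vocabulary (`TOY_VOCAB`), both of which are assumptions for illustration. Production tokenizers typically learn their vocabularies from data with methods such as byte-pair encoding, and the IDs below only mirror the illustrative ones above.

```python
# Hypothetical toy vocabulary; the IDs do not come from any real tokenizer.
TOY_VOCAB = {
    "box": 2478, "car": 8748, "s": 12,
    "b": 1, "o": 2, "x": 3, "c": 4, "a": 5, "r": 6,
}


def tokenize(word: str, vocab: dict[str, int]) -> list[int]:
    """Greedy longest-match tokenization: take the longest known piece at each step."""
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest candidate first, shrinking until a piece is in the vocabulary.
        for j in range(len(word), i, -1):
            piece = word[i:j]
            if piece in vocab:
                tokens.append(vocab[piece])
                i = j
                break
        else:
            raise ValueError(f"no vocabulary entry covers {word[i]!r}")
    return tokens


print(tokenize("boxcars", TOY_VOCAB))  # [2478, 8748, 12]
```

Here "boxcars" splits into "box", "car", and "s", so seven characters become three numbers. The model only ever sees those numbers.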

Based on their training, Transformers predict the next token in a sequence, considering the context of the previous tokens. What makes them special is their "self-attention" mechanism, which lets the model weigh every earlier token in the sequence, including the ones it has just generated, and use that information to guide its next prediction.
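For readers who want to see the mechanics, the sketch below shows a stripped-down version of self-attention in plain Python. It is a simplification under stated assumptions: the token vectors are made up, and the queries, keys, and values are the input vectors themselves, whereas a real transformer first passes them through learned projections and runs many attention heads in parallel.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_self_attention(vectors: list[list[float]]) -> list[list[float]]:
    """Scaled dot-product self-attention where token t only sees tokens 0..t.

    Simplification: queries, keys and values are the input vectors themselves.
    """
    dim = len(vectors[0])
    outputs = []
    for t, query in enumerate(vectors):
        visible = vectors[: t + 1]  # causal mask: no peeking at later tokens
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in visible
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the visible token vectors.
        mixed = [
            sum(w * v[d] for w, v in zip(weights, visible))
            for d in range(dim)
        ]
        outputs.append(mixed)
    return outputs


# Three made-up 2-dimensional token vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in causal_self_attention(tokens):
    print([round(x, 3) for x in row])
```

The first token can only attend to itself, so its output is unchanged. Each later token becomes a blend of everything that came before it, weighted by similarity.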

If you've ever played "The Sentence Game," you've done what a transformer does. In this game, each person contributes one word at a time, trying to continue the story coherently. Gary Marcus, an AI researcher, argues that's all LLMs are: "autocomplete on steroids." A 2021 paper labeled these early language models built on transformers "stochastic parrots."

However, Google saw value in transformers and gradually integrated them into various products. In 2018, it introduced BERT (Bidirectional Encoder Representations from Transformers), which it went on to use to improve its understanding of search queries. Transformers also found their way into Google's machine translation services, enhancing translations.

As Google continued its measured rollout of Transformers, a research group called OpenAI, located just up the road in San Francisco, took a different approach. Ilya Sutskever, a former Googler and co-founder of OpenAI, found transformers fascinating and saw immense potential in scaling them up. He explored the possibilities of using unsupervised training to create large-scale language models based on the transformer architecture. OpenAI embarked on a journey to build with transformers. Their first attempt, a Generative Pre-trained Transformer imaginatively labeled GPT-1, would soon set the stage for a series of breakthroughs that would captivate the AI community and ignite a race.

Beyond Language: The Multimodal Capability of Transformers

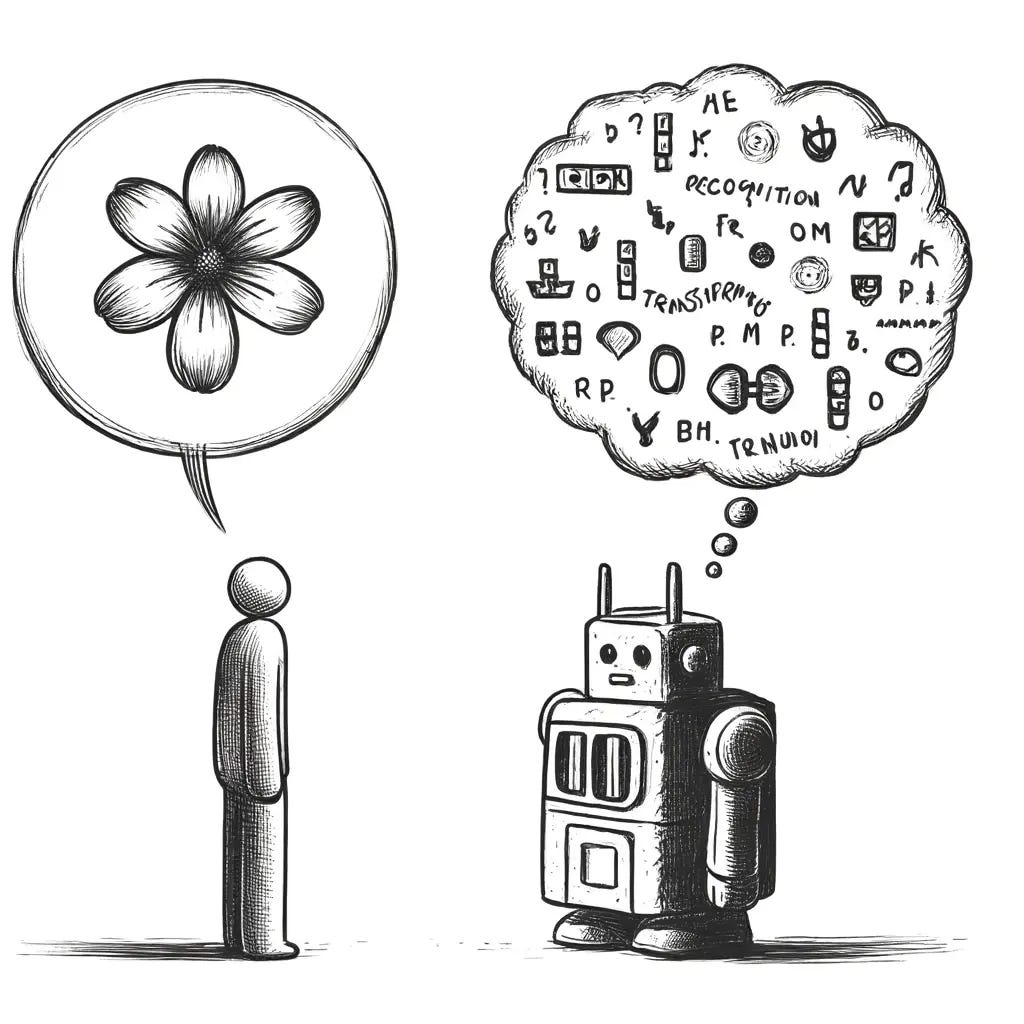

While transformers have gained widespread recognition through their success in large language models (LLMs), it's crucial to understand that their potential extends far beyond text and languages. At their core, transformers don't operate directly on text; instead, they work with tokens, which are just numbers. This means they can work with any kind of data that can be converted into tokens.

Image data, for instance, can be tokenized using techniques like patch embedding, where an image is divided into a grid of patches, and each patch is converted into a token. Models like DALL-E, which generates images from textual descriptions, build on a similar idea of representing images as sequences of tokens. By training on text-image pairs from the internet, DALL-E learns to associate visual features with corresponding words or phrases.
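The patch step is simple enough to sketch in a few lines. The example below is an illustration only: it splits a tiny made-up grayscale image into non-overlapping patches and flattens each one into a vector. The function name `image_to_patches` is hypothetical, and in a real model a learned projection would then turn each vector into a token embedding.

```python
def image_to_patches(image: list[list[int]], patch_size: int) -> list[list[int]]:
    """Split a 2-D grayscale image into non-overlapping square patches.

    Each patch is flattened row by row into one vector.
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [
                image[row][col]
                for row in range(top, top + patch_size)
                for col in range(left, left + patch_size)
            ]
            patches.append(patch)
    return patches


# A made-up 4x4 image whose pixel values are simply 0..15.
image = [[row * 4 + col for col in range(4)] for row in range(4)]
for patch in image_to_patches(image, patch_size=2):
    print(patch)
```

A 4x4 image with 2x2 patches yields four vectors of four values each, read left to right and top to bottom, which the model can treat as a short sequence just like a sentence.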

For example, if DALL-E has been trained on images of railcars paired with the caption 'boxcar', it begins associating the tokens representing the railcar images with the tokens representing the word 'boxcar'.

The model doesn't perceive the complete image of a train or the text "boxcar"; instead, it processes numerical tokens that abstractly represent different aspects or parts of the image and text.

It turns out that is good enough. Models that process these numerical representations (tokens) of images now match or beat humans on some object recognition benchmarks.

That's great, but object recognition sounds like a gig on Mechanical Turk. What if we specialized?

Imagine if the training data consisted of detailed time series data of a stock's movement alongside the news for the past week. The transformer model might spot patterns between these seemingly abstract tokens that we can't see. Or what if we trained the model to output tokens corresponding to actions? We'll look at projects exploring this in the next section.

Transformers Bridging the Gap Across Modalities

Specialized models like ChatGPT for text, DALL-E for images, and Whisper for audio have already demonstrated human-level capabilities in their respective domains. But in the real world, humans have to act across modalities. We instinctively jump out of the way when we hear a car horn, and we can easily figure out what to do when someone asks, "Can I have some water?"

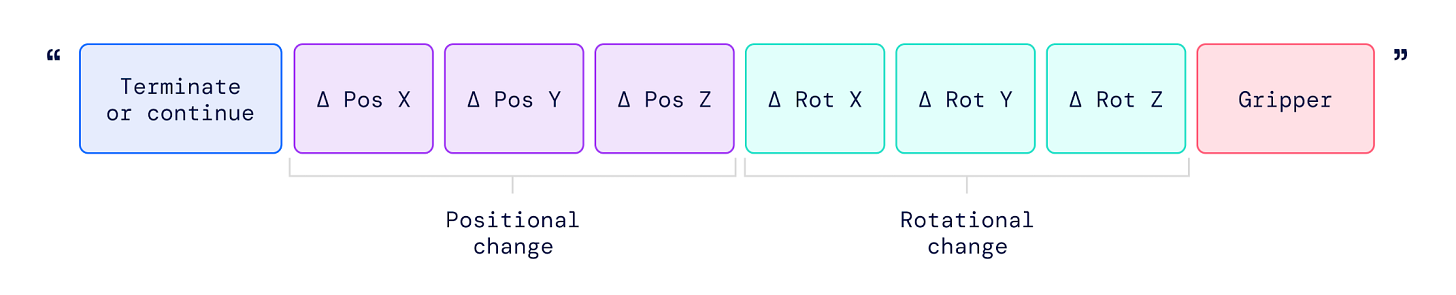

Researchers are now exploring ways to train models that can understand and combine tokens from various modalities. Google DeepMind's Robotic Transformer 2 (RT-2) is a prime example of this effort. RT-2 is designed to take in both images and natural language instructions, allowing it to interpret and respond to user commands by performing rudimentary reasoning. The researchers found that RT-2 shows generalization capabilities beyond the robotic data it was exposed to.

But for robots to interact with the world, they need to do more than just sense their surroundings; they must take action. To address this, researchers trained RT-2 to output specific tokens corresponding to actions the robot can take. This enables the model to bridge the gap between perception and action, bringing us one step closer to creating robots that could one day fold our laundry.
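One way to picture "action tokens" is to chop each continuous motor command into a fixed number of bins and treat the bin index as the token. The sketch below illustrates that general idea and is not RT-2's actual code; the bin count, value range, and function names are all assumptions chosen for the example.

```python
NUM_BINS = 256  # assumed bin count for this sketch


def action_to_token(value: float, low: float, high: float, num_bins: int = NUM_BINS) -> int:
    """Map a continuous action value in [low, high] to an integer token in [0, num_bins - 1]."""
    clipped = min(max(value, low), high)  # keep out-of-range commands inside the limits
    fraction = (clipped - low) / (high - low)
    return min(int(fraction * num_bins), num_bins - 1)


def token_to_action(token: int, low: float, high: float, num_bins: int = NUM_BINS) -> float:
    """Map a token back to the centre of its bin."""
    return low + (token + 0.5) * (high - low) / num_bins


# Hypothetical command: move the gripper 0.25 units along an axis limited to [-1, 1].
token = action_to_token(0.25, low=-1.0, high=1.0)
print(token, token_to_action(token, low=-1.0, high=1.0))
```

The round trip loses a little precision, at most half a bin width, but it lets the same model that emits word tokens also emit motor commands.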

Another exciting application of transformers beyond language is time series forecasting. TimeGPT, a project focused on using transformers for time series data, aims to predict future values based on historical patterns.
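To give a feel for how a time series becomes training data for a sequence model, here is a generic sliding-window sketch. It is not TimeGPT's method or API; the function name `make_windows`, the window sizes, and the sales figures are all made up for illustration.

```python
def make_windows(
    series: list[float], context: int, horizon: int
) -> list[tuple[list[float], list[float]]]:
    """Slice a series into (history, future) training pairs."""
    pairs = []
    for start in range(len(series) - context - horizon + 1):
        history = series[start : start + context]
        future = series[start + context : start + context + horizon]
        pairs.append((history, future))
    return pairs


# Made-up daily values.
sales = [10.0, 12.0, 13.0, 15.0, 18.0, 21.0]
for history, future in make_windows(sales, context=3, horizon=1):
    print(history, "->", future)
```

Each pair plays the same role as a sentence and its next word: the model sees the history and learns to predict what comes after it.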

In a typical company, knowledge work involves processing, evaluating, and acting on various types of documents, such as PDFs, tables, and infographics. Adept.AI, a company co-founded by former Google researchers, is building models that can understand and interact with complex documents. By training these models to navigate and take action in common workplace software and applications, Adept.AI is paving the way for transformers to automate and streamline knowledge work.

As we explore transformers' potential, it's hard not to draw parallels to Calvin's magical transmogrifier. Just as the transmogrifier can transform any object into another, transformers have the power to change the way we process and generate digital data across various modalities. From language and vision to robotics and time series forecasting, transformers are proving to be versatile and powerful tools.

In one memorable scene from Calvin and Hobbes, Calvin tells Hobbes that if he needed to add more types of transformations, there is space on the cardboard box to write them in. This statement encapsulates the boundless possibilities that transformers hold. As researchers and engineers continue to push the boundaries of what transformers can do, they are essentially filling in that empty space on the metaphorical cardboard box, unlocking new applications and capabilities that were once thought impossible.