The Opposable Thumb Moment: How Tool Use is Changing AI

The evolutionary leap that's turning knowledge into action, from early humans to modern AI

About 2.6 million years ago in East Africa, an early human picked up two rocks. With one stone cradled in their palm, stabilized by a partially opposable thumb, they struck it precisely with the other.

Flakes of stone fell away, revealing a sharp edge: a cutting tool that instantly multiplied their capabilities. A human with this simple stone tool could process meat in minutes rather than hours, reach nutritious bone marrow that was previously inaccessible, and craft other tools in turn. This multiplicative advantage (one human with tools could outperform ten without) created overwhelming evolutionary pressure. Those with better thumb opposition and finger dexterity could create better tools, securing an advantage that accelerated both technological and biological evolution.

Today, we're seeing something similar with AI. These systems are having their own 'opposable thumb moment' as they gain the ability not just to know things, but to manipulate their environment through deliberate tool use.

From Locked Box to Open World

When large language models first emerged, they were impressive but limited. An early analogy was to think of them as a smart person trapped in a room, knowing only what they had learned before entering. Ask an early version of ChatGPT about current events, and it would apologize for its "cutoff date," the moment it was locked in the room.

From the beginning, the goal was clear: give our "person in the room" a way to reach beyond those four walls—to access current information and interact with the outside world. This challenge sparked the first wave of solutions: developer frameworks like LangChain and others that acted as intermediaries between the AI and external resources.

At my company, Boxcars, we built one of these frameworks for Ruby developers. These frameworks worked by creating structured patterns for the AI to follow when requesting external information or actions. They would parse the AI's natural language output, translate it into API calls to services like search engines or databases, and then feed the results back to the model. Essentially, we were building makeshift arms and hands for our room-bound intelligence.
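The parse, call, and feed-back cycle described above can be sketched in a few lines of Python. This is a minimal illustration and not the code of any particular framework: the `Action:` / `Action Input:` text format, the `search` stub, and the `run_step` helper are all assumptions made for the example.

```python
import re

# Hypothetical tool: a stand-in for a real search API call.
def search(query: str) -> str:
    return f"(stub) top result for {query!r}"

# Registry of the tools the developer has wired up in advance.
TOOLS = {"search": search}

# Assumed convention: the prompt asks the model to emit lines such as
#   Action: search
#   Action Input: weather in Boston
ACTION_RE = re.compile(r"Action:\s*(\w+)\s*\nAction Input:\s*(.+)")

def run_step(model_output: str) -> str:
    """Parse one model reply and return the observation to feed back."""
    match = ACTION_RE.search(model_output)
    if match is None:
        return "Observation: no tool requested"
    name, arg = match.group(1), match.group(2).strip()
    tool = TOOLS.get(name)
    if tool is None:
        # The model asked for something nobody coded a pathway for.
        return f"Observation: unknown tool {name!r}"
    return f"Observation: {tool(arg)}"

reply = "Thought: I need fresh data\nAction: search\nAction Input: weather in Boston"
observation = run_step(reply)
```

The `unknown tool` branch hints at the fragility of this approach: everything hinges on the model reproducing a text format exactly and on the developer having registered the tool ahead of time.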

But these early solutions had significant limitations. The frameworks required developers to anticipate every type of interaction the AI might need and code those pathways explicitly. The AI couldn't discover or learn new tools on its own—it could only use what developers had specifically built for it. Like the first crude stone tools of early humans, these integrations worked but were inflexible and often unreliable. We quickly realized that for true tool use to work, the capability needed to come from within the models themselves.

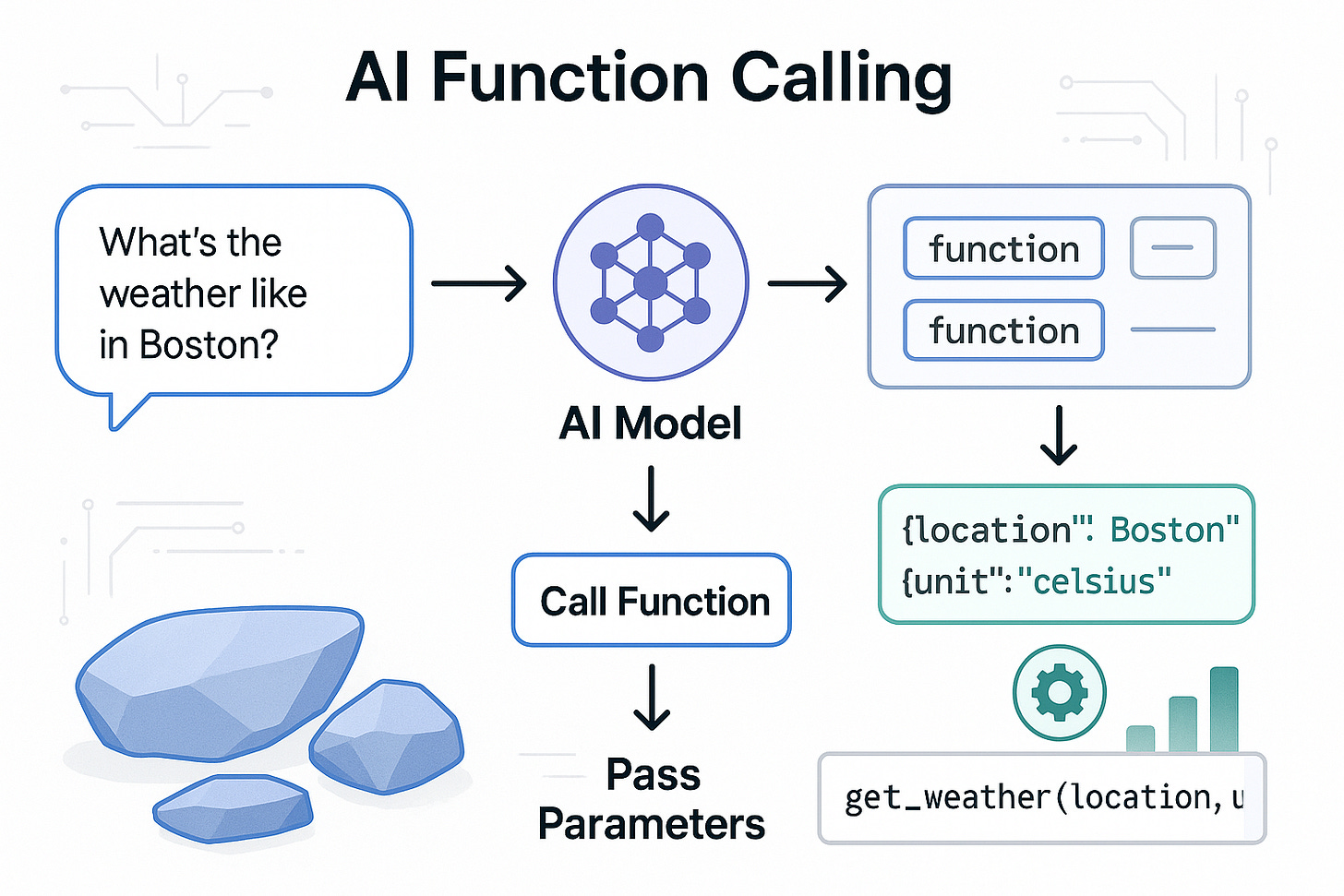

Models Add Function Calling

The model builders were already working on this problem. Rather than relying on external frameworks, they began training their models to natively understand and use tools. By June 2023, OpenAI had launched native function calling. This allowed developers to define functions that the model could call when appropriate, giving it a structured way to take actions beyond just generating text.

This was still very much a developer-oriented feature. It wasn't something the average end user could easily set up. You needed to understand both the model and the tools you wanted it to use, then write code to connect them.

Here's what a simple function definition for getting the weather might look like:

    functions = [{
        "name": "get_current_weather",
        "description": "Get the current weather in a given location",
        "parameters": {
            "type": "object",
            "properties": {
                "location": {"type": "string", "description": "City and state, e.g., San Francisco, CA"},
                "unit": {"type": "string", "enum": ["celsius", "fahrenheit"]}
            },
            "required": ["location"]
        }
    }]

It's like early humans learning to craft specialized stone tools - each tool required specific knowledge. Just as early humans needed to understand the properties of flint to create a cutting edge, developers needed to understand API specifications to create function calls. Each new tool required its own crafting process from scratch.
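The definition is only half of the work; the developer also has to run the function when the model asks for it. Here is a rough sketch of that application-side step. It assumes the model's request arrives as a name plus a JSON-encoded argument string, and the `dispatch` helper, the stubbed `get_current_weather` body, and the sample values are all hypothetical.

```python
import json

# Hypothetical local implementation; a real one would call a weather API.
def get_current_weather(location: str, unit: str = "fahrenheit") -> dict:
    return {"location": location, "temperature": 20, "unit": unit}

# Map the names advertised to the model onto real Python callables.
AVAILABLE = {"get_current_weather": get_current_weather}

def dispatch(function_call: dict) -> str:
    """Run the function the model asked for and return a JSON string result."""
    func = AVAILABLE[function_call["name"]]
    # The arguments are assumed to arrive as a JSON-encoded string.
    kwargs = json.loads(function_call["arguments"])
    return json.dumps(func(**kwargs))

# Illustrative shape of a model request (values are made up).
model_call = {
    "name": "get_current_weather",
    "arguments": '{"location": "San Francisco, CA", "unit": "celsius"}',
}
result = dispatch(model_call)
```

The string in `result` would then be sent back to the model as the function's output so it can compose a final answer.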

This turns out to be powerful. Last year I worked on a project with a model in a chat window. I was able to work faster, but it was on me to copy bits of code into the chat so the model could understand what I was building. This year, I use a VS Code plugin named Cline that wraps models with simple function calls (read files, list files, edit files, run commands, etc.). With just these simple function calls, the models can see the code I am writing and make changes alongside me. To say I've become 100x more productive would be to put it mildly.

But even with native function calling, we still faced a problem: every developer had to reinvent the wheel, creating their own function definitions and implementations. What we needed was standardization—a universal way for models to discover and use tools without requiring custom code for each integration. This is where Anthropic's announcement in late 2024 came in: MCP (Model Context Protocol).

MCP: The Universal Tool Handle

In November 2024, Anthropic announced the Model Context Protocol (MCP), a step forward in how AI systems interact with tools. Anthropic described it as a "USB-C port for AI applications," a fitting analogy for what it accomplishes.

MCP represents a shift in AI tool use. With earlier function calling, developers had to both specify what a tool does and implement how it works. MCP separates these concerns: the specification of what a tool does is standardized, while the implementation can vary. This is similar to how USB-C defines a standard connection, but what's on the other end of the cable can be anything from a hard drive to a monitor to a phone charger.

This separation of concerns creates a powerful ecosystem effect. Once the standard is established, anyone can create tools that work with any MCP-compatible AI system. The AI doesn't need to know the implementation details; it just needs to understand the tool's capabilities and interface.
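To make the split between interface and implementation concrete, here is a toy, in-process sketch of the two requests an MCP client sends to a server: `tools/list` to discover tools and `tools/call` to invoke one, both framed as JSON-RPC 2.0 messages. It is not the official SDK and skips transport, initialization, and validation; the `add` tool and the `handle` function are made up for illustration.

```python
# Tool metadata in the shape MCP uses: name, description, inputSchema.
TOOLS = [{
    "name": "add",
    "description": "Add two integers",
    "inputSchema": {
        "type": "object",
        "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
        "required": ["a", "b"],
    },
}]

# The implementation lives behind the interface; the client never sees it.
IMPLEMENTATIONS = {"add": lambda a, b: a + b}

def handle(request: dict) -> dict:
    """Answer a JSON-RPC 2.0 request for tools/list or tools/call."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        value = IMPLEMENTATIONS[params["name"]](**params["arguments"])
        result = {"content": [{"type": "text", "text": str(value)}]}
    else:
        # Standard JSON-RPC error code for an unknown method.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}

# A client first discovers what is available, then calls a tool by name.
listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
               "params": {"name": "add", "arguments": {"a": 2, "b": 3}}})
```

Swapping the lambda for a database query or a web request would change nothing on the client side, which is the point of the standard.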

The ecosystem took off quickly. Within months of MCP's release, developers had created servers for web search and browsing (Brave Search MCP server), file system access (allowing Claude to read and write files on your computer), image generation, database queries, calendar management, email integration, task management with Things, and Obsidian note-taking integration, among others. The MCP GitHub repository quickly became a hub for developers to share and discover new tools. By spring 2025, the community had created over 4,000 MCP servers according to PulseMCP, spanning many domains of digital functionality. Cline added MCP support shortly after the protocol was released, and I quickly found myself plugging in various MCP servers.

What's notable about MCP's impact is that even Anthropic's competitors have recognized its value. In March 2025, OpenAI announced it would adopt the MCP standard for connecting AI models to external data and tools, according to a TechCrunch report. Google followed suit, creating a moment of convergence among AI leaders. This cross-company adoption effectively establishes MCP as an industry standard for AI tool use, similar to how USB became the standard for physical device connections.

What makes this particularly powerful is the modular nature. MCP is like the development of standardized tool handles in human history. Just as standardized handles allowed early humans to swap different tool heads (axe, hammer, spear) onto the same shaft, MCP creates a universal connection point that allows AI models to use a wide variety of tools without needing custom integration for each one.

Perhaps the most remarkable aspect is that Cline can write its own MCP servers. I can ask it to create a new tool, and it will write the code to implement that tool as an MCP server. This is like giving a human not just tools, but the ability to craft new tools - a meta-capability that expands what's possible.

When humans developed the ability to create tools specifically designed to make other tools, it triggered an explosion of technological advancement. The same pattern is emerging with AI systems that can create their own tools. The feedback loop of capability enhancement accelerates with each iteration.

A2A Protocol: Coordinating Tool Users

While MCP was making waves in late 2024, Google was working on a complementary approach. In April 2025, it announced the Agent2Agent Protocol (A2A), designed to work alongside MCP while solving a different problem.

If MCP is about connecting AI to tools, A2A is about connecting AI to other AI. Google explicitly designed A2A to complement Anthropic's MCP, creating a more complete ecosystem for AI agents.

The relationship between these protocols is best understood through an analogy. Imagine we're in ancient Egypt, tasked with building a pyramid:

MCP is like the standardized tools and techniques that individual craftspeople use - the chisels, hammers, measuring instruments, and pulley systems. Each worker needs these tools to perform their specific tasks effectively.

A2A, on the other hand, is like the communication system between different specialized teams. The stone cutters need to coordinate with the transporters, who need to coordinate with the architects and the builders. Without effective communication between these teams, even the best individual tools won't result in a completed pyramid.

This is what A2A enables: it allows AI agents to communicate with each other in natural language, rather than only through rigid tool interfaces. One agent might say to another, "I need help analyzing this dataset," and the second agent can respond with clarifying questions about specific patterns or preferred analysis methods.

The protocol supports ongoing back-and-forth communication and evolving plans. Just as building a pyramid required constant adjustment and coordination, complex AI tasks often need multiple agents working together, adapting their approach as they go.
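The back-and-forth described above can be sketched as a small state machine. This is a deliberately simplified toy and not the A2A wire format: the `Task` record, the `analyst_agent` function, the keyword matching, and the state names are all illustrative assumptions.

```python
from dataclasses import dataclass, field

@dataclass
class Task:
    """Hypothetical task record shared by two agents."""
    state: str = "submitted"
    history: list = field(default_factory=list)  # (sender, text) pairs

# Analyses this toy specialist knows how to run.
METHODS = ("trend", "outlier")

def analyst_agent(task: Task, message: str) -> Task:
    """Toy specialist: asks a clarifying question until it knows the method."""
    task.history.append(("client", message))
    said = " ".join(text for sender, text in task.history if sender == "client").lower()
    chosen = next((m for m in METHODS if m in said), None)
    if chosen is None:
        # Not enough information yet, so hand the turn back to the requester.
        task.state = "input-required"
        task.history.append(("analyst", "Which analysis do you want: trend or outlier?"))
    else:
        task.state = "completed"
        task.history.append(("analyst", f"Ran {chosen} analysis (stub result)."))
    return task

task = analyst_agent(Task(), "I need help analyzing this dataset")
state_after_first_turn = task.state
task = analyst_agent(task, "Look for outliers, please")
```

The first turn ends with the specialist asking a question rather than failing, and the second turn completes the task. A single function call with a fixed schema cannot express that kind of negotiation.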

What makes A2A powerful is how it enables specialization. In human history, tool standardization allowed for specialized craftspeople, while language allowed these specialists to work together on projects no individual could complete alone. The same pattern is emerging in AI - MCP enables specialized tool use, while A2A enables collaboration between specialists.

Google's approach recognizes that complex problems require both good tools and good coordination. Tasks that were previously too complex for a single AI agent become possible when multiple specialized agents can collaborate effectively.

AI Using Computers Like Humans

The frontier of AI tool use is now moving toward systems that can use computers the way humans do—through interfaces designed for people rather than machines. Instead of creating specialized AI tools, this approach teaches AI to use the tools humans already have.

In October 2024, Anthropic introduced computer use capabilities for Claude 3.5 Sonnet. This allows Claude to interact with computers by looking at screenshots, moving a cursor, clicking buttons, and typing text. The AI counts pixels to determine how far to move the cursor to click in the right place.
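One small piece of this loop is easy to illustrate: turning a position the model picked on a screenshot into a real cursor position. The sketch below assumes the screenshot was downscaled before the model saw it, which the description above does not state. The `Click` type, the `to_screen_coords` helper, and the resolutions are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Click:
    """A click target expressed in pixel coordinates."""
    x: int
    y: int

def to_screen_coords(click: Click, shot_size: tuple, screen_size: tuple) -> Click:
    """Map a click chosen on a downscaled screenshot to real screen pixels."""
    shot_w, shot_h = shot_size
    screen_w, screen_h = screen_size
    return Click(round(click.x * screen_w / shot_w),
                 round(click.y * screen_h / shot_h))

# Hypothetical numbers: the model saw a 1280x800 image of a 2560x1600 display.
model_click = Click(x=640, y=400)
real_click = to_screen_coords(model_click, (1280, 800), (2560, 1600))
```

A full agent would repeat this many times: capture a screenshot, let the model choose an action, execute it, and look again.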

Just three months later, in January 2025, OpenAI released Operator, powered by their Computer-Using Agent (CUA) model. Operator can use its own browser to perform tasks like filling out forms, ordering groceries, or creating memes. It combines vision capabilities with reinforcement learning to interact with graphical user interfaces.

Google DeepMind had already joined the race in December 2024 with Project Mariner. Built with Gemini 2.0, it can understand and reason across everything on a browser screen, including text, code, images, and forms.

When AI can use computers like humans, it can potentially perform any digital task a human can perform. It can research, write, design, code, communicate, and create using the same software we use.

The Tools Make the Intelligence

We're witnessing AI's opposable thumb moment in real time. The progression from function calling to MCP to computer use represents an evolution in AI's ability to interact with its environment. Each step increases both capability and autonomy.

It's still early days, though. Computer use remains clumsy compared to human operation. MCP has security risks and buggy implementations. But function calling was just as unreliable two years ago, and those problems proved solvable.

By 2027, we'll likely have AI systems that can discover tools, coordinate with other agents, and build custom tools when needed.

When early humans developed tool use, it changed their evolutionary path. A species that might have remained just another primate became dominant on the planet.

Let's hope AI uses tools for good. 🤞🏽