The Automation Revolution No One Saw Coming: From Niche Computers to Ubiquitous AI

Charting the unforeseen evolution from an estimated total market of just five computers to AI assistants for everyone, this article traces technology's relentless growth.

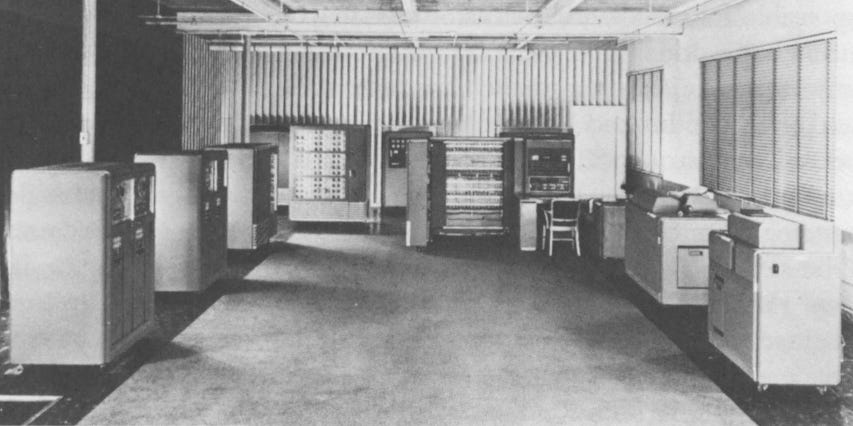

In 1952, the dawn of the computer age was marked by both tremendous potential and imposing limitations. That year, IBM unveiled its pioneering 701 Electronic Data Processing Machine, a hulking multi-part system that incorporated revolutionary technologies like magnetic tape storage. At an IBM shareholders meeting in 1953, company president Thomas Watson Jr. made a statement that would prove short-sighted, though understandable for its time. "I think there is a world market for about five computers," he reportedly said.

Watson's limited view of the 701's prospects reflected the economic realities of early computing. The system carried an exorbitant $16,000 monthly rental fee, equal to $163,000 in today's terms. This price tag restricted its use to only the most complex and valuable calculations that could justify the investment. Early adopters included Douglas Aircraft and the US Weather Bureau, which applied the 701's cutting-edge capabilities to problems worthy of its cost. For most organizations, acquiring one of these pioneering machines was an impossible extravagance.

Wright's Revelation: Scaling Up by Doubling Down

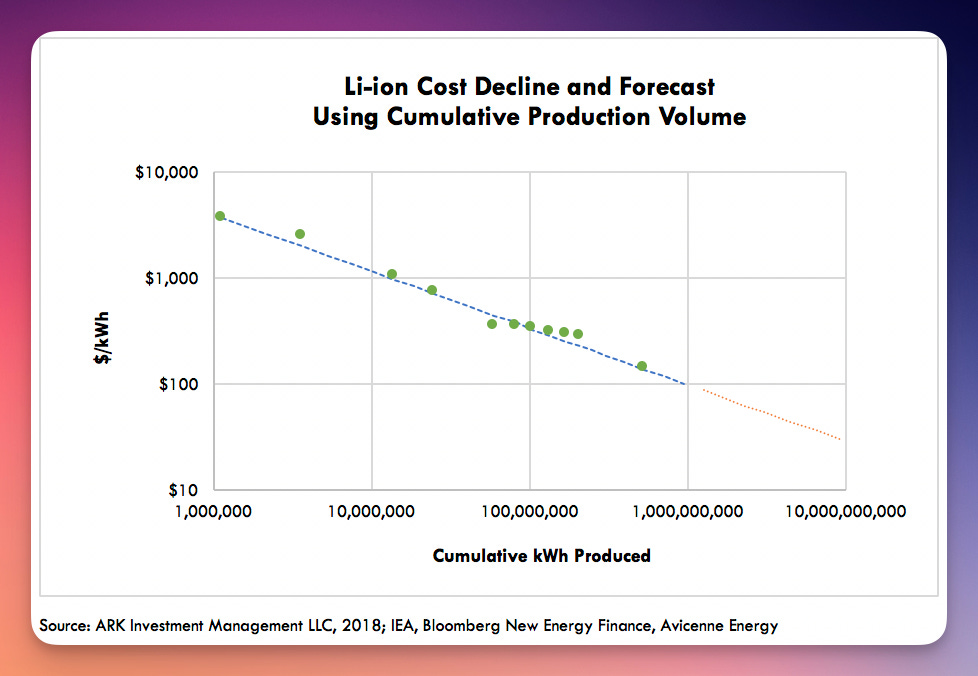

What Watson failed to foresee was the predictive power of Wright's Law, first detailed by engineer Theodore Wright in 1936. While studying airplane manufacturing, Wright discovered a constant relationship: for every cumulative doubling of units produced, costs fell by a consistent percentage. Specifically, Wright found labor costs declined 10-15% each time airplane production doubled.

In his paper "Factors Affecting the Cost of Airplanes," Wright laid out this systematic cost reduction, which would become known as Wright's Law or the experience curve effect. He determined that as organizations "learn by doing," producing more units drives down the cost per unit along a predictable curve. Applied to computing, this cycle meant that computing power would scale up rapidly as prices fell dramatically. The 701 launched computing on a trajectory where expanding production enabled exponentially greater capabilities at exponentially lower prices.
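To make the curve concrete, here is a minimal sketch of Wright's Law in its usual power-law form, where the cost of the n-th unit is the first unit's cost multiplied by n raised to log2(1 - r), and r is the fractional cost decline per cumulative doubling. The function name, the $100 starting cost, and the 15% rate (the upper end of the range quoted above) are illustrative assumptions, not figures taken from Wright's paper.

```python
import math


def wright_unit_cost(first_unit_cost: float, cumulative_units: float,
                     learning_rate: float) -> float:
    """Estimate the cost of the n-th unit under Wright's Law.

    learning_rate is the fractional cost decline per cumulative doubling
    of production (for example, 0.15 for a 15% decline).
    """
    if cumulative_units < 1:
        raise ValueError("cumulative_units must be at least 1")
    if not 0.0 <= learning_rate < 1.0:
        raise ValueError("learning_rate must be in [0, 1)")
    # Each doubling multiplies cost by (1 - learning_rate), so the
    # exponent on cumulative volume is log2(1 - learning_rate) <= 0.
    exponent = math.log2(1.0 - learning_rate)
    return first_unit_cost * cumulative_units ** exponent


if __name__ == "__main__":
    # Hypothetical inputs: a $100 first unit and a 15% decline per doubling.
    for doublings in range(0, 11, 2):
        units = 2 ** doublings
        cost = wright_unit_cost(100.0, units, 0.15)
        print(f"{units:>5} cumulative units -> ${cost:,.2f} per unit")
```

Under these assumed inputs, ten doublings (1,024 cumulative units) bring the unit cost down to roughly a fifth of its starting value, which is the kind of compounding the rest of this article relies on.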

The Software Scaling Cycle: Plummeting Costs, Soaring Demand

The cost-capability cycle that Wright uncovered would continue to play out across new frontiers of technology. As hardware became increasingly affordable thanks to scaling production, the bottleneck for progress shifted to software. In the early decades of computing, software was painstaking to construct by hand and prohibitively expensive to develop.

But just as hardware costs had fallen, innovations began driving down the cost of software development. In 1970, the US Census Bureau estimated there were only 450,000 IT professionals. Yet by 2024, Statista projects there will be 28 million software developers worldwide. This explosion in trained talent was accompanied by increasingly powerful tools for building software, making the process radically more efficient. Low-code and no-code platforms like Airtable, Zapier, and Coda now enable business users to customize solutions without coding expertise.

The compounding effects of cheaper hardware, abundant developers, and easy-to-use tools fueled a global software market that reached $610 billion in 2022. Much as Wright's Law would suggest, producing more software drove down costs and expanded capabilities in a virtuous cycle.

Software's Story is Just Getting Started

The software industry's explosive growth may seem impressive, but it pales compared to the total available market for automation. This boundless potential stems from software's unique capacity to augment human abilities and enable the previously impossible.

At its core, software automates tasks and processes, executing them faster, cheaper, and better than manual methods. But it also opens up entirely new realms of opportunity by allowing people to do things they simply could not do before. Take numerical weather forecasting, which humans first attempted in 1922 using paper calculations. It took six weeks to produce a six-hour forecast. Not until the 1950s did computers like ENIAC enable usable forecasting.

Software continues to push the frontiers of the possible across every domain. Though the software industry tops $500 billion, its true total addressable market is far larger when you consider the potential to optimize the 77% of US GDP that comes from services. Professional services alone illustrate software's vast runway. Categorized as NAICS code 54 by the Bureau of Labor Statistics, this sector encompasses technical services like legal, accounting, architecture, engineering, and consulting. It employs 10 million Americans and generates $2 trillion in revenue.

Accelerating Into the Future

Software's relentless march has brought us to the cusp of another breakthrough, this time enabled by artificial intelligence. While software automated basic tasks, AI researchers pushed neural networks to achieve far more complex capabilities. From handwriting recognition to code generation, AI models now excel at feats once thought impossible.

Unlike IBM's president in 1953, who reportedly saw only a tiny market for computers, we now understand that technology tends to grow more capable as it rapidly falls in cost. Problems unsolvable yesterday become solvable tomorrow. Rather than underestimating the future, visionaries like Bill Gates foresee new frontiers such as AI assistants for all. The coming years promise to be exciting as AI unlocks new realms of opportunity.