The AI Revenue Mirage: Today's Premium Features Are Tomorrow's Freebies

When success today doesn't guarantee tomorrow in the world of AI

Let's say you've built an AI product that has passed a million paid users and is still growing rapidly. You're set, right? Think again. Building an enduring business on a rapidly commoditizing AI tech stack is harder than it looks, and even tech giants like Microsoft and Google are facing this challenge head-on.

Last year, on Microsoft's earnings call, CEO Satya Nadella painted an optimistic picture of AI's revenue potential. He pointed to GitHub's success as a harbinger of things to come: its Copilot service had amassed 1.3 million paid subscribers and was helping drive GitHub revenue growth of more than 40% year-over-year. This wasn't just about GitHub. Microsoft quickly followed with Copilot for Microsoft 365 at $30 per month, while Google launched Gemini for Google Workspace starting at $20 per month for business users. Wall Street analysts were ecstatic, projecting $1.3 trillion in additional revenue for SaaS companies by 2033.

The premium pricing strategy seemed like a sure-fire way for tech companies to monetize AI. After all, if developers were willing to pay for Copilot, wouldn't knowledge workers pay for AI assistants in their daily tools? The market's enthusiasm suggested we were entering a new era of AI-driven revenue growth.

But last June, I wrote an article exploring a different future. Looking at the history of digital technology, particularly Wi-Fi's journey from premium service to free amenity, I argued that AI would follow a similar path to commoditization. The premium pricing wouldn't hold.

The Signs of Deflation

Less than a year later, that prediction is already coming true. Microsoft has bundled Copilot Pro ($20/month) directly into its personal Microsoft 365 subscription. Google quickly followed suit, integrating Gemini into Google Workspace plans with just a $2 price increase. Most tellingly, GitHub Copilot, the product that started this whole trend, is now available for free with usage limits, or for just $10 per month for unlimited use. The race to zero is happening faster than anticipated.

The Digital Deflation Engine

To understand this rapid commoditization, we need to understand a fundamental truth about digital technology: it's inherently deflationary. Peter Diamandis captures this in his 6D model of exponential growth. The key insight is that once something becomes digitized, it inevitably leads to demonetization.

Why? Because digital technology has a unique property: the cost of incremental production approaches zero. Unlike manufacturing physical goods, where each new widget requires new materials and energy, digital products can be replicated essentially for free. The GPUs powering AI computations, once provisioned, are a sunk cost. Each additional query or generation adds minimal marginal cost.
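The arithmetic behind that claim can be sketched in a few lines. This is a minimal illustration, not a real cost model: the function name and both dollar figures are hypothetical, chosen only to show how a fixed hardware cost spread over more and more queries pushes the average cost per query down toward the marginal cost.

```python
def average_cost_per_query(fixed_cost: float, marginal_cost: float, queries: int) -> float:
    """Average cost per query: the fixed cost spread over all queries, plus the marginal cost."""
    if queries <= 0:
        raise ValueError("queries must be positive")
    return fixed_cost / queries + marginal_cost


# Hypothetical figures for illustration only (not taken from any vendor).
FIXED_COST = 1_000_000.0  # assumed sunk hardware cost, in dollars
MARGINAL_COST = 0.001     # assumed cost of serving one more query, in dollars

for n in (1_000, 1_000_000, 1_000_000_000):
    cost = average_cost_per_query(FIXED_COST, MARGINAL_COST, n)
    print(f"{n:>13,} queries: ${cost:,.4f} per query")
```

As volume grows, the fixed-cost term shrinks toward nothing and the average converges on the marginal cost, which is the floor that competitive pricing tends to drift toward.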

This pattern has played out repeatedly in the digital realm. Music streaming services transformed $15 CDs into unlimited listening for a fraction of the cost. Digital photography eliminated per-print costs, making it possible to take thousands of photos without worry. Cloud storage turned gigabytes of space, once sold at premium prices, into an essentially free commodity. And now we're seeing it happen with AI at an unprecedented pace.

The Value Migration

This rapid commoditization of AI capabilities doesn't mean there's no money to be made. Just as Wi-Fi's commoditization created opportunities for businesses built on the assumption of ubiquitous connectivity (think Netflix or Zoom), AI's commoditization will enable new business models we haven't yet imagined.

The key is understanding where value migrates. In the Wi-Fi era, value shifted from providing access to building services on top of that access. With AI, we're seeing a similar pattern emerge. Value is moving away from the base models themselves and toward other areas: specialized fine-tuning for specific domains, novel interfaces and interaction patterns, integration and workflow automation, and the competitive advantages that come from proprietary data and training sets.

The Prediction Accelerates

What's surprising isn't that the commoditization is happening; it's the speed. The forces of digital deflation, combined with unprecedented investment and open-source collaboration, are compressing what took Wi-Fi a decade into perhaps two to three years.

Here's that article in full, which explores this pattern in depth.

From Premium to Commodity: The GenAI Trajectory Through the Lens of Wi-Fi

If you were to believe the current hype, Generative AI is set to revolutionize SaaS, with projections of $1.3 trillion in new revenue by 2033. It's a tantalizing prospect that has CEOs and investors seeing potential. Early signs seem promising.

Take Microsoft, for instance. On its fiscal 2024 Q2 earnings call, CEO Satya Nadella reported that "GitHub revenue accelerated over 40 percent year-over-year, driven by all-up platform growth and adoption of GitHub Copilot, the world's most widely deployed AI developer tool." He revealed that GitHub Copilot had amassed over 1.3 million paid subscribers, up 30 percent quarter-over-quarter, with more than 50,000 organizations using GitHub Copilot Business to boost their developers' productivity.

At first blush, GenAI seems like a total boon for all SaaS providers. The strategy appears simple: add a layer of AI on top of existing products and watch the revenue surge. However, before we get carried away with visions of endless profit streams, it's worth taking a step back and considering the long arc of technology adoption.

History shows us that sustained pricing power for new technology is not guaranteed. What starts as a premium offering can quickly become a table-stakes feature, eroding its price point and competitive value. To understand this trajectory, we need look no further than another breakthrough technology: Wi-Fi.

So how does technology transition from a premium offering to a commoditized feature? To understand this process, we'll examine it through the lens of Geoffrey Moore's "Crossing the Chasm" framework, which outlines the stages of technology adoption from Innovators to Laggards.

By applying this framework to the evolution of Wi-Fi and drawing parallels to the current AI boom, we can gain insights into the potential future of AI monetization. We'll explore how each stage of adoption brings unique challenges and opportunities, from the initial excitement of early adopters to the pressure of commoditization as the technology reaches the mainstream.

Early Adopters: The Pioneers of Premium Pricing

In the technology adoption lifecycle, early adopters are visionaries who see the potential of new technology before it becomes mainstream. These individuals and organizations are willing to pay a premium for cutting-edge solutions that promise a competitive advantage or significant improvement in their operations. They're not just buying a product; they're investing in potential.

The story of Wi-Fi's early days illustrates this phenomenon. In the early 2000s, Wi-Fi was transitioning from a niche technology to a mainstream innovation. The adoption of the 802.11b standard in 1999 laid the groundwork for widespread Wi-Fi use in offices and homes. The technology got a significant boost in 2003 when Intel unveiled the Centrino chipset with built-in Wi-Fi support, making it easy for PC manufacturers to include wireless capabilities in their laptops.

However, having Wi-Fi-enabled devices was only half the equation. Business travelers, the quintessential early adopters in this scenario, were eager to use their new capabilities but faced a scarcity of access points. Enter T-Mobile USA, which in 2002 launched an ambitious Wi-Fi hotspot network.

T-Mobile's pricing reflected the premium nature of the service:

• Pay-as-you-go: $6 for a one-hour pass

• Day Pass: $9.99 for 24 hours of access

• Monthly subscription: $29.99 for unlimited access at any T-Mobile hotspot

These prices may seem high by today's standards, but for early adopters, the ability to connect to the internet while on the go was worth the cost. The potential was so promising that one analyst predicted U.S. hotspots would generate almost $3 billion in revenues from 13 million users by 2007 (ah! the quaint days at the turn of the century when TAMs could be less ambitious!).
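As a rough sanity check on that forecast, the quoted figures can be turned into revenue per user. The sketch below uses only numbers from the text, plus one assumption that the source does not state: that the $3 billion describes a single year of revenue.

```python
# Figures quoted in the text above.
FORECAST_REVENUE = 3_000_000_000  # "almost $3 billion", so treat this as an upper bound
FORECAST_USERS = 13_000_000       # 13 million users
MONTHLY_PLAN = 29.99              # T-Mobile unlimited monthly subscription
DAY_PASS = 9.99                   # T-Mobile 24-hour pass

# Assumption: the forecast describes one year of revenue (2007).
revenue_per_user = FORECAST_REVENUE / FORECAST_USERS
annual_subscription = MONTHLY_PLAN * 12
share_of_full_subscription = revenue_per_user / annual_subscription
day_passes_equivalent = revenue_per_user / DAY_PASS

print(f"Implied revenue per user per year: ${revenue_per_user:,.2f}")
print(f"Full-year monthly subscription:    ${annual_subscription:,.2f}")
print(f"Share of a full subscription:      {share_of_full_subscription:.0%}")
print(f"Equivalent day passes per year:    {day_passes_equivalent:.1f}")
```

Under that reading, the forecast implies about $230 per user per year, a bit under two thirds of a full-year subscription, or roughly 23 day passes. In other words, the projection depended on the average user continuing to pay premium prices.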

Fast forward to today, and we see a similar pattern emerging with Generative AI. Just as Wi-Fi promised to revolutionize mobile connectivity, GenAI is poised to transform how we interact with and create digital content.

Consider the case of Jasper.ai, founded in 2021 following the launch of GPT-3. Jasper's Boss Mode Plan, priced at $119 per month, exemplifies the premium pricing strategy typical of the early adoption phase. Despite the high cost, Jasper saw remarkable growth:

• Reached $1 million ARR (Annual Recurring Revenue) within 3 months of launch

• Hit $10 million ARR by July 2021, just 6 months after launch

• By October 2022, secured a $125M Series A funding round, valuing the company at $1.5B

This rapid growth and high valuation underscore the willingness of early adopters to pay a premium for cutting-edge AI capabilities. For these pioneers, tools like Jasper offer a potential edge in content creation and marketing, justifying the high cost.

In both the Wi-Fi and GenAI examples, we see how early adopters drive the initial phase of a technology's market penetration. Their willingness to pay premium prices not only provides crucial revenue for further development but also validates the technology's potential, paving the way for broader adoption in the next phases of the market lifecycle.

Early Majority: Bundling and Strategic Partnerships

As a technology moves beyond early adopters and into the early majority phase, companies often employ bundling strategies and strategic partnerships to expand their user base. This phase is crucial for crossing the "chasm" between early adopters and the mainstream market.

In the case of Wi-Fi, T-Mobile's approach exemplifies this strategy. Recognizing the need to reach a broader audience, T-Mobile forged partnerships with high-traffic businesses to create a network of premium Wi-Fi access points. This move was not just about expanding coverage; it was about integrating Wi-Fi into everyday locations where people already spent time.

Starbucks became the poster child for this strategy. In 2002, the coffee giant announced plans to equip 1,200 of its stores with T-Mobile HotSpot service. By 2004, this number had grown to over 3,400 locations across the United States. This partnership was transformative for both companies. For T-Mobile, it provided instant access to millions of potential users in a comfortable, familiar setting. For Starbucks, it added a new amenity that could attract and retain customers, potentially increasing visit duration and frequency.

Other companies followed suit. Boingo Wireless partnered with major airports, reaching travelers at key connection points. Barnes & Noble teamed up with AT&T to offer Wi-Fi in its bookstores, creating a new draw for customers to spend time browsing and reading. These partnerships helped normalize the idea of public Wi-Fi, pushing it from a niche service for tech-savvy business travelers to an expected amenity for the general public.

Fast forward to today, and we see similar strategies unfolding in the world of Generative AI. Microsoft and OpenAI's approach with GitHub Copilot serves as an example.

In June 2021, GitHub (owned by Microsoft) and OpenAI announced a technical preview of GitHub Copilot, an AI-powered coding assistant built on OpenAI's Codex model. The tool was strategically integrated into Microsoft's Visual Studio Code, one of the most popular code editors, used by millions of developers worldwide.

The impact was immediate and substantial. GitHub's research found that developers using Copilot completed tasks 55% faster than those who didn't. An overwhelming 77% of users said they wouldn't want to give it up, and 88% said they would choose Copilot over monthly free lunches at work. Perhaps most strikingly, 30% of developers said access to Copilot would influence their choice of employer, highlighting its perceived value in the job market.

These statistics underscore how quickly Copilot moved from a novel tool to an essential productivity enhancer for many developers. By October 2023, Microsoft was tracking towards more than $100M of Annual Recurring Revenue (ARR) from Copilot.

Microsoft and OpenAI's strategy didn't stop there. Recognizing the potential, they expanded the Copilot concept across the product suite. They introduced Copilot for Microsoft 365 at $30/month per user, aiming to add AI capabilities (and additional revenue) to their vast existing customer base.

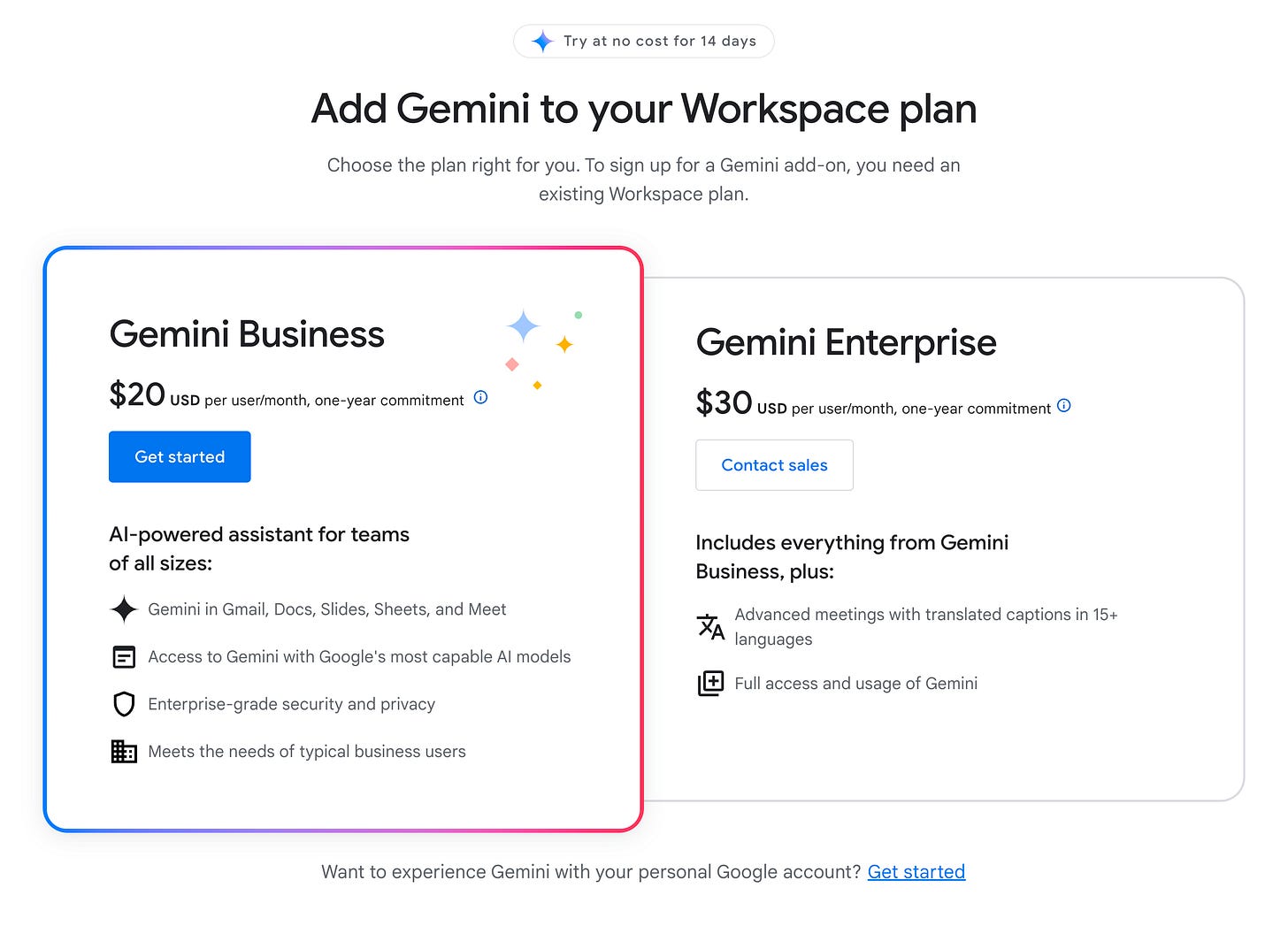

Google has adopted a similar strategy, pricing its Gemini AI model as an add-on for existing products, ranging from $20 to $30 per user per month. This approach not only provides additional value to current customers but also creates a new revenue stream without the need to build an entirely new user base.

As we observe these strategies, it's clear that both the Wi-Fi and AI industries are following a similar playbook in their early majority phase: leverage existing user bases, bundle with popular services, and position the technology as an essential productivity tool rather than a luxury add-on. This approach is crucial for bridging the gap between early adopters and the mainstream market, setting the stage for widespread adoption and, eventually, commoditization.

Late Majority: The Beginning of Commoditization

As a technology reaches the late majority phase, it transitions from a premium offering to an expected feature. This shift often marks the beginning of commoditization, where the technology becomes so ubiquitous that it's no longer a differentiator but a standard part of the service offering.

In the case of Wi-Fi, this transition became evident around 2008. By this time, Wi-Fi had become so integral to the coffee shop experience that Starbucks, once a pioneer in offering premium Wi-Fi services, decided to change its strategy. In a move that signaled the shifting landscape, Starbucks ended its exclusive agreement with T-Mobile and partnered with AT&T to offer two hours of free Wi-Fi daily to Starbucks Rewards Program members. This shift mirrored the evolution of cell phone plans, which initially metered usage by minutes and charged for overages. The hospitality industry also adapted, with many hotel chains offering free Wi-Fi as part of their rewards programs.

The commoditization of Wi-Fi accelerated with initiatives from tech giants like Google and Facebook. Google's Station project and Facebook's Express Wi-Fi program aimed to bring free or affordable Wi-Fi to developing countries. These initiatives weren't just altruistic; they were strategic moves to expand internet access and, by extension, increase the user base for their core products.

In the U.S., internet service providers like Comcast took a different approach. In 2014, Comcast began offering routers to its home internet customers that broadcast both a private signal and a public "xfinitywifi" signal, effectively creating a vast network of free Wi-Fi access points for their customers.

As we turn to the world of Generative AI, we see similar patterns emerging. SaaS companies are adopting strategies reminiscent of the Wi-Fi commoditization playbook to introduce AI features to their customers.

Buffer, a social media management platform, offers its AI features on all plans, including its free tier, but limits free users to 100 uses. This strategy mirrors the "freemium" model that became popular during the late stages of Wi-Fi adoption. Box, a cloud content management service, provides a certain number of AI credits with each plan, charging for usage above that threshold. Intercom, a customer communications platform, offers AI features at all product tiers but charges a $0.99 resolution fee per incident resolved with AI.
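The three approaches above amount to three different billing rules. The sketch below is illustrative only: the function names are hypothetical, the 100-use cap and the $0.99 fee come from the text, and the Box-style credit allowance and overage price are left as parameters because the text does not give them.

```python
def freemium_allowed(uses_so_far: int, free_limit: int = 100) -> bool:
    """Buffer-style cap: a free user may keep using AI until the limit is reached."""
    return uses_so_far < free_limit


def credits_with_overage(credits_used: int, included_credits: int, overage_price: float) -> float:
    """Box-style allowance: included credits cost nothing extra; usage above them is billed."""
    return max(0, credits_used - included_credits) * overage_price


def per_resolution_fee(resolutions: int, fee: float = 0.99) -> float:
    """Intercom-style outcome pricing: a flat fee for each incident resolved with AI."""
    return resolutions * fee


# Example usage. The credit allowance and overage price are hypothetical values.
print(freemium_allowed(uses_so_far=99))
print(freemium_allowed(uses_so_far=100))
print(credits_with_overage(credits_used=120, included_credits=100, overage_price=0.50))
print(round(per_resolution_fee(resolutions=200), 2))
```

The design difference is what gets metered: the first rule caps access, the second bills for volume above an allowance, and the third bills only for a successful outcome.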

These varied approaches reflect the evolution of AI from a premium feature to a table-stakes one. Companies are realizing that locking AI up behind a premium package isn't ideal. For instance, Buffer likely calculated that the long-term value of customers using their AI product outweighs the potential revenue from charging for a premium AI package that only some customers would buy.

Just as we saw with Wi-Fi, companies are experimenting with different models to introduce AI capabilities to their user base, seeking the right balance between monetization and widespread adoption. However, if history is any guide, this phase of metered or tiered access to AI features may be temporary. Cell phone plans that originally metered by minutes eventually moved to unlimited usage. As we'll see in the next section, to capture the laggards, companies will likely build AI into their products for free with no limits, further pushing AI towards commoditization.

Laggards: When Free Becomes the New Normal

In the technology adoption lifecycle, laggards are the last group to embrace new innovations. They're not so much choosing to adopt as they are swept up in a world where the technology has become ubiquitous. Starting in 2008, for example, Apple began shipping laptops without Ethernet ports, making Wi-Fi essentially mandatory.

By 2010, the Wi-Fi landscape had shifted again. Starbucks, once a pioneer in premium Wi-Fi services, transitioned to offering completely free Wi-Fi for all customers. This move signaled the final stage of Wi-Fi commoditization. Paying for Wi-Fi became a quaint vestige of a bygone era, like using a paper map or blowing into a game cartridge.

The concept of premium Wi-Fi didn't disappear entirely; it just migrated to niche markets. Today, you'll find it on airplanes and in luxury hotels, where the captive audience still allows for a premium model. Meanwhile, T-Mobile, once synonymous with Wi-Fi hotspots, repurposed the "hotspot" branding for other products and services in our now Wi-Fi-saturated world.

In the realm of Generative AI, we're witnessing a similar, albeit accelerated, progression towards free access. The most visible example is in AI-powered search, with tech giants like Microsoft and Google racing to integrate AI capabilities into their search engines.

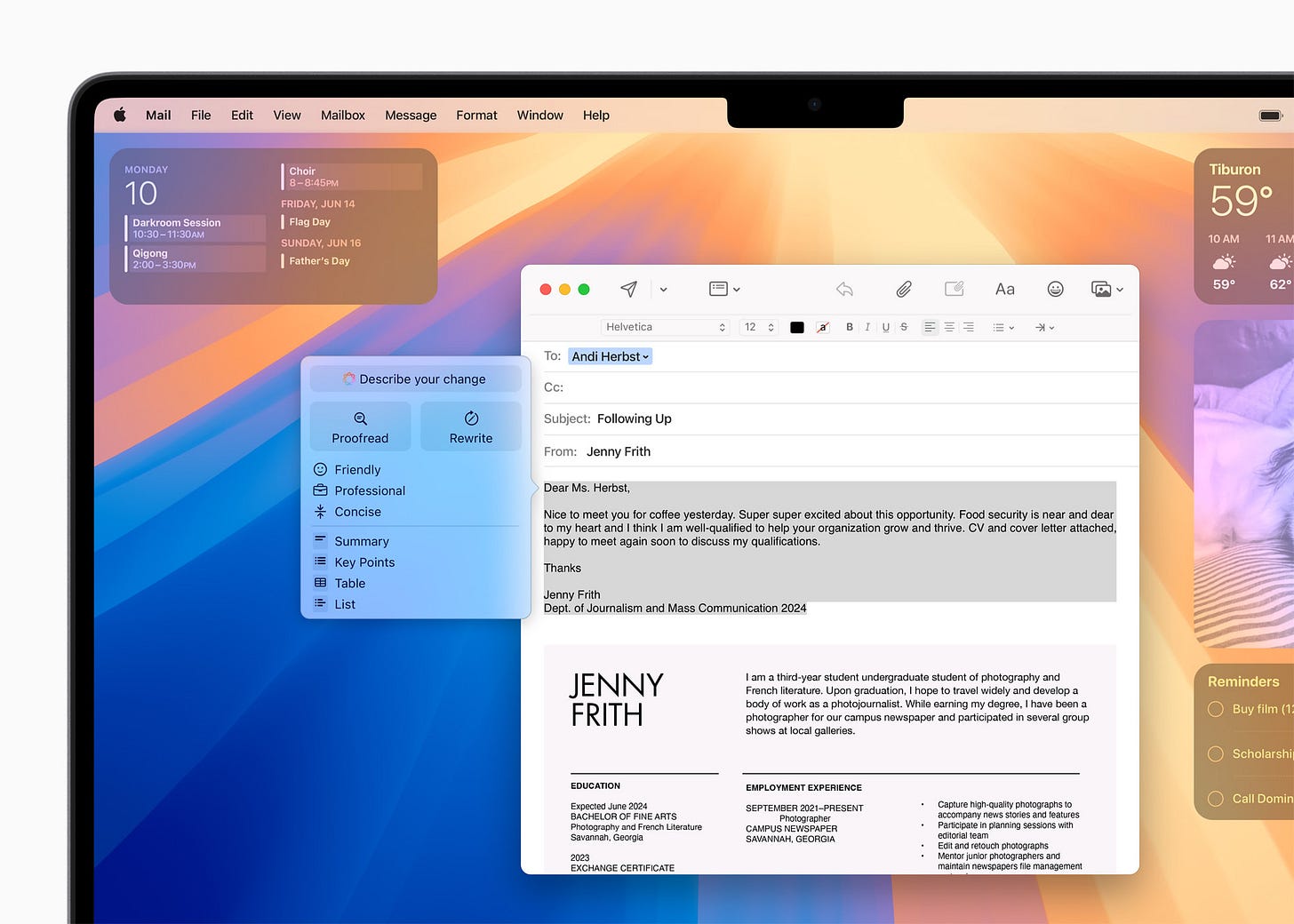

However, perhaps the most intriguing approach comes from Apple. Rather than joining the race to build the most powerful AI model, Apple is speaking directly to the laggards by seamlessly integrating AI capabilities into their operating system. They've even rebranded it as "Apple Intelligence," deliberately avoiding the term "Artificial Intelligence" to make it feel like a natural extension of their ecosystem rather than a novel, potentially intimidating technology.

Apple's strategy is particularly clever. They've developed smaller, on-device models, which allows them to sidestep the thorny issues of data privacy and server expenses that plague cloud-based AI solutions.

By raising the minimum bar of what an OS can do for free, Apple is essentially daring Microsoft and Google to follow suit. However, Apple also has a strategic advantage: their AI isn't actually free. It comes with a hardware tax: it's only available on iPhone 15 Pro and newer models.

This strategy isn't limited to Apple. SaaS providers are also adopting similar approaches. Dropbox, for instance, is developing Dash, an AI-powered search feature that will be available to all users for free. Canva is building its own text-to-image model on the open-source Stable Diffusion model, giving it flexibility in pricing AI features.

The viability of this strategy is largely thanks to the burgeoning open-source AI ecosystem. The development of open-source models like Mistral and Meta's Llama 2 is democratizing access to powerful AI capabilities. These models, while smaller than behemoths like GPT-4, can still perform a wide range of tasks effectively. Their open-source nature allows companies to fine-tune them for specific use cases without the need for massive computational resources or data sets.

The Inevitable March to Zero

As we've seen through our journey from Wi-Fi to GenAI, the arc of technology adoption follows a pattern. What begins as a premium product, like Jasper's AI writing assistant, evolves into a value-add package such as Microsoft's Copilot for Microsoft 365. But the progression doesn't stop there. As the technology matures and becomes more integral to the product's functionality, it inevitably becomes bundled in, first with some consumption limits, but eventually as a standard feature.

What's particularly fascinating about GenAI is the speed of this transition. While Wi-Fi's commoditization wave took about 8-10 years to fully materialize, we're seeing a much faster progression with GenAI. Just four years after GPT-3's launch in 2020, we're already witnessing the early signs of free, all-inclusive AI access in everyday systems. This accelerated timeline reflects not only the rapid pace of AI development but also changed consumer expectations in our increasingly digital world.

So what should we expect moving forward? For customers of SaaS products, this trend promises more options and potentially lower prices as AI features become standard offerings rather than premium add-ons. The integration of AI into everyday software will likely lead to enhanced productivity and new capabilities that we can't yet fully imagine.

For SaaS companies, the implications are more complex. As we've seen with Apple's strategy, the first mover to "free" AI can force the entire market to respond. This means that SaaS providers need to be proactive in their AI strategy. They must consider how they'll respond if a competitor bundles in AI features for free, and how they can leverage AI to enhance their core product offering rather than treating it as a separate, chargeable feature.

Ultimately, the commoditization of GenAI doesn't mean the end of innovation or profit in this space. Just as free Wi-Fi became the foundation for new business models and services, ubiquitous AI is likely to spawn its own ecosystem of novel applications and opportunities. The key will be in how companies leverage this technology to create unique value for their customers, beyond the AI itself.