So What If We're Stochastic Parrots?

How AI learned to build worlds from words

In the summer of 2020, artificial intelligence researchers were giddy with excitement. OpenAI had just released GPT-3, a language model so large and capable that it seemed to border on magic. With 175 billion parameters—two orders of magnitude larger than its predecessor—GPT-3 could write poetry, generate code, and answer complex questions with startling fluency. "Playing with GPT-3 feels like seeing the future," tweeted one developer, capturing the zeitgeist of a field convinced it was witnessing a breakthrough.

But not everyone was swept up in the excitement. In March 2021, as the tech world continued to marvel at GPT-3's capabilities, Emily Bender, a computational linguist at the University of Washington, and her colleagues published a paper with a deliberately provocative title: "On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?"

The term "stochastic parrot" was their answer to the hype: a vivid and damning metaphor. "Stochastic" comes from a Greek word for guessing or aiming at a target and, in statistics, describes a process governed by chance; "parrot" implies mindless repetition without understanding. These systems, Bender and her coauthors argued, were essentially sophisticated mimics, capable of producing plausible-sounding language without any real comprehension of what they were saying. Just parrots, albeit ones trained on a vast swath of the internet, regurgitating variations of what they'd heard before.

The critique was sharp, well-reasoned, and, it seemed, fundamentally correct. When language model output is accurate, Bender would later explain, "that is just by chance. You might as well be asking a Magic 8 ball." On this view, the models don't understand meaning; they're pattern-matching machines that stitch together linguistic forms based on statistical correlations.

The metaphor stuck because it felt intuitively right. When you watch a language model generate text, it does seem like elaborate autocomplete. I've made this argument myself. The models are impressive, sure, but they're fundamentally just predicting the next word based on statistical patterns in their training data. They're not thinking; they're computing probabilities. They don't understand the world; they predict text about the world. The distinction seemed crucial.
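To make "predicting the next word based on statistical patterns" concrete, here is a minimal sketch of the most literal stochastic parrot imaginable: a bigram model that counts which word follows which and then samples from those counts. The corpus, the function names `train_bigram` and `parrot`, and the fixed seed are all illustrative assumptions; real language models are neural networks trained on vastly more text, not lookup tables like this one.

```python
import random
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the text."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def parrot(follows, start, length, seed=0):
    """Generate up to `length` words, sampling each next word by its count."""
    rng = random.Random(seed)  # fixed seed (an assumption) for repeatable runs
    out = [start]
    for _ in range(length - 1):
        options = follows.get(out[-1])
        if not options:  # the last word never had a successor in the corpus
            break
        candidates, weights = zip(*options.items())
        out.append(rng.choices(candidates, weights=weights)[0])
    return " ".join(out)

# Toy corpus, invented for illustration only.
corpus = "the cat sat on the mat and the dog sat on the rug"
model = train_bigram(corpus)
print(parrot(model, "the", 8))
```

Every pair of adjacent words this toy produces already appears somewhere in its corpus. It can recombine, but it has nothing to reason with, which is roughly the picture the metaphor paints.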

And yet, here's what Bender and her colleagues couldn't have anticipated: to be really good at predicting what comes next, you have to understand what's actually happening.

Consider this spatial reasoning challenge: "You're standing at the entrance of a university campus. The library is 200 meters north of you. The cafeteria is 150 meters east of the library. The parking lot is directly south of the cafeteria. If you need to get from the library to the parking lot, which direction should you head?"

Southeast. Any capable language model will tell you this, because the parking lot lies east of the library (in line with the cafeteria) and south of it. But here's what's remarkable: this specific campus layout, with these exact distances and relationships, almost certainly doesn't exist in the training data. The model isn't retrieving a memorized map. It's constructing a spatial mental model, placing landmarks in relation to each other, and calculating routes through that imagined space.
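The bookkeeping behind that answer can be sketched in a few lines of Python. This is only an illustration of the geometry, not a claim about what happens inside a model. The puzzle never says how far south the parking lot is, so the 200 meters used here is an assumption, as is the helper name `heading`.

```python
import math

# Coordinates in meters: x grows to the east, y grows to the north.
you = (0, 0)
library = (you[0], you[1] + 200)            # 200 m north of you
cafeteria = (library[0] + 150, library[1])  # 150 m east of the library
# ASSUMPTION: the puzzle gives no distance for this leg; 200 m is made up.
parking_lot = (cafeteria[0], cafeteria[1] - 200)

def heading(src, dst):
    """Return the compass direction (e.g. 'southeast') from src to dst."""
    dx, dy = dst[0] - src[0], dst[1] - src[1]
    ns = "north" if dy > 0 else "south" if dy < 0 else ""
    ew = "east" if dx > 0 else "west" if dx < 0 else ""
    return (ns + ew) or "here"

direction = heading(library, parking_lot)
distance = math.hypot(parking_lot[0] - library[0], parking_lot[1] - library[1])
print(direction, distance)  # southeast 250.0
```

Whatever distance you pick for the last leg, the parking lot stays east and south of the library, so the answer remains southeast; only the exact bearing and distance change.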

The stochastic parrot, it turns out, learned to understand the world in order to parrot it better.

This isn't just speculation. In 2022, researchers at Harvard and MIT trained a model to play legal moves in the board game Othello simply by predicting the next move in millions of game transcripts. The model was never explicitly taught the rules of Othello, never shown a board, never told what constitutes a legal move. It just learned to predict what move typically came next in a sequence.

Yet when researchers probed the model's internal activations, its "brain," they found something striking: it had spontaneously developed an internal representation of the board state. Not only could it predict legal moves, but it had built a working model of the game, tracking which squares were occupied, which pieces belonged to which player, and how the board changed after each move. Follow-up research revealed that these representations were organized around the player to move: the model had learned to think in terms of "my pieces" versus "their pieces," encoding the game from a player's perspective.

The model wasn't just pattern matching. It was world building.

The Paradox of Sophisticated Mimicry

If these models are just predicting tokens based on text patterns, how can they understand anything about the physical world? Text doesn't contain gravity or spatial relationships—only words about them.

This is where the "stochastic parrot" critique reveals its own contradiction. To be really good at predicting what humans would write about the world, you need to understand how the world actually works.

Language models can navigate complex spatial environments described in words, solve novel mathematical problems by understanding structure rather than memorizing solutions, and exhibit "emergent abilities" that appear suddenly at scale without explicit training.

The pattern is consistent: to predict human language about the world, models end up modeling the world itself.

The Mirror Test

The "stochastic parrot" metaphor was meant to diminish AI systems, to show them as mere mimics without true understanding. But perhaps the most unsettling implication of this critique isn't what it says about AI—it's what it says about us.

If language models are just sophisticated pattern matchers, what does that make human intelligence? We also learn from examples, extract patterns from experience, and build internal models of how the world works. Even our primary tool for understanding—language itself—shapes how we think.

When ChatGPT launched in late 2022 and took the world by storm, Sam Altman, OpenAI's CEO, seemed to acknowledge this uncomfortable parallel. "i am a stochastic parrot, and so r u," he tweeted, perhaps recognizing that the critique Emily Bender had leveled at AI systems applied just as well to human cognition.

Consider what happens when programmers switch languages. I moved from Java to Ruby years ago and found that my ability to think about algorithms changed. Java's verbose syntax had trained me to think in rigid hierarchies; Ruby's fluid nature suddenly made me see elegant one-line solutions that would have been awkward to even conceive in Java.

This isn't just personal preference. The Sapir-Whorf hypothesis holds that the language we speak shapes how we think; in the terms of this essay, language acts as "training data" that configures our mental models. Speakers of Guugu Yimithirr, an Aboriginal Australian language, don't use relative directions like "left" or "right." Instead, they always use absolute directions: north, south, east, west. As a result, they maintain an internal compass at all times, pointing north with remarkable accuracy even in unfamiliar locations, a skill that seems almost supernatural to English speakers who think in terms of "turn left at the coffee shop."

The language appears to have rewired their spatial cognition, just as programming languages rewire how developers approach problems.

But the most striking evidence comes from neuroscience: from studying the original neural networks, the wet neurons that inspired our artificial ones.

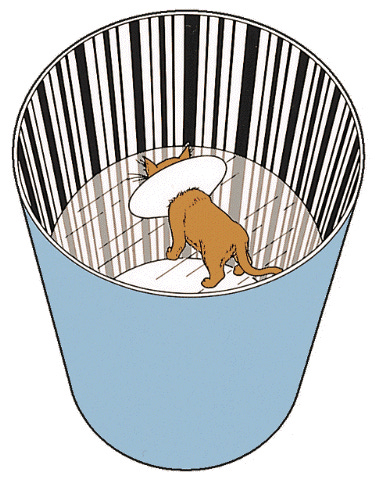

In 1970, researchers Colin Blakemore and Grahame Cooper reported an experiment that would be considered ethically unthinkable today. They raised newborn kittens in cylindrical chambers lined entirely with vertical black and white stripes. For the first few months of their lives, these kittens saw nothing but vertical lines: no horizontal edges, no diagonal patterns, no complex shapes.

When the researchers finally released them into normal environments, the results were both fascinating and heartbreaking. The cats were behaviorally blind to horizontal lines. They would bump into horizontal surfaces like table tops and low barriers as if they didn't exist, though they could navigate around vertical objects like chair legs just fine.

The limited visual "training data" had physically rewired their neural architecture. The neurons that should have responded to horizontal orientations simply never developed. Their brains had been configured by their experience, much as training data configures an artificial neural network.

Human brains work the same way. We're biological pattern-matching systems whose neural architecture is literally shaped by our experience. When a child learns that objects fall when dropped, they're not accessing some innate physics engine—they're building neural pathways through repeated observations, just like those kittens learning to see.

Knowing About the World vs. Being in the World

But there's a crucial distinction between knowing about the world and being in the world.

All of the language model's vast knowledge comes from descriptions, stories, and explanations—information about the world, not experience in the world. These models know about tables from reading millions of text descriptions, but they've never bumped into one. They understand gravity from processing countless physics explanations, but they've never felt the stomach-dropping sensation of falling.

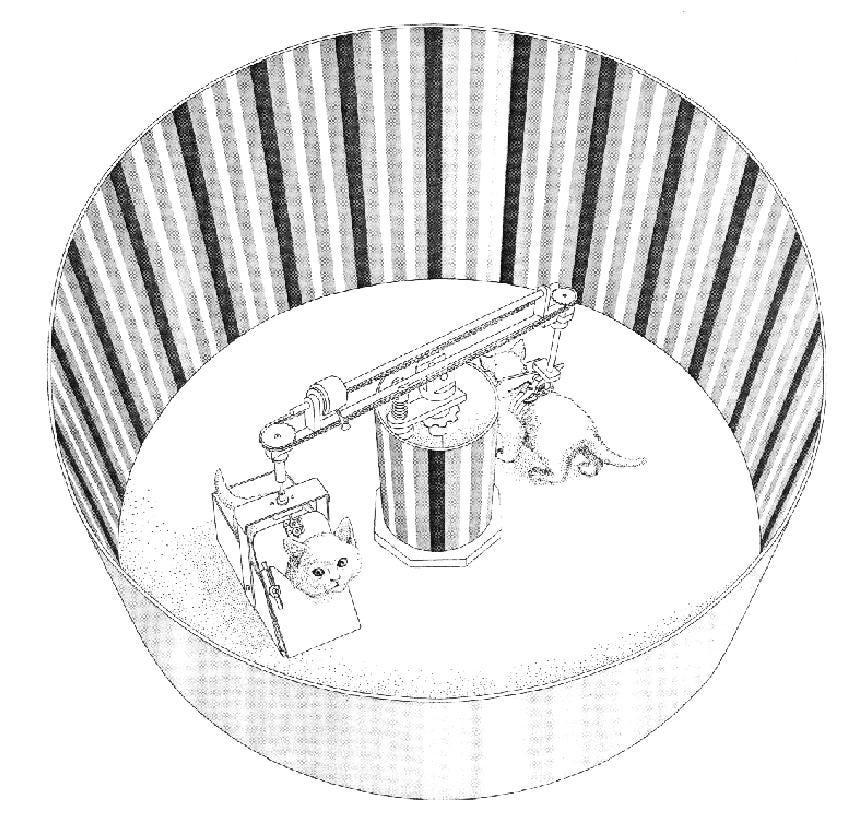

This distinction matters more than we might think. In another famous experiment, psychologists Richard Held and Alan Hein placed pairs of kittens in a carousel apparatus. One kitten could walk freely, powering the carousel for a second kitten who experienced identical visual input but remained passive. The active kitten developed normal depth perception and spatial awareness. The passive kitten, despite seeing exactly the same visual information, failed to develop proper spatial understanding.

The passive kitten had all the visual data but none of the interaction. It could see the world but had never learned to be in it.

Current language models are like that passive kitten—or perhaps more accurately, like the stochastic parrot itself. They've absorbed vast amounts of information about physical reality but have never taken a single step, never felt resistance when pushing against a door, never learned that some surfaces support weight while others don't.

The parrot knows every word for flight but has never felt the wind beneath its wings.

You can watch a million YouTube videos about cooking, but that doesn't mean you know how to navigate a kitchen. The difference between reading about riding a bicycle and actually balancing on two wheels is the difference between knowing about the world and being in the world.

This is the frontier that robotics is now exploring—what happens when the stochastic parrot finally tries to leave its cage and interact with the physical world. The models learned to understand the world by reading about it. The real test is whether they can learn to be in it.