Size, Autonomy, and Decentralization: Charting the Evolution of LLMs

The article reviews how 2023 AI advancements defied expectations on model scale, centralization, and agent autonomy.

"Prediction is very difficult, especially if it's about the future."

– Niels Bohr.

Niels Bohr's words resonate deeply as we navigate the fast-changing world of Large Language Models (LLMs). In the span of the last year, the landscape has undergone transformations that have upended our beliefs of what was likely. In this article we'll explore some of the truths we held to be self-evident that have since been found to be irrelevant.

Size: The Shift from Bigger to Smarter

A year ago, model size dominated AI discussions. Speculation was rampant, with many expecting GPT-4 to redefine the scale of model parameters from billions to trillions. This anticipation mirrored early beliefs in computing, where the performance of a CPU was gauged by its transistor count. Similarly, it was assumed that the size of an LLM would be the best predictor of its intelligence and capability.

The anticipation surrounding GPT-4 was emblematic of this size-centric philosophy. Predictions painted a picture of a colossus model that would require a staggering 700 terabytes of RAM. Yet, the reality of GPT-4's unveiling—a Mixture of Experts comprising eight models, each with 220 billion parameters—challenged these preconceptions. It turned out that brawn wasn't the sole benchmark of brilliance.

Companies like Mistral have shown us a way forward that isn't bigger. Their development of a 7 billion parameter model, which can run on an iPhone and rivals the performance of Llama's 13B and 34B models, was a revelation. By December 2023, Mistral further disrupted the status quo with its 8x7B ensemble, a Mixture of Experts configuration of eight 7 billion parameter models. This approach outperformed the LlaMA 70B and GPT-3.5 (175B) models in benchmarks.

Foundational Models: From Centralization to Democratization

In the early days of Large Language Models (LLMs), the sheer size and complexity of developing such technologies meant that only a handful of well-funded companies could venture into this space. OpenAI's transition from a non-profit to a more commercially structured entity underscored the significant financial investments required.

However, the narrative took an unexpected turn with the resilience of the open-source movement. Far from being left behind, this community not only kept pace but, in many instances, pushed the boundaries of what was possible with LLMs. This shift signaled a move away from the centralization of AI technology, opening the doors to a more democratized landscape.

The movement gained further momentum as major players like Meta, Cohere, Salesforce, Adept, and Mistral threw their hats into the ring. In February 2023, Meta announced the LLaMA model, a 65-billion-parameter LLM. April 2023 marked the birth of Mistral, born from the collective expertise of AI researchers from Meta and Google's DeepMind. Mistral's has rapidly ascended the leaderboards in model performance and company valuation - raising $400M at a valuation of $2B in less than a year of existence.

Agents: Stuck Like a Roomba in a Corner

In March 2023, Toran Richards's unveiling of AutoGPT marked a milestone in the LLM journey, introducing an agent framework based on GPT-4. This innovation promised a new era of autonomy for LLMs, sparking both excitement and concern. As the year progressed, the drive to enhance and build upon this framework gained momentum. Notably, Microsoft stepped into the fray with AutoGen, a framework that enables multiple agents to collaborate on complex tasks.

However, the reality of these advancements has largely been confined to the realm of "demoware" – captivating in demonstrations yet falling short of practical, production-ready application. In many instances, these agents resemble a Roomba stuck in a corner, crunching through tokens.

Despite these challenges, the journey towards achieving functional and autonomous agents continues. OpenAI has been methodically addressing the infrastructure needs of these agents by developing a secure sandbox for code execution, enhancing function calling capabilities, and introducing a rudimentary form of memory. Adept has been refining a model specializing in understanding and executing knowledge tasks.

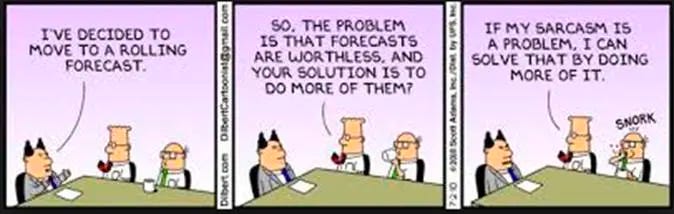

As we navigate the unpredictable waters of GenAI, the words of Niels Bohr remind us of the inherent uncertainty in making predictions about the future. Yet, this does not diminish the value of such forecasts. Rather, predictions serve as a compass in the vast ocean of our knowledge, guiding us through the complexities of technological advancement. They are not just speculative exercises but tools that allow us to frame our understanding, prepare for potential futures, and shape the direction of innovation.