Rethinking Certainty: Updating AI Mental Models Before the Cement Dries

This article examines how rapid advances in AI are overturning assumptions and mental models almost as soon as we form them.

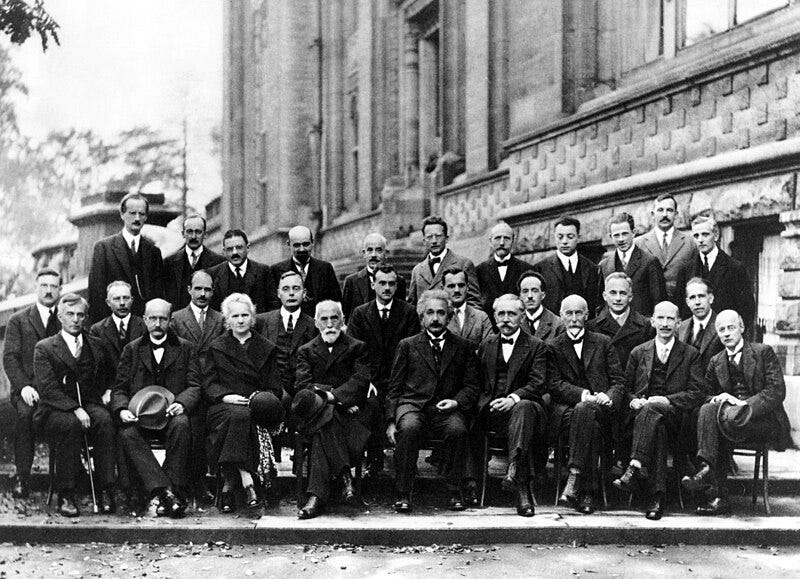

In October 1927, 29 of the greatest minds in physics gathered at the Hotel Metropole in Brussels for the fifth Solvay Conference, hoping to reconcile the divisions that had arisen in their field. Yet beneath the collegiality simmered a clash between two warring worldviews: Einstein's skepticism about quantum theory's probabilistic nature, encapsulated in his famous quote "God does not play dice with the universe," versus Bohr and Heisenberg's full embrace of unpredictability in the Copenhagen interpretation.

Nearly a century later, AI finds itself at a similar crossroads, with new breakthroughs dismantling assumptions almost as soon as the cement has dried. From prompting becoming the new programming language (Software 3.0) to context windows blowing past their old limits, we seem to be entering another age of radical change in how we understand AI.

Mental Model 1: We Have Limited Bandwidth to "Talk" to the Language Model

The context window of a large language model (LLM) is the maximum amount of text the model can consider at one time when generating a response. LLMs break text into tokens: short chunks of characters, often whole words or pieces of words. The ratio isn't exact, but in English a token averages about four characters, so a paragraph might contain around 100 tokens, and 1,500 words come to approximately 2,048 tokens.
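The rule of thumb above can be turned into a quick estimator. This is a minimal Python sketch, assuming roughly 4 characters per token and about 0.75 words per token for English; both ratios are approximations, and the function names are hypothetical. Exact counts depend on each model's own tokenizer (for OpenAI models, the tiktoken library), so treat these as ballpark figures.

```python
import math


def estimate_tokens_from_chars(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate for English text (assumes ~4 characters per token).

    Rounds up so the estimate errs on the high side when budgeting a prompt.
    """
    return math.ceil(len(text) / chars_per_token)


def estimate_tokens_from_words(word_count: int, words_per_token: float = 0.75) -> int:
    """Rough token estimate from a word count (assumes ~0.75 words per token)."""
    return math.ceil(word_count / words_per_token)


sample = "The context window is the maximum amount of text a model can consider."
print(estimate_tokens_from_chars(sample))
print(estimate_tokens_from_words(1_500))  # → 2000, close to the ~2,048 cited above
```

The two ratios disagree slightly with the 2,048 figure because they are averages; a real tokenizer will land somewhere nearby depending on vocabulary and punctuation.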

In the early days of LLMs like GPT-1, the context window was tiny: just 512 tokens. This severely limited how much information you could provide to the model. In practical terms, GPT-1 could hold only a few tweets' worth of context, so careful prompt engineering was essential to squeeze instructions and examples into that tight space.

But context windows have expanded rapidly. In 2020, GPT-3 quadrupled GPT-1's window to 2,048 tokens (double GPT-2's 1,024), enough to provide a short blog post as context. Anthropic's Claude launched with 9,000 tokens in March 2023, then jumped to an astounding 100,000 tokens in May 2023. For perspective, the entire text of The Great Gatsby is around 72,000 tokens, so you could suddenly feed Claude a short novel's worth of text to work from. In November 2023, OpenAI surpassed that with a 128,000-token context window for GPT-4 Turbo.
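To make the jump concrete, here is a small sketch that checks whether a roughly 72,000-token novel fits in each window cited above. It assumes, as a simplification, that the model's reply shares the same window as the prompt, so a hypothetical reserve of 1,000 tokens is set aside for the answer. The dictionary and the `fits_in_context` helper are illustrative, not any vendor's API.

```python
# Context window sizes (in tokens) as cited in this article.
CONTEXT_WINDOWS = {
    "GPT-1": 512,
    "GPT-3 (2020)": 2_048,
    "Claude (Mar 2023)": 9_000,
    "Claude (May 2023)": 100_000,
    "GPT-4 Turbo (Nov 2023)": 128_000,
}

GATSBY_TOKENS = 72_000  # approximate token count of The Great Gatsby


def fits_in_context(prompt_tokens: int, window: int, reserved_for_reply: int = 0) -> bool:
    """Return True if the prompt plus the tokens reserved for the reply fit in the window."""
    return prompt_tokens + reserved_for_reply <= window


for model, window in CONTEXT_WINDOWS.items():
    fits = fits_in_context(GATSBY_TOKENS, window, reserved_for_reply=1_000)
    verdict = "fits" if fits else "does not fit"
    print(f"{model:<24} {window:>8,} tokens: Gatsby {verdict}")
```

Only the two largest windows pass the check; everything before May 2023 would have forced you to chunk or summarize the novel first.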

In February 2024, Google announced that its new Gemini 1.5 model supports an incredible 1 million-token context window, and reported testing up to 10 million tokens in research (25% of the Encyclopedia Britannica). A million tokens is roughly 488 times GPT-3's window, and 10 million would be nearly 4,900 times! The rapidly expanding context window has opened up new possibilities for how we interact with LLMs. While early models required carefully sculpted prompts, new models can ingest entire documents, research papers, and more as their starting context.
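The growth multipliers are easy to check. This sketch simply divides each newer window by GPT-3's 2,048 tokens; the helper name is hypothetical.

```python
GPT3_WINDOW = 2_048  # tokens


def growth_factor(new_window: int, old_window: int = GPT3_WINDOW) -> float:
    """How many times larger new_window is than old_window."""
    return new_window / old_window


for label, window in [
    ("Gemini 1.5 (1M tokens)", 1_000_000),
    ("10M tokens (research tests)", 10_000_000),
]:
    print(f"{label}: {growth_factor(window):,.0f}x GPT-3's window")

# → Gemini 1.5 (1M tokens): 488x GPT-3's window
# → 10M tokens (research tests): 4,883x GPT-3's window
```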

Mental Model 2: Prompt Engineering is a Dark Art

In the early days of LLMs, getting value from them required a delicate, artful touch. As AI researcher Andrej Karpathy put it, "Prompt engineering is like being an LLM psychologist."

Why was prompt engineering so critical? One reason is that models were trained on messy, inaccurately labeled data. Text-to-image models like DALL-E, for example, were trained on image datasets with poor captions. Just consider the caption I gave to the image in the section above: I wrote it to match what I was talking about, not what's actually in the picture.

This meant early text-to-image models struggled with "prompt following." You could tweak your prompt again and again and still feel lost, because the model didn't interpret prompts the way you'd expect. I've experienced that frustration firsthand trying to generate images with Midjourney.

Dedicated "prompt whisperers" learned to craft prompts that aligned with how the models interpreted language based on their training. But average users aren't used to speaking the way the models expect.

OpenAI addressed this for DALL-E 3 by re-captioning its training dataset (using an AI model, of course!) with higher-quality, more descriptive captions. But now DALL-E spoke a language that sounded excessively detailed and flowery to humans. So, to make DALL-E 3 more accessible, OpenAI uses ChatGPT as the interface: ChatGPT translates natural human prompts into DALL-E's preferred language.
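The translation-layer idea can be sketched as a two-stage pipeline: a rewriting step turns a casual prompt into a detailed description, and only that description reaches the image model. In this minimal Python sketch both stages are hypothetical stubs (a fixed template stands in for ChatGPT, and a string stands in for the generated image); it shows the shape of the pattern, not how OpenAI actually implements it.

```python
from typing import Callable


def expand_prompt_stub(user_prompt: str) -> str:
    """Hypothetical stand-in for the LLM rewriting step (ChatGPT, in DALL-E 3's case).

    A real system would ask a language model to rewrite the prompt; here a fixed
    descriptive template keeps the pipeline runnable.
    """
    subject = user_prompt.strip().rstrip(".")
    return (
        f"A detailed, high-resolution image of {subject}, "
        "with the main subject centered, natural lighting, and a clearly described background."
    )


def generate_image_stub(detailed_prompt: str) -> str:
    """Hypothetical stand-in for an image model trained on descriptive captions."""
    return f"<image generated from: {detailed_prompt!r}>"


def translate_then_generate(
    user_prompt: str,
    rewrite: Callable[[str], str] = expand_prompt_stub,
    generate: Callable[[str], str] = generate_image_stub,
) -> str:
    """Rewrite the user's casual prompt, then hand the detailed version to the image model."""
    detailed = rewrite(user_prompt)
    return generate(detailed)


print(translate_then_generate("a cat reading a newspaper"))
```

Because the rewriter is passed in as a function, a real LLM call could replace the stub without changing the caller. That is the point of an abstraction layer: users keep writing plain English while the layer handles the model's preferred phrasing.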

Each iteration moves us further from needing to understand model internals and training quirks. Prompting becomes less of an arcane art and more like a natural conversation. As the ever-insightful Karpathy observed, "The hottest new programming language is English." Abstraction and translation layers empower average users and unlock the potential of LLMs, ending the reign of the prompt wizards.

You can read more in my article from last year:

Ever-Evolving Mental Models for an Ever-Evolving Field

The 1927 Solvay Conference brought together the greatest minds in physics, yet it is remembered more for its iconic photo capturing the luminaries in attendance than for resolving the clashing perspectives on quantum theory. Nearly a century later, concepts like wave-particle duality continue to elude simple categorization.

Similarly, our mental models for AI will keep evolving as new breakthroughs emerge. We may never have a context window large enough for every use case, or eliminate the need for artful prompting. Much like quantum mechanics, AI has innate complexities that resist tidy solutions.

Our fortune cookie has come true: we live in interesting times. The pace of AI progress challenges our assumptions almost faster than we can form them.

In other news:

I’m presenting at the Austin Business Owners Council on Feb 26th and the Austin Silicon Hills Luncheon on April 24th. If you’re attending either event, it would be great to connect.