Mastering the Maze: A Map of Open-Source LLM Frameworks

From LangChain's rise and criticism to Llama Index's competitive stride and AutoGPT's introduction, this article maps the open-source middleware that has grown up around LLMs.

In the rapidly advancing world of artificial intelligence, large language models (LLMs) stand as towering titans, transforming countless sectors with their abilities. But for all their prowess, LLMs aren't standalone tools; they are the beating heart of a larger computational body, in which various frameworks act as the vital organs that keep these models working together. Frameworks such as LangChain, Llama Index, and AutoGPT, among many others, have made LLMs considerably easier and more efficient to build with. In this article, we explore these orchestrators: their evolution, capabilities, triumphs, and the challenges they face.

The Missing Middleware: From FOMO to RAG

On the surface, it might seem like LLMs are autonomous entities that simply accept inputs and generate outputs. But the reality is more complex. Just as a maestro needs an orchestra to create a symphony, an LLM often requires a layer of middleware—what Astasia Myers of Quiet VC dubbed FOMO (Foundational Model Orchestration) systems—to reach its full potential. These frameworks serve as invaluable tools, allowing developers to chain calls from LLMs to other tools, APIs, or even other LLM calls, effectively bridging the gap between standalone models and composite AI solutions.

One reason FOMO solutions have emerged is because the foundational model API exists within the context of a pipeline that includes data computation and knowledge systems. The majority of the time, finding value from the model isn’t just querying the API to receive a result. Instead, it’s a multi-step process. Currently, hosted APIs don’t offer pre- and post-processing as part of the platform experience.

Astasia Myers, Quiet VC
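The multi-step process Myers describes can be sketched in plain Python without any framework. This is an illustration only: `fake_llm` is a hypothetical stand-in for a hosted model API, and the step names are our own. Each step is a function from string to string, and `chain` feeds one step's output into the next, which is roughly what orchestration frameworks package up for developers.

```python
from typing import Callable

Step = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a hosted LLM API: returns a labeled, uppercased reply."""
    return f"ANSWER: {prompt.upper()}"


def preprocess(text: str) -> str:
    # Pre-processing: collapse runs of whitespace before building the prompt.
    return " ".join(text.split())


def build_prompt(text: str) -> str:
    # Wrap the cleaned input in a task instruction.
    return f"Summarize: {text}"


def postprocess(output: str) -> str:
    # Post-processing: strip the label the model adds to its reply.
    return output.removeprefix("ANSWER: ").strip()


def chain(*steps: Step) -> Step:
    """Compose steps left to right, feeding each output into the next step."""
    def run(value: str) -> str:
        for step in steps:
            value = step(value)
        return value
    return run


pipeline = chain(preprocess, build_prompt, fake_llm, postprocess)
print(pipeline("  hello   world "))  # SUMMARIZE: HELLO WORLD
```

Replacing `fake_llm` with a second model call, or inserting a tool call between steps, gives the LLM-to-LLM and LLM-to-tool chains mentioned above without changing `chain` itself.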

In the early days of LLM apps, builders faced a basic obstacle: the limited context window, which kept models from working with large documents. Developers worked around it with systems such as vector databases (DBs), which retrieve the most relevant sections of text before the LLM is invoked. This gave rise to the Retrieval Augmented Generation (RAG) pattern, one of the earliest popular use cases for these frameworks.
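A minimal sketch of the RAG idea, assuming a toy bag-of-words "embedding" and an in-memory list in place of a real embedding model and vector DB. The function names are hypothetical; the point is the shape of the pattern: score every chunk against the query, keep only the top matches, and put just those into the prompt so it fits the context window.

```python
import math
import re
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy 'embedding': bag-of-words term counts (real systems use a neural model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: List[str], k: int = 2) -> List[str]:
    """Return the k chunks most similar to the query (the 'vector DB' lookup)."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_rag_prompt(query: str, chunks: List[str], k: int = 2) -> str:
    """Put only the retrieved chunks into the prompt, not the whole corpus."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "The warranty covers parts and labor for two years.",
    "Our office is closed on public holidays.",
    "Returns are accepted within 30 days of purchase.",
]
print(build_rag_prompt("How long is the warranty?", docs, k=1))
```

The resulting prompt is what would be sent to the LLM; swapping `embed` for a real embedding model and the list for a vector DB changes the quality of retrieval, not the structure of the pattern.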

LangChain: The Rise and Criticism

Among the projects that appeared in the Twitterverse (X-verse?) after ChatGPT's release was LangChain, created by Harrison Chase. LangChain is more than a Python library: it offers a user-friendly way to interact with LLMs by abstracting the call process and providing interfaces to vector DBs. As the project expanded, it added numerous abstractions, including AI agents.
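The value of "abstracting the call process" is easiest to see in a small sketch. This is not LangChain's API: `ChatModel`, `EchoModel`, `Template`, and `ask` are hypothetical names chosen for illustration. The general idea is that application code talks to one small interface, so the backend model can be swapped without touching prompts or call sites.

```python
from typing import Protocol


class ChatModel(Protocol):
    """Hypothetical provider-agnostic interface: app code depends only on `complete`."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stand-in backend; a real one would wrap a vendor SDK call behind `complete`."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class Template:
    """Fill named slots in a prompt string before it is sent to any model."""

    def __init__(self, text: str) -> None:
        self.text = text

    def render(self, **values: str) -> str:
        return self.text.format(**values)


def ask(model: ChatModel, template: Template, **values: str) -> str:
    # The caller never touches vendor-specific request formats.
    return model.complete(template.render(**values))


qa = Template("Q: {question}\nA:")
print(ask(EchoModel(), qa, question="What is RAG?"))
```

This convenience is also where the criticism below comes from: every extra layer of abstraction is one more thing to learn before making a single API call.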

LangChain quickly gained popularity among users. Its success led to impressive funding rounds, including a $10 million seed round and a subsequent $20-$25 million Series A round at a $200 million valuation. As of now, LangChain is considered a go-to choice for developers working on LLM projects.

However, LangChain isn't without its critics:

In all, I wasted a month learning and testing LangChain, with the big takeaway that popular AI apps may not necessarily be worth the hype.

The problem with LangChain is that it makes simple things relatively complex, and with that unnecessary complexity creates a tribalism which hurts the up-and-coming AI ecosystem as a whole. If you’re a newbie who wants to just learn how to interface with ChatGPT, definitely don’t start with LangChain.

Max Woolf, The Problem with LangChain

Llama Index: Not just for data

As LangChain was securing its position as a favorite among LLM app builders, another project emerged. Jerry Liu started work on GPT Index, designed to simplify the use of personal data with LLMs. Now rebranded as Llama Index and backed by an $8.5M seed round from Greylock, it has made a strong entrance into the scene.

At its core, Llama Index is a data framework tailored for LLM applications. It acts as a bridge between external data and LLMs, letting users augment models with their private data. Its functionality goes beyond simple data integration: it also provides tools to ingest, structure, and access private or domain-specific data, making it a staple in the toolbox of many LLM developers.
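Ingesting and structuring private data usually starts with splitting documents into overlapping chunks tagged with their source, so that later retrieval can point back to where an answer came from. The sketch below is a generic illustration, not Llama Index's API: `Node`, `chunk_words`, and `ingest` are hypothetical names, and chunk sizes are counted in words purely for simplicity (real pipelines typically count tokens).

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    """Hypothetical chunk record: text plus metadata linking it back to its source."""
    text: str
    metadata: Dict[str, str] = field(default_factory=dict)


def chunk_words(text: str, size: int, overlap: int) -> List[str]:
    """Split text into windows of `size` words, each sharing `overlap` words with the previous one."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # this window already reached the end of the text
    return chunks


def ingest(docs: Dict[str, str], size: int = 50, overlap: int = 10) -> List[Node]:
    """Turn raw documents (name -> text) into source-tagged, numbered nodes."""
    nodes = []
    for name, text in docs.items():
        for i, chunk in enumerate(chunk_words(text, size, overlap)):
            nodes.append(Node(chunk, {"source": name, "chunk": str(i)}))
    return nodes


nodes = ingest({"notes.txt": "one two three four five six seven"}, size=3, overlap=1)
for node in nodes:
    print(node.metadata, node.text)
```

The overlap keeps a sentence that straddles a chunk boundary retrievable from either side, at the cost of storing a little duplicate text.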

Yet the creators of Llama Index recognized the limits of a purely data-centric approach: there are only so many data loaders one can build. So they began exploring agent frameworks. Following the broader trend, their Llama Lab project began work on llama_agi, an experiment in AGI-style autonomous agents, and auto_llama, a project inspired by AutoGPT. The team is now working to turn Llama Index into a comprehensive platform for LLM applications.

AutoGPT: The Attention-Grabber

On March 30, 2023, a novel application entered the AI scene. Toran Bruce Richards unveiled AutoGPT, an open-source Python application built on GPT-4, and the response was immediate. AutoGPT stood out for its autonomy: it performs tasks with minimal human intervention, even prompting itself to decide the next step. The hint of Artificial General Intelligence (AGI) stirred excitement. Notably, AutoGPT achieved this without LangChain or any similar library, building its own framework instead.

However, AutoGPT is not without its shortcomings. Its verbose processing burns through tokens quickly, with the cost of a simple task estimated at around $14. It also tends to get stuck in loops and go off the rails. Judging AGI by AutoGPT's performance, human intelligence appears safe from domination.
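The self-prompting loop, and the way it can spin in circles while running up a bill, can be sketched as follows. This is not AutoGPT's code: `run_agent` and the `think` callback (a hypothetical stand-in for a GPT-4 call that proposes the next action) are illustrative names, and the repeated-action check is a deliberately crude guard chosen for the example.

```python
from typing import Callable, List

# Hypothetical model callback: (goal, history so far) -> next action, or "DONE".
Think = Callable[[str, List[str]], str]


def run_agent(goal: str, think: Think, max_steps: int = 5) -> List[str]:
    """Minimal self-prompting loop with two guards.

    Stops when the model says DONE, when it proposes an action it already took
    (a crude loop check), or when the step budget runs out, since every step costs tokens.
    """
    history: List[str] = []
    for _ in range(max_steps):
        action = think(goal, history)
        if action == "DONE":
            history.append("DONE")
            break
        if action in history:
            history.append(f"STOPPED: repeated {action!r}")
            break
        history.append(action)
    else:
        # Only reached if the loop never hit `break`.
        history.append("STOPPED: step budget exhausted")
    return history


def stuck_model(goal: str, history: List[str]) -> str:
    # A model that keeps proposing the same step, like an agent going in circles.
    return "search recipes"


print(run_agent("find a Christmas recipe", stuck_model))
```

Without the step budget and the repeat check, `stuck_model` would keep the loop running indefinitely, paying for a model call on every iteration.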

Upon first glance, one might consider $14.4 a reasonable price to find a Christmas recipe. However, the real issue emerges when you realize you must pay another $14.4 to find a recipe for Thanksgiving by following the same chain of thought again. It becomes clear that generating recipes for Christmas or Thanksgiving should differ by only one "parameter": the festival. The initial $14.4 is spent on developing a method for creating a recipe. Once established, spending the same amount again to adjust the parameter seems illogical. This uncovers a fundamental problem with Auto-GPT: it fails to separate development and production.

Han Xiao, CEO of Jina.ai

Auto-GPT Unmasked: The Hype and Hard Truths of Its Production Pitfalls
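Han Xiao's distinction between development and production can be illustrated with a small sketch: pay once to work out a reusable method, then run it cheaply with a new parameter. This is our illustration of his argument, not how AutoGPT behaves (that gap is exactly his criticism). `develop_recipe_method`, `produce_recipe_plan`, and the `PLANNING_CALLS` counter are hypothetical names, and the "expensive" step here just returns a template string.

```python
from functools import lru_cache

PLANNING_CALLS = 0  # counts how many times the expensive development step actually runs


@lru_cache(maxsize=None)
def develop_recipe_method(task: str) -> str:
    """'Development': stand-in for a costly agent session that works out HOW to do a task.

    Cached, so the same task is only developed once per process.
    """
    global PLANNING_CALLS
    PLANNING_CALLS += 1
    return "Find a traditional {festival} main dish, list its ingredients, then write the steps."


def produce_recipe_plan(festival: str) -> str:
    """'Production': reuse the developed method, changing only the parameter."""
    return develop_recipe_method("holiday recipe").format(festival=festival)


print(produce_recipe_plan("Christmas"))
print(produce_recipe_plan("Thanksgiving"))
print(PLANNING_CALLS)  # 1: the expensive step ran once, not twice
```

In AutoGPT as described in the quote, both requests would pay for the full development step, roughly doubling the cost for a one-word change.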

In response to these issues, LoopGPT emerged as a modularized fork of AutoGPT, aiming to streamline the original framework.

Hugging Face: Transformers Agents

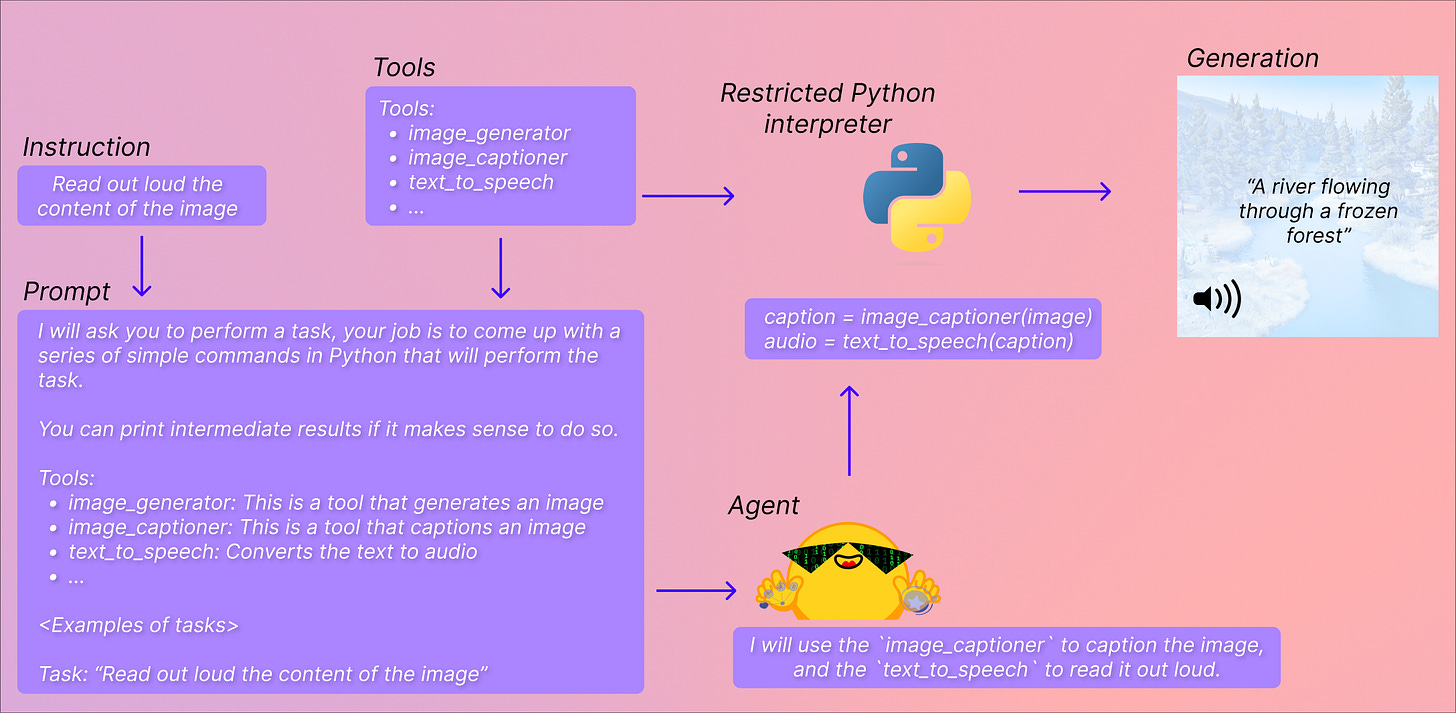

In May 2023, Hugging Face, often described as the GitHub of AI and home to a diverse collection of 100K models, unveiled its Transformers Agents framework. From the outset, Hugging Face agents came with 10 tools for common tasks such as document question answering and text summarization. They also let developers add custom tools and, notably, allowed large language models to generate code that runs in a restricted Python interpreter.
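To give a feel for what a "restricted Python interpreter" means, here is a minimal sketch that walks the syntax tree of model-generated code and executes only simple assignments and calls to whitelisted tools. It is not Hugging Face's implementation: `evaluate`, `shout`, and `translate` are hypothetical names, and a production sandbox would need far more scrutiny than this.

```python
import ast
from typing import Any, Callable, Dict


def evaluate(code: str, tools: Dict[str, Callable[..., Any]]) -> Dict[str, Any]:
    """Run model-generated code by walking its AST, allowing only a tiny subset of Python.

    Allowed: `name = expr` assignments, bare expression statements, constants,
    reads of previously assigned names, and calls to whitelisted tools.
    Everything else (imports, attribute access, loops, builtins) is rejected.
    """
    state: Dict[str, Any] = {}

    def eval_node(node: ast.AST) -> Any:
        if isinstance(node, ast.Constant):
            return node.value
        if isinstance(node, ast.Name):
            if node.id not in state:
                raise NameError(f"undefined variable {node.id!r}")
            return state[node.id]
        if isinstance(node, ast.Call):
            if not isinstance(node.func, ast.Name) or node.func.id not in tools:
                raise PermissionError("only whitelisted tools may be called")
            if any(kw.arg is None for kw in node.keywords):
                raise PermissionError("**kwargs unpacking is not allowed")
            args = [eval_node(arg) for arg in node.args]
            kwargs = {kw.arg: eval_node(kw.value) for kw in node.keywords}
            return tools[node.func.id](*args, **kwargs)
        raise PermissionError(f"disallowed expression: {type(node).__name__}")

    for stmt in ast.parse(code).body:
        if (
            isinstance(stmt, ast.Assign)
            and len(stmt.targets) == 1
            and isinstance(stmt.targets[0], ast.Name)
        ):
            state[stmt.targets[0].id] = eval_node(stmt.value)
        elif isinstance(stmt, ast.Expr):
            eval_node(stmt.value)
        else:
            raise PermissionError(f"disallowed statement: {type(stmt).__name__}")
    return state


tools = {
    "shout": lambda text: text.upper(),
    "translate": lambda text, lang="fr": f"[{lang}] {text}",
}
generated = 'greeting = shout("hello")\nresult = translate(greeting, lang="de")'
print(evaluate(generated, tools)["result"])  # [de] HELLO
```

The design choice is to interpret rather than `exec`: nothing runs unless a rule explicitly allows it, so imports, attribute access, and builtins such as `open` are refused by default.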

Hugging Face's move into agent frameworks is worth highlighting given its track record in open-source AI. Its implementation of Google's transformer architecture became a widely adopted framework for deploying and fine-tuning NLP models like BERT, GPT, RoBERTa, and more. By providing pre-trained models and user-friendly APIs, Hugging Face has made NLP far more accessible to developers, researchers, and AI enthusiasts alike.

In the rapidly evolving AI landscape, open-source frameworks like LangChain, Llama Index, AutoGPT, and Hugging Face's Transformers Agents are blazing trails. They share the stage with other projects such as Dust.tt, SuperAGI, and BabyAGI, each offering new approaches to LLM integration.

However, we're still at the dawn of this new era. As these tools and models evolve, developers are learning to work around their limitations and exploit new possibilities.

This is just a snapshot of the dynamic, open-source world of AI and LLMs. The ever-changing tapestry also includes a plethora of closed-source commercial frameworks, which deserve equal attention and exploration. We'll look into these in a future article.
