Mad Model Disease: When AI Eats Its Own Words

Exploring the phenomenon of "model autophagy," in which AI language models are increasingly trained on synthetic data that contains their own regurgitated outputs

In the late 1980s, a mysterious and devastating neurological disorder began to emerge in the cattle herds of Britain. Cows exhibited strange behavior, becoming aggressive and uncoordinated, before eventually succumbing to the fatal condition known as bovine spongiform encephalopathy (BSE), or "mad cow disease." As the crisis unfolded, scientists traced the cause to the practice of feeding cattle with rendered protein from other ruminant animals, including cattle themselves. This cannibalistic cycle, driven by an attempt to find an inexpensive protein source, had dire consequences, leading to the slaughter of millions of cattle.

However, the horror of mad cow disease extended beyond the ranch. In the mid-1990s, a new variant of the fatal brain disorder Creutzfeldt-Jakob disease, known as vCJD, began appearing in humans, and it was linked to the consumption of BSE-contaminated beef products. vCJD attacked the human brain, gradually destroying it and causing a range of debilitating symptoms, including personality changes, loss of coordination, and, ultimately, death. The jump of a livestock disease to humans sparked widespread panic and a firestorm of public outcry.

Just as mad cow disease was traced back to feeding cattle the remnants of other cattle, the world of artificial intelligence is grappling with a self-consuming phenomenon of its own. Demand for ever-larger language models keeps growing, driven by the pursuit of better performance through scaling laws. In our quest to build ever-larger training datasets, we are vacuuming up content from the web, which increasingly contains outputs generated by other AI models. This ouroboros-like cycle, in which models are trained on their own synthetic outputs, has been termed "model autophagy," a process akin to the cannibalistic feeding that led to the mad cow disease crisis. In this article, we will explore the potential implications of model autophagy, including researchers' concerns about amplified biases and artifacts and the dilution of diversity in the data that shapes the future of AI.

The Habsburg AI: When Models Eat Their Own Words

What makes language models so effective is the sheer volume of text they "read" during training. We can visualize this training process as the model poring over a vast collection of books, absorbing every word, sentence, and paragraph. Assuming a typical book contains around 100,000 tokens (words or punctuation marks) and a standard library shelf holds about 100 books, each shelf would contain approximately 10 million tokens.

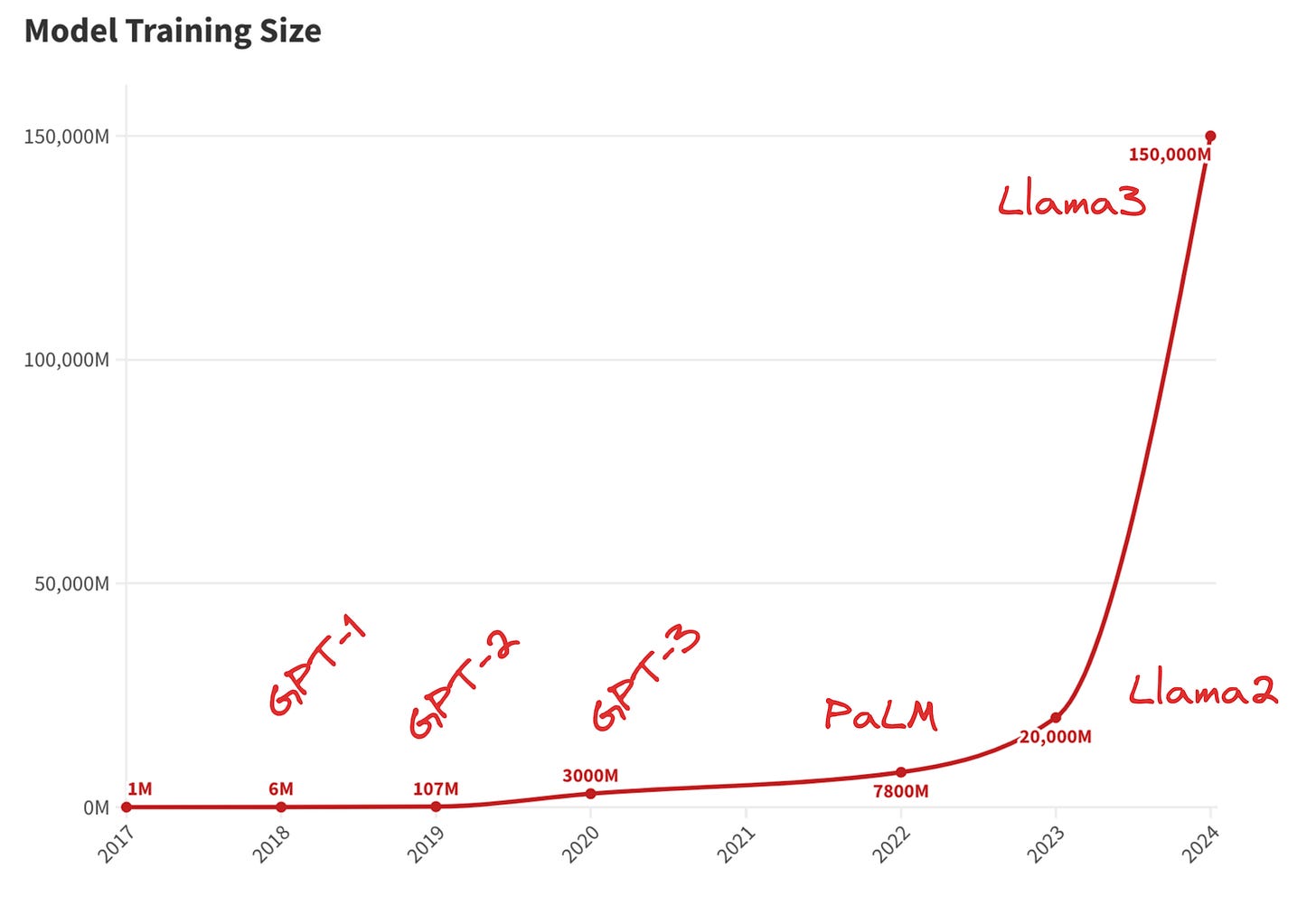

In the early days, the datasets used for training were relatively modest. The original Transformer model for English-to-German translation, for instance, used the WMT 2014 dataset with 4.5 million sentence pairs, equivalent to about 10 library shelves' worth of text. GPT-1, OpenAI's pioneering language model, was trained on the BookCorpus dataset comprising 7,000 books, or around 70 library shelves.

However, as the demand for larger and more capable models grew, so did the appetite for training data. By 2020, GPT-3 had consumed a staggering 300 billion tokens, the equivalent of 30,000 library shelves. Two years later, Google's PaLM model raised the bar even higher, devouring 780 billion tokens, or roughly 78,000 library shelves' worth of text.

And the data hunger shows no signs of abating. In 2024, Meta's Llama 3 model was trained on a whopping 15 trillion tokens, equivalent to a mind-boggling 1.5 million library shelves. To put that into perspective, that is 150 million books: if you were to read one book per day, it would take you over 400,000 years to consume the same amount of text.
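The library-shelf arithmetic is easy to reproduce. The sketch below uses only the figures quoted above (100,000 tokens per book, 100 books per shelf, and the stated training-set sizes); the function names are illustrative, and real tokenizers and books vary widely, so treat the results as back-of-the-envelope estimates.

```python
# Assumptions taken from the article: 100,000 tokens per book, 100 books per shelf.
TOKENS_PER_BOOK = 100_000
BOOKS_PER_SHELF = 100
TOKENS_PER_SHELF = TOKENS_PER_BOOK * BOOKS_PER_SHELF  # 10 million tokens

# Training-set sizes quoted in the text, in tokens.
TRAINING_TOKENS = {
    "GPT-3": 300_000_000_000,
    "PaLM": 780_000_000_000,
    "Llama 3": 15_000_000_000_000,
}


def shelves(tokens: int) -> float:
    """Number of library shelves needed to hold this many tokens."""
    return tokens / TOKENS_PER_SHELF


def years_to_read(tokens: int, books_per_day: float = 1.0) -> float:
    """Years needed to read the equivalent number of books at a fixed pace."""
    books = tokens / TOKENS_PER_BOOK
    return books / books_per_day / 365


for name, tokens in TRAINING_TOKENS.items():
    print(f"{name}: {shelves(tokens):,.0f} shelves, "
          f"{years_to_read(tokens):,.0f} years at one book per day")
```

Changing `TOKENS_PER_BOOK` or `books_per_day` shows how sensitive the comparison is to those two assumptions.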

As the demand for data has grown exponentially, we've turned to a new source: synthetic data generated by AI models themselves. Meta, for instance, has said it used Llama 2 to generate the training data for the text-quality classifiers that filtered Llama 3's corpus, so an earlier model's outputs helped shape what its successor read. Feeding models a diet of their own regurgitated outputs, the practice known as "model autophagy," has raised concerns among researchers about the potential consequences.

The term "Habsburg AI" has been coined to describe the potential outcome of this self-consuming cycle, drawing an analogy to the infamous Habsburg dynasty known for its propensity for inbreeding. Just as the Habsburgs became increasingly inbred over generations, leading to a range of genetic disorders and a narrowing of their gene pool, there is a fear that language models trained on an ever-narrowing pool of synthetic data could become increasingly generic, normalized, and devoid of diversity: a veritable "slop of anodyne business-speak."

In 2023, researchers at Rice University published a paper titled "Self-Consuming Generative Models Go MAD," in which they warned about the potential risks associated with model autophagy. They conducted a thorough analysis of various "autophagous loops" – scenarios where synthetic data generated by previous models is used to train new models, with or without the addition of fresh, real-world data.

Their primary conclusion across all scenarios was sobering: without enough fresh, real data in each generation of an autophagous loop, future generative models are doomed to have their quality (precision) or diversity (recall) progressively decrease. They termed this condition "Model Autophagy Disorder" (MAD), drawing an analogy to the devastating mad cow disease.
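A toy simulation can make the intuition concrete. The sketch below is not the paper's experimental setup: it assumes the "model" is just a Gaussian fitted to a small sample, and every name and parameter (`autophagous_loop`, `fresh_fraction`, the sample size) is hypothetical. Each generation fits a mean and standard deviation to its training set, and the next generation trains on samples drawn from that fit, optionally mixed with fresh draws from the real distribution. The fitted standard deviation serves as a crude stand-in for diversity.

```python
import random
import statistics


def autophagous_loop(generations, sample_size, fresh_fraction, seed=0):
    """Return the fitted standard deviation after each generation."""
    rng = random.Random(seed)
    real_mu, real_sigma = 0.0, 1.0  # the "real" data distribution (assumed)

    # Generation 0 is fitted on real data only.
    data = [rng.gauss(real_mu, real_sigma) for _ in range(sample_size)]
    mu, sigma = statistics.mean(data), statistics.stdev(data)
    history = [sigma]

    n_fresh = round(fresh_fraction * sample_size)
    for _ in range(generations):
        # Synthetic samples come from the previous generation's fitted model.
        synthetic = [rng.gauss(mu, sigma) for _ in range(sample_size - n_fresh)]
        # Fresh samples come from the real distribution.
        fresh = [rng.gauss(real_mu, real_sigma) for _ in range(n_fresh)]
        data = synthetic + fresh
        mu, sigma = statistics.mean(data), statistics.stdev(data)
        history.append(sigma)
    return history


fully_synthetic = autophagous_loop(1000, sample_size=20, fresh_fraction=0.0)
half_fresh = autophagous_loop(1000, sample_size=20, fresh_fraction=0.5)
print(f"fully synthetic loop, final std: {fully_synthetic[-1]:.3g}")
print(f"half fresh data,      final std: {half_fresh[-1]:.3g}")
```

In the fully synthetic loop, estimation noise compounds from one generation to the next and the fitted spread drifts toward zero, while the loop that keeps receiving real samples stays anchored near the real spread. Real generative models are far more complex, so this only illustrates the mechanism, not its magnitude.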

Will Model Autophagy Destroy the Web?

As we delve deeper into the realm of model autophagy, a fear echoes through the halls of the tech world: that web content is destined to become an echo chamber of talking-head GenAI programs regurgitating uninspired slop.

Take, for instance, LinkedIn's "collaborative articles" feature. Launched with the ambitious goal of tapping into the collective "10 billion years of professional knowledge" within the network's over 1 billion users, the feature seems to have been met with enthusiasm, boasting 10 million contributors and a 270% increase in readership. However, as Fortune magazine writer Sharon Goldman vividly recounts in her article "'Cesspool of AI crap' or smash hit? LinkedIn's AI-powered collaborative articles offer a sobering peek at the future of content," the actual content tells a different story.

Users have lambasted the feature as "an ever-deteriorating ouroboros of 'thought leadership' wank and AI word vomit." The promise of harnessing the collective wisdom of industry experts has been overshadowed by a deluge of synthetic, uninspired content, leaving readers questioning the future of online discourse.

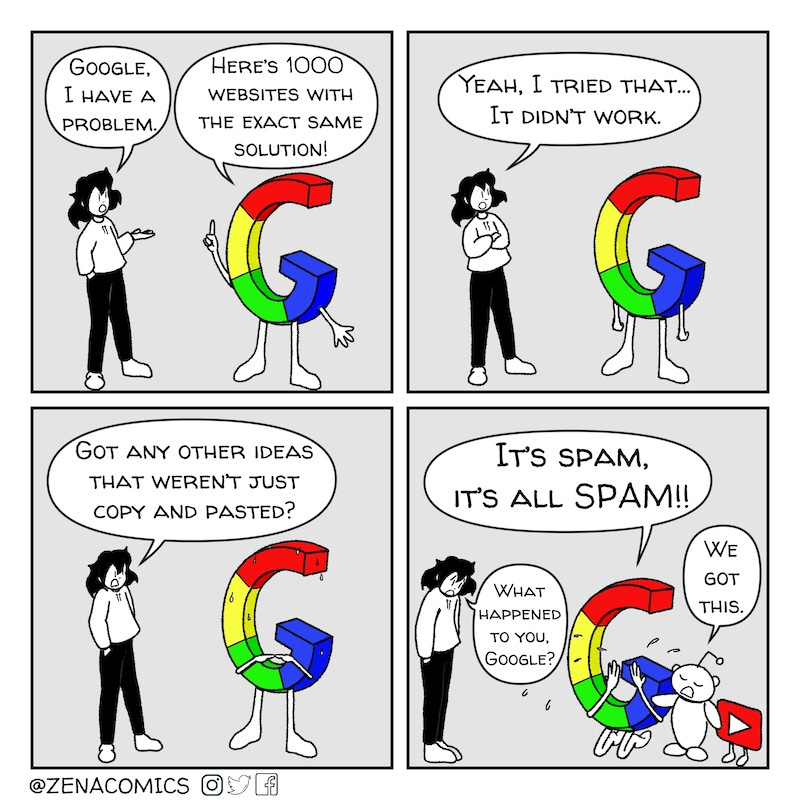

Tempting as it may be to blame large language models for this descent into mediocrity, the truth is that web content has already been destroyed by AI, and the culprit is not LLMs. It is the very algorithm that has shaped the internet as we know it: the Google search engine.

Death by a Thousand Keyword Stuffs: How SEO Devoured the Web

In the early days of the internet, discovering new websites was a delightful adventure. Back in 1994, I would entertain myself by browsing the latest additions to a Stanford University student project called Yahoo!, marveling at this newfangled digital realm's modest but steady growth.

But before long, the internet exploded. Websites and pages proliferated, and the once-quaint task of manually browsing for new content no longer made sense. Thus, the era of search engines was born, with Google emerging as the undisputed king.

Almost in lockstep with the rise of search, however, came the art of "search result persuasion" – a practice that would ultimately contort the web into a twisted, less authentic version of itself.

Over the years, a vicious dance ensued. Search engines deployed increasingly sophisticated algorithms to detect and penalize those attempting to game the system. At the same time, website builders, driven by a Darwinian desire for visibility, relentlessly sought new techniques to outsmart the algorithms.

Keyword stuffing was in vogue, then swiftly out. Backlinks counted, but only if they weren't 'shady.' This never-ending arms race between search engines and SEO experts has, in many ways, destroyed the very essence of web content.

One category where this battle has left a particularly painful mark is the humble recipe search. What should be a simple listing of ingredients and instructions has morphed into a mile-long saga, replete with the touching story of the recipe's roots in a grandmother's love for her grandchild, and other miscellaneous fluff.

The chefs-turned-SEO experts have no choice but to comply with the search engine AI's demands. It's difficult to overstate the power Google wields over recipe publishers. Most major food and recipe sites derive two-thirds or more of their visitors from the search engine, with the top three slots on desktop and four on mobile earning 75 percent of the clicks for any given search term, from "banana bread" to "black bean soup."

A tiny, no-frills webpage is unlikely to satisfy Google's metrics for page quality and dwell time. Thus, the once-succinct recipe has been inflated beyond recognition, a bloated monstrosity sacrificed at the altar of search engine optimization.

Finding Blooms in the Unmanicured Digital Commons

As we grapple with the potential consequences of model autophagy and the dilution of web content, it's tempting to lament the loss of the internet's utopian origins. But the digital commons was never a pristine, untainted space. Back in 1978, when the predecessor to the internet, ARPANET, had a mere 2,600 users, a marketing manager from Digital Equipment Corporation sent the first spam email to 15% of the entire user base.

The unruly nature of our digital realms is simply a reflection of the human society that created them. Just as we've struggled to find unity in addressing challenges like climate change, our response to threats like model autophagy has been fragmented. When mad cow disease spread among the UK's cattle herds, European governments swiftly instituted aggressive bans on feeding animal byproducts to ruminants. In contrast, the United States took a more piecemeal approach, prohibiting the practice for some animals like cattle but permitting it for others like pigs and poultry.

This messiness, this fertile chaos, is not a bug but a feature of human innovation. Amid the concerns over SEO-warped content and AI models consuming their own outputs, green shoots of solutions are already emerging. Platforms like Medium, with over 100 million monthly active users and nearly 1 million paid subscribers, are cultivating curated digital gardens. Discord has grown to nearly 200 million monthly users by enabling private community spaces. And the Web3 movement, while nascent, represents an effort to decentralize the internet itself.

In the realm of AI training data, initiatives like Together AI's RedPajama-V2 dataset are working to clean and curate the raw inputs that shape machine learning models. By filtering and processing Common Crawl's 100+ trillion token web corpus, they produced a 30 trillion token dataset, which has been used to train over 500 models. Tools like Lilac, recently acquired by Databricks, are empowering practitioners to build more robust, trustworthy training data pipelines.
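To give a flavor of what "filtering and processing" can mean, here is a minimal sketch of two common curation steps: exact deduplication on normalized text and a couple of crude quality heuristics. It is not the pipeline behind any dataset named above; the function names and thresholds (`min_words`, `min_unique_ratio`) are hypothetical and chosen only for illustration.

```python
import hashlib


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants hash alike."""
    return " ".join(text.lower().split())


def passes_quality(text: str, min_words: int = 5, min_unique_ratio: float = 0.5) -> bool:
    """Reject very short documents and documents dominated by repeated words."""
    words = normalize(text).split()
    if len(words) < min_words:
        return False
    return len(set(words)) / len(words) >= min_unique_ratio


def curate(docs):
    """Keep the first copy of each document that passes the quality checks."""
    seen = set()
    kept = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest in seen or not passes_quality(doc):
            continue
        seen.add(digest)
        kept.append(doc)
    return kept


sample = [
    "The quick brown fox jumps over the lazy dog.",
    "the quick  brown fox jumps over the lazy dog.",  # duplicate after normalizing
    "buy buy buy buy buy buy buy buy",                # repetitive spam
    "too short",
]
print(curate(sample))
```

Production pipelines typically add near-duplicate detection, language identification, and learned quality classifiers on top of simple rules like these.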

The challenges we face today represent the internet's necessary struggle that will ultimately give rise to new paradigms. Embrace the mess.