From Chat to Glasses: Platform Wars Evolve

OpenAI’s Apps SDK validates the CPU-or-OS question—but the real platform battle might be happening on your face

When you spend time thinking about a technology, you start noticing things. Not predictions exactly—more like watching ripples form on water and sensing the current underneath. A feature announcement here, a strategic pivot there. Individually, they’re just news. Together, they sketch a pattern.

This week, OpenAI announced their Apps SDK—a way for developers to build apps that live entirely inside ChatGPT. Apps like Zillow and Spotify that surface when relevant to your conversation, with rich interfaces that blend into the chat. It’s available to ChatGPT’s 800 million users starting now.

The announcement answers a question I’ve been circling for two years: Would large language models become CPUs—infrastructure that powers apps—or Operating Systems—platforms that host them? Back in 2023, the industry seemed settled on CPUs. But the platform opportunity felt too big to ignore. OpenAI’s Apps SDK suggests they chose Operating System. They were right to.

But here’s what makes this interesting. While we’ve been watching the chat interface evolve, three major tech companies just pivoted hard toward smart glasses. Meta shipped the Ray-Ban Display in September. Google showed Android XR running on glasses at I/O. Apple halted their Vision Pro redesign in October to fast-track lightweight glasses instead.

The platform question got answered this week. But it might already be evolving.

The CPU Consensus

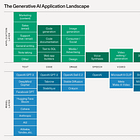

In early 2023, the narrative seemed settled. Sequoia released their Generative AI landscape, mapping the ecosystem using a familiar framework: application layer on top, infrastructure layer below. LLMs sat comfortably in the infrastructure section, positioned as “foundation models”—something developers would build on top of. The diagram looked like every other tech stack diagram you’d seen. Nothing suggested these models would host apps rather than power them.

The analogy made intuitive sense. Nathan Baschez wrote that “LLMs are the new CPUs,” performing intelligent probabilistic reasoning instead of deterministic operations on ones and zeros. SaaS companies rushed to integrate LLM capabilities into their products. We even saw graphs comparing model parameter growth to Moore’s Law.

The underlying assumption was: apps would use AI as a component. The app would still own the user experience, still control the interface, still mediate between users and functionality. AI would make apps smarter, but the app would remain the platform.

But I kept returning to a troubling question. If AI could really understand context and intent—if it could generate relevant responses from natural language—why did we need all these separate apps with their elaborate navigation schemes? I wrote about this in April 2023, looking at the app sprawl problem. The average enterprise deployed over 100 apps. Employees wasted nearly a month per year just switching between them, according to a RingCentral study. A month per year, lost to clicking through menus and toggling windows.

If AI was really that capable, why would we need this maze?

The OS Possibility

There was another way to think about it. Back in February 2023, I’d written about how AI might make user experience disappear entirely. Not improve it—eliminate it. The example I used was dictionaries. We used to navigate to a shelf, pull down a book, flip to the right section, scan for the word. Now we just highlight text and get the definition. The journey vanished. The tool became invisible.

What if that pattern extended to all software? What if interfaces could be generated on-demand—appearing when you need them, vanishing when you don’t? Not AI-powered apps, but apps that materialize in the moment to serve a specific need.

OpenAI seemed to sense this possibility. In March 2023, they launched ChatGPT plugins, followed in January 2024 by the GPT Store. Both were attempts to turn ChatGPT into a platform. The concept was there: ChatGPT as the interface, plugins and GPTs as functionality you could invoke conversationally. But it never quite clicked. The execution felt clunky. The plugins were hard to discover. The whole thing had the energy of a feature release, not a platform shift.

Still, two puzzles kept nagging at me. First: if AI can understand context and intent, why do users navigate through menus and buttons at all? Why click through settings when you could just say what you want? Second: why build static apps in the first place? Why pre-program every possible interface when AI might generate the exact interface you need right now?

I wrote about this tension in June 2023. The question was whether LLMs would be embedded in apps (CPUs) or whether they’d become the platform that hosted apps (Operating Systems). The platform opportunity seemed impossible to ignore—especially for OpenAI, sitting on ChatGPT’s explosive growth. Even Intel, a company that literally made CPUs, didn’t want to be invisible infrastructure. Their “Intel Inside” campaign spent $1.5 billion in 2001 alone to make sure consumers knew what powered their computers.

But the path from insight to execution wasn’t obvious. The GPT store proved that. You needed more than an idea. You needed the right implementation.

This Week’s Answer

The Apps SDK, launched October 6th, might be that implementation. It’s built on the Model Context Protocol (MCP), an open standard for connecting models to external tools and data, so apps plug into ChatGPT rather than the other way around. When you’re talking about buying a house, Zillow surfaces with an interactive map. Planning a party? Spotify appears with playlist creation tools. The interfaces blend into the conversation: rich where they need to be, invisible where they don’t.

The execution addresses what the plugin store missed. Apps appear contextually, suggested by ChatGPT at the right moment. No browsing an app store. No initiating chat with a separate GPT. ChatGPT mediates the entire experience, deciding when to surface which functionality. The 800 million users stay in one interface.
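
One way to picture this mediation is a toy sketch, not a description of how ChatGPT or the Apps SDK actually works. The host keeps a registry of apps, each declaring the topics it handles, and picks which one to surface for a given message. The names `App`, `REGISTRY`, and `surface_app`, the topic keywords, and the keyword-overlap scoring are all assumptions made for illustration; a real host would presumably let the model itself make this call.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class App:
    """A hypothetical app entry: a name plus the topics it claims to handle."""
    name: str
    topics: frozenset


# Hypothetical registry; the topic keywords are invented for this sketch.
REGISTRY = [
    App("Zillow", frozenset({"house", "home", "mortgage", "rent"})),
    App("Spotify", frozenset({"playlist", "music", "party", "song"})),
]


def surface_app(message, registry=REGISTRY):
    """Return the app whose topics overlap the message most, or None.

    The host, not the user, makes the choice: there is no app store to browse.
    """
    # Normalize the message into a set of lowercase words without punctuation.
    words = {w.strip(".,?!").lower() for w in message.split()}
    best, best_score = None, 0
    for app in registry:
        score = len(words & app.topics)
        if score > best_score:
            best, best_score = app, score
    return best
```

Calling `surface_app("Planning a party, need a playlist")` returns the Spotify entry, while a question that touches none of the registered topics returns `None` and no app appears.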

This validates the Operating System model. ChatGPT owns the relationship with users. It controls when apps appear, how they’re presented, which ones get prominence. Apps become functionality that ChatGPT orchestrates rather than destinations, standalone experiences users must navigate to. The digital wayfinding I wrote about in 2023 is starting to disappear, just like the trip to the dictionary shelf did.

But there’s still a gap between this implementation and the vision. These apps are statically coded. Developers pre-build interfaces through MCP, defining what users can do and how they can do it. The truly dynamic piece—AI generating interfaces on-the-fly based on the specific context of your conversation—isn’t here yet.

You can ask Zillow to show you homes in your budget, but the Zillow app still defines what “showing you homes” looks like. The interface adapts to your query, but it doesn’t fundamentally change based on whether you’re a first-time buyer, an investor, or someone relocating for work. The app surface is responsive, not generative.
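
The responsive-versus-generative distinction can be sketched in a few lines. This is an illustration under stated assumptions, not Zillow’s or OpenAI’s code: `responsive_view`, `generative_view`, the component names, and the `CONTEXT_COMPONENTS` table are all hypothetical. Note that the lookup table is itself static; it stands in for the step where a model would compose the interface.

```python
def responsive_view(budget):
    """Developer-defined layout: the query changes the data, not the shape."""
    return {
        "components": ["map", "listing_cards"],
        "filters": {"max_price": budget},
    }


# Hypothetical mapping from user context to extra components. In a truly
# generative system a model would compose these rather than look them up.
CONTEXT_COMPONENTS = {
    "first_time_buyer": ["mortgage_calculator", "buying_guide"],
    "investor": ["rental_yield_table", "price_history"],
    "relocating": ["commute_map", "school_ratings"],
}


def generative_view(budget, context):
    """Layout whose shape depends on who is asking, not just what they asked."""
    view = responsive_view(budget)
    # Unknown contexts fall back to the plain responsive layout.
    view["components"] = view["components"] + CONTEXT_COMPONENTS.get(context, [])
    return view
```

With the same budget, `generative_view(500000, "investor")` and `generative_view(500000, "relocating")` return different component lists, while `responsive_view(500000)` looks the same for everyone.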

Maybe that’s enough. Maybe responsive apps that appear contextually solve the problems I was circling. Or maybe we’re still halfway to something more fluid. Either way, the platform question has an answer now: LLMs are becoming Operating Systems, and ChatGPT is the one pulling it off.

The Next Platform

Except while we’ve been focused on chat interfaces, hardware just shifted underneath us.

In September, Meta shipped the Ray-Ban Display, smart glasses that look like regular Ray-Bans but include a small display in the right lens. Meta AI can now show you answers rather than just speak them. Ask for directions, and a map appears in your field of vision. Want to see recipe steps while cooking? They’re right there, hands-free. The glasses pair with an EMG wristband that reads muscle signals at your wrist, letting you swipe through information with a thumb gesture. The interface becomes spatial, embedded in what you’re already looking at.

Google is making its own push with Android XR, first announced in December 2024 and demoed on glasses at I/O 2025. It’s a platform for both headsets and glasses, with Gemini AI built in. They’re not just putting AI on glasses; they’re building the operating system for spatial computing. The same platform battle that played out on phones and PCs is setting up again, this time for a new interface.

Then came Apple’s move. In October, Bloomberg reported that Apple halted their Vision Pro redesign to fast-track lightweight smart glasses instead. The $3,500 headset that was supposed to define spatial computing? Deprioritized. Apple’s shifting resources to glasses that compete directly with Meta’s—multiple frame options, fashion-forward design, everyday wearability. They’re treating it like they treated the iPhone: a platform play, not a peripheral.

Three major tech companies, all pivoting to glasses. Not clunky VR headsets you wear for gaming sessions. Lightweight glasses you could wear all day, everywhere. AI that sees what you see, surfaces information when you need it, interfaces that appear in your line of sight rather than on a screen.

The pattern suggests chat isn’t the final form of this platform. Maybe it’s transitional—a way station between traditional apps and something more ambient. Apps that don’t live in ChatGPT but in your environment, overlaid on the world you’re already looking at. The contextual surfacing that makes the Apps SDK compelling could be even more powerful when the context is literal—what you’re looking at right now, where you are, what you’re doing.

The platform question OpenAI answered this week might be reopening. Not whether LLMs are infrastructure or platforms—that’s settled. But which interface wins the platform battle: the one you chat with or the one you wear?

Seems plausible, but I wonder… when the information overlay on the environment shapes and constrains our embodied sensory experience of it, what forms of wisdom will be lost? What “enshittification” of our experience will we be opening up to? Disinformation, advertising, and propaganda are sure to work their way into this medium as well. And then where do we find clarity and compassion?