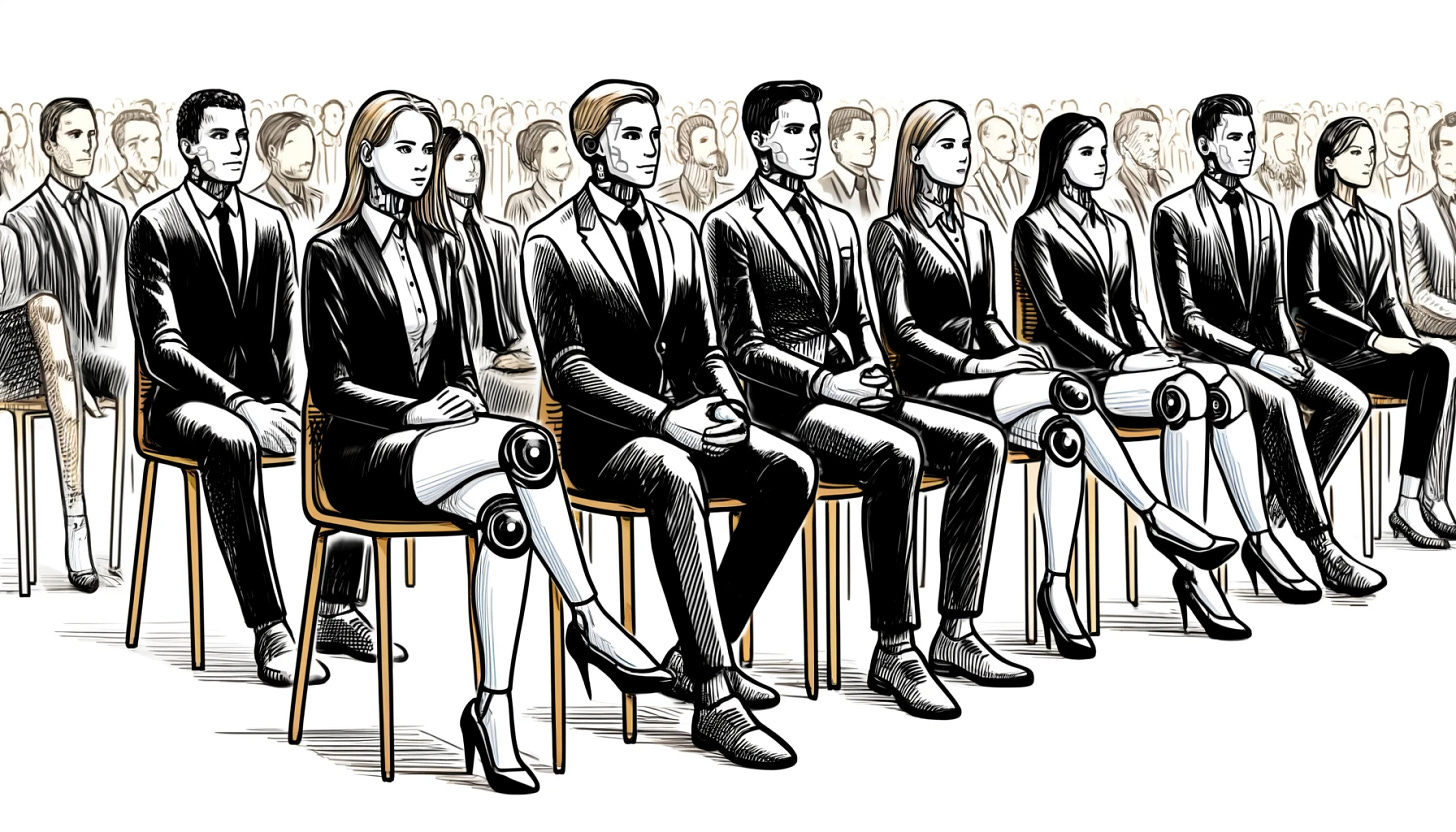

From Arthur's Androids to AI Associates: How Professional Services Will Evolve

Arthur Andersen's pioneering employee training model, which set the standard for the consulting industry, now serves as a blueprint for nurturing the AI-powered consultants of the future.

In the early 1900s, a young orphaned mailboy found himself captivated by the world of accounting while working at Allis-Chalmers in Chicago. Determined to pursue his newfound passion, he attended night school and earned a bachelor's degree from Northwestern University, at the business school now known as the Kellogg School of Management. His hard work and dedication paid off when, in 1908, he became the youngest CPA in Illinois. Just five years later, in 1913, he took a leap of faith and, with a partner, bought out an accounting firm that would eventually bear his name alone: Arthur Andersen & Co.

Arthur Andersen recognized early on that the key to scaling his company and providing consistent, high-quality service to clients lay in the education and training of his employees. In a groundbreaking move, he created the profession's first centralized training program, investing in his staff by training them during work hours. This commitment to employee development fostered a strong sense of loyalty and pride among his workforce, who affectionately referred to themselves as "Arthur's Androids."

As the company grew, so did its reputation for consistency. A 1992 New York Times article shed light on the extraordinary lengths to which Arthur Andersen & Co. and its consulting arm, Andersen Consulting (now Accenture), went in order to recruit and train top talent. Each year, the firm would welcome 4,000 fresh graduates to its sprawling 150-acre St. Charles campus, where they would undergo a rigorous indoctrination into the company's methodology. Upon completing their training, these newly minted consultants would join the firm's then 22,000-strong workforce, spread across 151 offices worldwide.

Andersen's approach to recruiting and training top graduates has since become the standard in the consulting industry. Today, large consulting firms actively target the best and brightest from prestigious universities, investing heavily in comprehensive training programs designed to mold these talented individuals into highly skilled and well-compensated consultants.

As we stand on the brink of integrating a new type of worker (AI) into our ranks, this article examines the role this recruitment and training model could play in expanding the use of AI in business. In the AI industry, foundation models are akin to recent college graduates: equipped with broad skills, but in need of further training to master the nuances of specific fields. We'll look at how these foundation models are being trained for use cases in specific verticals.

Building the Foundation

First, let's look back at how we got here. As we covered in a previous article, the key to the current era of transformer-driven Large Language Models (LLMs) is the realization that training models on more data makes them more powerful and generalizable. However, obtaining clean, labeled data at the scale required to train large language models is hard. To overcome this challenge, OpenAI turned to unsupervised (more precisely, self-supervised) learning, training its models on vast amounts of raw text and later refining them with human feedback.
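Raw text needs no human labeling because it supplies its own answers: every word serves as the target the model must predict from the words before it. The short Python sketch below illustrates this idea under simplifying assumptions (whitespace tokenization instead of the subword tokenizers real LLMs use, and a hypothetical helper named `next_token_pairs`); it is not OpenAI's actual pipeline.

```python
def next_token_pairs(text: str, context_size: int = 3) -> list[tuple[tuple[str, ...], str]]:
    """Turn raw text into (context, next_token) training examples.

    No human labeling is needed: the label for each example is simply
    the word that actually follows the context in the text.
    Whitespace tokenization is a simplifying assumption.
    """
    tokens = text.split()
    pairs = []
    for i in range(1, len(tokens)):
        # Use up to `context_size` preceding tokens as the model's input.
        context = tuple(tokens[max(0, i - context_size):i])
        pairs.append((context, tokens[i]))
    return pairs


if __name__ == "__main__":
    for context, target in next_token_pairs("the model learns from raw web text"):
        print(context, "->", target)
```

Every sentence on the web yields many such examples for free, which is why this approach scales to datasets far too large to label by hand.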

These models were primarily trained on web content. For example, GPT-3, one of the best-known LLMs, was trained mostly on a filtered version of the Common Crawl dataset, which contains petabytes of data scraped from the web, supplemented by curated sources such as books and Wikipedia. Given this training data, it's no surprise that GPT-3 excelled at generating web-like content right out of the box.

Early adopters of GPT-3, such as Jasper and Writer, capitalized on this strength and built successful businesses around marketing content generation. However, the model's reliance on internet-sourced data also led to some unintended consequences. Just like the internet itself, GPT-3 learned to speak with authority on topics where it had no real expertise, often resulting in plausible-sounding but inaccurate information.

Despite these limitations, the ability of these models to generalize and adapt to various tasks earned them the title of "Foundation Models." The idea behind this term is that these models serve as a solid foundation upon which companies can build a wide range of applications, tailoring them to specific use cases through additional training.

Fine-Tuning Language Models for Code Development

Model builders, being developers themselves, recognized the potential of using freshly minted language models like GPT-3 to make programming easier. OpenAI formed a partnership with GitHub to train its GPT-3 model on public repositories containing billions of lines of code in various programming languages. Prior to this, GPT-3 could understand natural language and generate responses based on its training, but its coding skills were limited.

In 2021, OpenAI announced the Codex model, a capable Python programmer that was also proficient in over a dozen languages, including JavaScript, Go, Perl, PHP, Ruby, Swift, TypeScript, and even Shell. Microsoft launched the Codex model in its GitHub Copilot product, where this newly trained model could directly assist programmers in one of the most popular code editors on the market.

This first role-specific model has been a resounding success. GitHub's research found that programmers using Copilot completed a test task 55% faster than those who didn't. Programmers seem to agree, as they've signed up in droves for Copilot. It is estimated that Microsoft made $100 million in 2023 from the $10/month Copilot fees.

Other companies are also trying their hand at fine-tuning their own software engineers. For example, Tabnine and Codium offer similar AI-powered code completion tools, while Google offers Codey, a family of code models that provides AI-assisted code completion and generation within its development ecosystem. Cognition Labs even went so far as to claim it had built a full-fledged AI software engineer named Devin.

Dr. AI Will See You Now

The success of fine-tuned language models in the realm of code development has inspired similar efforts in the healthcare industry.

In 2022, Google adapted its state-of-the-art foundation model, PaLM, to the medical domain and tested it on MedQA, a dataset of multiple-choice questions in the style of the United States Medical Licensing Examination (USMLE). The resulting model, dubbed Med-PaLM, scored an impressive 67.6% on these USMLE-style questions, clearing the roughly 60% commonly treated as a passing score. By May 2023, Google had released Med-PaLM 2, which achieved a remarkable 86.5%, a result Google described as expert-level performance.

Med-PaLM 2's performance extends beyond multiple-choice questions. Google conducted detailed human evaluations of long-form answers across multiple axes relevant to clinical applications. In a pairwise comparative ranking of answers to 1,066 consumer medical questions, physicians preferred Med-PaLM 2's answers to those written by physicians on eight of nine axes related to clinical utility.

Recognizing Med-PaLM 2's potential impact on the healthcare industry, Google has made the model available on its cloud platform for approved use cases. Consulting companies such as Accenture and Deloitte are among the first to incorporate these models into their healthcare practice areas.

Counselor AI Takes the Stand

In 2022, a new player emerged in the world of AI-powered professional services: Harvey.AI. Founded by a former Meta AI researcher and a lawyer, Harvey raised $5 million in its initial funding round, which included investment from OpenAI. While the company has started by focusing on the legal industry, its ambition is to build a comprehensive AI-powered professional services platform.

Harvey.AI's approach to AI-powered legal services is bespoke. The company starts with OpenAI's GPT model and fine-tunes it on legal industry data. This process is then taken a step further by fine-tuning the AI for individual law firms, allowing the model to learn and adapt to each firm's specific forms, templates, and practices.
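The layered approach can be illustrated with a deliberately simple stand-in. The Python sketch below swaps the neural network for a toy bigram model that just counts which word follows which, and trains it in three stages on tiny, made-up corpora: general text, legal text, and one hypothetical firm template. The `weight` parameter is an invented knob that up-weights the small firm dataset. None of this reflects Harvey's actual implementation; it only shows how each stage builds on what earlier stages learned.

```python
from __future__ import annotations

from collections import Counter, defaultdict

# Tiny made-up corpora; FIRM stands in for a hypothetical firm's own templates.
GENERAL = ["please draft an email", "draft an email today"]
LEGAL = ["draft the contract", "review the contract", "draft the motion", "draft the brief"]
FIRM = ["draft the engagement letter"]


class BigramModel:
    """Toy next-word predictor that counts which word follows which.

    A stand-in for a neural LLM, used only to illustrate staged training.
    """

    def __init__(self) -> None:
        self.counts: defaultdict[str, Counter[str]] = defaultdict(Counter)

    def train(self, corpus: list[str], weight: float = 1.0) -> None:
        # Each call adds weighted evidence on top of what earlier stages learned,
        # loosely analogous to continuing training from an existing model.
        for sentence in corpus:
            words = sentence.lower().split()
            for prev, nxt in zip(words, words[1:]):
                self.counts[prev][nxt] += weight

    def predict(self, word: str) -> str | None:
        # Return the most frequent follower seen so far, or None if the word is unseen.
        followers = self.counts.get(word.lower())
        if not followers:
            return None
        return followers.most_common(1)[0][0]


if __name__ == "__main__":
    model = BigramModel()
    model.train(GENERAL)  # stage 1: broad data ("K-12")
    print("after general:", model.predict("draft"))
    model.train(LEGAL)  # stage 2: domain data ("law school")
    print("after legal:", model.predict("draft"), model.predict("the"))
    model.train(FIRM, weight=3.0)  # stage 3: small firm corpus, up-weighted
    print("after firm:", model.predict("the"))
```

After the general stage the model completes "draft" with "an"; after the legal stage it prefers "draft the" and completes "the" with "contract"; after the firm stage it completes "the" with "engagement", the hypothetical firm's own phrasing.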

As Gabriel Pereyra, co-founder of Harvey.AI, explains:

"Think of OpenAI's training of the base model as K-12 schooling. Then Harvey puts the model through law school using general legal data. But even a law school graduate does not know how a particular firm works. Harvey's firm-specific training is like teaching an associate the firm's unique practice."

Sound familiar?

The Ever-Evolving Androids

However, it's important to recognize that the AI landscape is evolving at an unprecedented pace, and the term "foundation models" is optimistic. These models are not the firm footing their name implies.

Take, for example, the Codex model, which was pretty awesome when it was first released. Its learnings were quickly folded into the GPT-3.5 models behind ChatGPT, and Codex itself was retired. Similarly, Google's healthcare team invested considerable resources in tuning Med-PaLM atop the company's best LLM at the time. However, with the introduction of Google's new multimodal-capable model, Gemini, in December 2023, the PaLM story effectively came to an end. The healthcare team will now have to retrain its models atop Gemini.

This rapid evolution of foundation models presents a unique challenge for companies investing in domain-specific AI applications. While they spend time and resources fine-tuning models for their specific needs, the freshly minted "graduates" coming out of the model builders' labs are often more capable than the models they have spent time training.

A prime example of this is BloombergGPT, a finance-focused language model on which Bloomberg spent millions of dollars. Within months of its release, researchers reported that GPT-4, a general-purpose model, outperformed BloombergGPT on a range of financial tasks.

Arthur Andersen was right: to build a scalable business, you need consistency and standardization. Training base models to become accomplished experts in their fields is likely how AI will enter verticals. But for the moment, the descendants of Arthur Andersen's Androids are safe.