Failing Forward: How Your Approach to Mistakes Predicts AI Success

Why planning for failure is the key to AI implementation success

I talk with a lot of people about AI, and I've developed a heuristic that predicts who will work through the challenges and who will get frustrated and give up. It has nothing to do with technical skill or resources. It comes down to how they talk about failure.

This pattern emerged as I noticed something different about AI from previous technologies. For decades, we've built automation systems that follow deterministic rules—input X always produces output Y. But AI operates in probabilities, offering the most likely answer from countless possibilities including incorrect ones.

This shift from certainty to probability means errors will always be part of your product or service, and that demands a different approach. The teams I see struggling with AI implementation often bring the wrong mental model to the task: they treat AI as if it were traditional software that can be perfected before deployment.

This reveals two opposing philosophies: working to prevent failures versus working to withstand failures. The first approach sees failure as a flaw to eliminate before deployment. The second sees failure as inevitable and builds systems that expect, detect, and learn from it.

Engineers call this second approach "fault-tolerant design"—creating systems that continue functioning even when components fail. While traditional software development often focuses on preventing errors, fault-tolerant systems assume errors will occur and prepare accordingly.
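To make the idea concrete, here is a minimal Python sketch of fault-tolerant design applied to a single AI call. Everything in it is hypothetical and chosen for illustration: `call_with_fallback`, the `model` and `is_valid` callables, and the `Result` record are assumptions, not any particular library's API. The point is only the shape: expect bad answers, detect them, and degrade gracefully instead of crashing.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Result:
    value: str
    source: str    # "model" or "fallback"
    attempts: int  # how many model calls were made


def call_with_fallback(
    model: Callable[[str], str],      # hypothetical: any function that returns an answer
    is_valid: Callable[[str], bool],  # hypothetical: a check the answer must pass
    prompt: str,
    fallback: str,
    max_attempts: int = 3,
) -> Result:
    """Try the model a few times; return a safe fallback instead of failing."""
    for attempt in range(1, max_attempts + 1):
        try:
            answer = model(prompt)
        except Exception:
            continue  # treat a crash like any other bad answer and try again
        if is_valid(answer):
            return Result(answer, "model", attempt)
    # Every attempt failed or was rejected: withstand the failure.
    return Result(fallback, "fallback", max_attempts)
```

A caller might pass a validator that checks the answer parses as a number or matches an expected format, and a fallback such as "I'm not sure, let me connect you with a person." Recording `source` and `attempts` also gives you the detection signal: you can see how often the system had to fall back.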

Life and Death Lessons: Aviation vs. Medicine

The contrast between these two philosophies becomes clearest when we look at industries where failure costs lives. According to the World Health Organization, "There is a 1 in a million chance of a person being harmed while travelling by plane. In comparison, there is a 1 in 300 chance of a patient being harmed during health care." This stark difference in safety outcomes isn't accidental.

Aviation's remarkable safety record didn't happen by focusing solely on pilot skill or aircraft design. It came from building an entire industry culture around the assumption that failures will occur. According to NASA research, 70% of aviation accidents involve human error. Rather than treating this as a problem to completely eliminate through training, the industry built systems that assume human error is inevitable.

Every commercial aircraft has multiple redundant systems. Pilots actively practice engine failure scenarios. The "black box" records everything for analysis after incidents. Most importantly, the industry has created a "just culture" where reporting errors is encouraged without blame, allowing everyone to learn from mistakes before they become disasters.

Now contrast this with healthcare. Despite compelling research showing that simple checklists can dramatically reduce medical errors, adoption remains inconsistent. Many hospitals still rely primarily on physician skill rather than systemic safeguards. Complications often remain private, limiting industry-wide learning. The culture still often focuses on individual excellence rather than system resilience.

The difference isn't that pilots are better than surgeons or that planes are simpler than human bodies. It's that aviation has fully embraced working to withstand failures through fault-tolerant design, while medicine still largely operates by working to prevent failures through individual excellence.

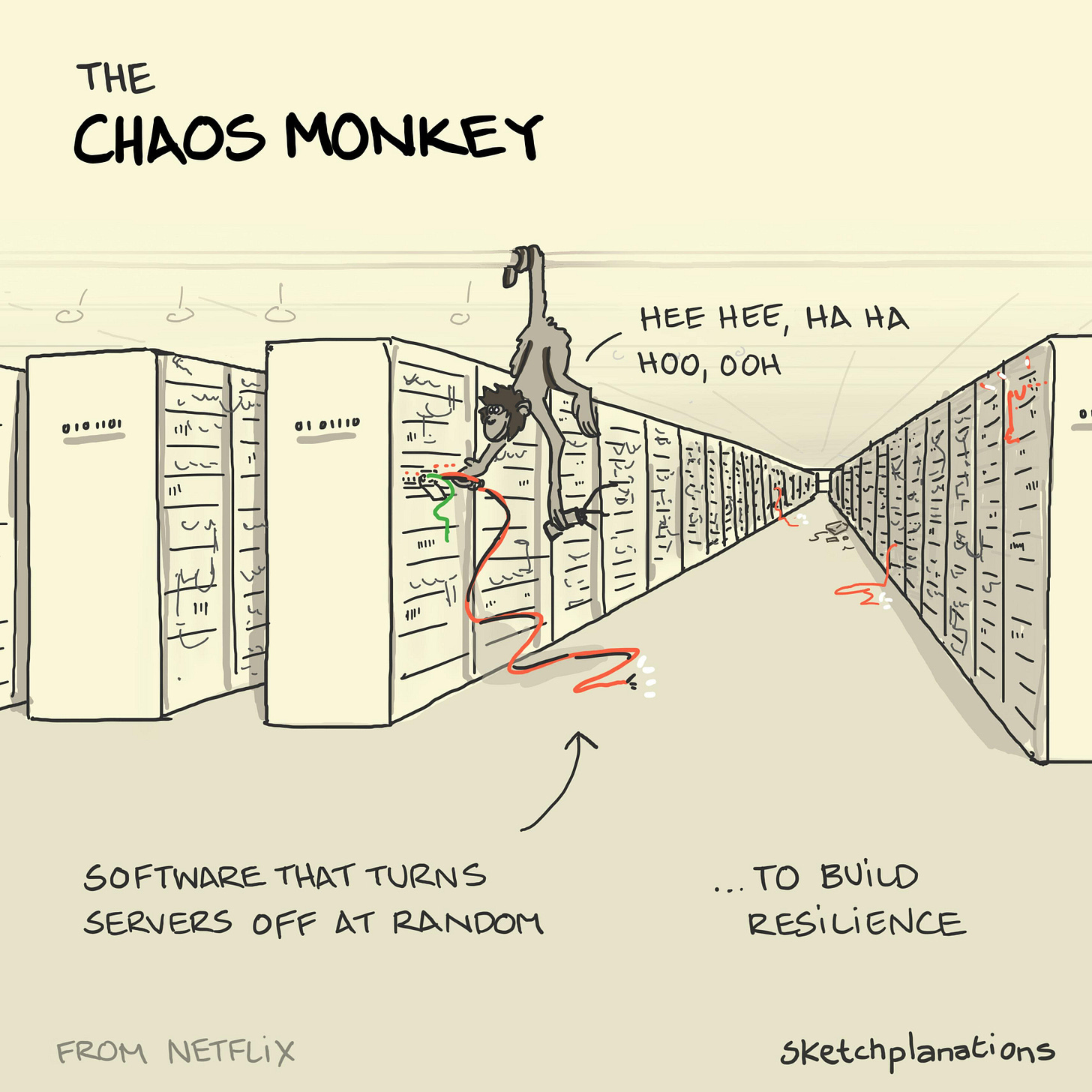

Netflix's Deliberate Chaos

This approach to failure isn't limited to life-or-death situations. Netflix offers an example with their "Chaos Monkey" project. In 2010, they created a tool that deliberately causes failures in their production systems—randomly shutting down servers during business hours.

Why would a company intentionally break its own systems? As they explained in their technical blog: "By running Chaos Monkey in the middle of a business day, in a carefully monitored environment with engineers standing by to address any problems, we can still learn the lessons about how our system responds to failures before they happen in the wild."

Chaos Monkey runs as a scheduled job that randomly terminates server instances during working hours, forcing engineers to design systems that keep functioning despite those failures. This approach changed how Netflix builds resilient systems and has since been adopted by many other tech companies.

This is fault-tolerant design taken to its logical conclusion. Netflix doesn't just work to withstand failures—they deliberately create failures to ensure their systems can handle them. Rather than hoping everything works perfectly, they assume things will break and build accordingly.
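The idea can be sketched in miniature. The Python below is a toy simulation, not Netflix's tool: `Replica`, `Service`, and `chaos_step` are hypothetical names invented for this example. It shows the two halves of the practice: a service that fails over between replicas, and a step that deliberately kills one at random so you can check the failover actually works.

```python
import random


class Replica:
    def __init__(self, name: str) -> None:
        self.name = name
        self.alive = True

    def handle(self, request: str) -> str:
        if not self.alive:
            raise ConnectionError(f"{self.name} is down")
        return f"{self.name} served {request}"


class Service:
    """Routes each request to the first replica that responds."""

    def __init__(self, replicas: list[Replica]) -> None:
        self.replicas = replicas

    def handle(self, request: str) -> str:
        for replica in self.replicas:
            try:
                return replica.handle(request)
            except ConnectionError:
                continue  # fail over to the next replica
        raise RuntimeError("all replicas are down")


def chaos_step(service: Service, rng: random.Random) -> Replica:
    """Terminate one randomly chosen live replica (assumes at least one is alive)."""
    victim = rng.choice([r for r in service.replicas if r.alive])
    victim.alive = False
    return victim
```

Calling `chaos_step` and then immediately sending a request through `Service.handle` is the whole experiment: if the request still succeeds, the redundancy works; if it raises, you have found a weakness on your own schedule rather than during an outage.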

Beyond Resilience: Becoming Antifragile

We've explored two approaches to failure: working to prevent failures and working to withstand them. But there's a third, even more powerful approach.

Think about how we ship different objects. With fragile crystal glassware, we work to prevent failures—carefully bubble-wrapping each piece and marking the box "FRAGILE." With a metal toolbox, we accept that it might get knocked around—it's fault-tolerant by design and will function despite a few dents.

But what about a system that actually gets better when things go wrong?

This is antifragility, a concept coined by Nassim Taleb in his book Antifragile. Unlike fragile systems that break under stress or robust systems that merely withstand stress, antifragile systems actually improve from disorder, volatility, and failure.

Your immune system is antifragile—exposure to germs makes it stronger. Your muscles are antifragile—they grow from the stress of exercise. The most successful AI companies aspire to build this same quality into their systems. When they succeed, the resulting feedback loop creates a competitive advantage that's difficult for others to replicate.

When OpenAI added thumbs-up and thumbs-down buttons to ChatGPT responses, it wasn't just gauging user sentiment; it was creating a system that gets stronger from its own failures. Each thumbs-down can become training data that improves future responses. OpenAI collects these signals at scale, identifies patterns in what users find helpful versus unhelpful, and uses that data to fine-tune its models. The more people use the system and provide feedback, the more refined the model becomes. The system doesn't just tolerate failure; it converts failure into improvement.
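A feedback loop like this starts with something quite simple: recording each rating alongside the response that earned it. The Python sketch below is an illustration under stated assumptions, not a description of OpenAI's pipeline. `FeedbackLog`, its methods, and the `topic` label are all hypothetical. It shows how thumbs-down events can be turned into two useful outputs: a failure rate per topic and a queue of examples for review or later training.

```python
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class FeedbackLog:
    events: list = field(default_factory=list)

    def record(self, prompt: str, response: str, thumbs_up: bool, topic: str) -> None:
        """Store one rated interaction."""
        self.events.append(
            {"prompt": prompt, "response": response, "thumbs_up": thumbs_up, "topic": topic}
        )

    def failure_rate_by_topic(self) -> dict:
        """Share of thumbs-down ratings for each topic seen so far."""
        totals, downs = Counter(), Counter()
        for event in self.events:
            totals[event["topic"]] += 1
            if not event["thumbs_up"]:
                downs[event["topic"]] += 1
        return {topic: downs[topic] / totals[topic] for topic in totals}

    def review_queue(self) -> list:
        """Thumbs-down examples, queued for human review or later training."""
        return [event for event in self.events if not event["thumbs_up"]]
```

The design choice worth noticing is that a failure is stored with its full context (prompt, response, topic) rather than as a bare counter. That context is what lets a team see where the system is weakest and decide what to fix first.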

So I return to my original heuristic: how people talk about failure predicts their success with AI. How do you talk about your AI projects? Are you working to prevent failures, working to withstand them, or have you designed systems that actually improve from them?