Digital Memento: How AI is Learning to Remember

What Christopher Nolan's 'Memento' can teach us about AI's struggle with memory and context - and how we're solving it

When I start a new conversation with ChatGPT or another LLM, I'm reminded of Leonard Shelby from Christopher Nolan's "Memento" - a man who can't form new memories. Like Leonard, our most advanced AI systems are frozen in their own perpetual present - trapped at their training cutoff date, starting each interaction fresh, unable to remember what comes after.

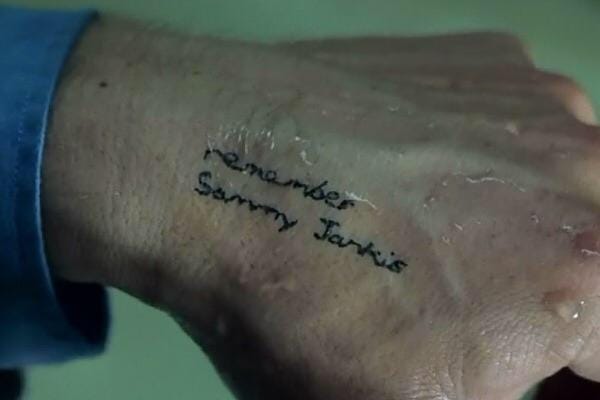

"Memento" is one of the most unforgettable films I've seen because it tells a unique and haunting story. Leonard suffers from anterograde amnesia - a condition that prevents him from forming new memories. Every few minutes, he slips back into the same confusion, his mind reset to the last moment he remembers: the murder of his wife. To function in a world that constantly slips away from him, Leonard has developed an intricate system of external memory. His body is covered in tattoos - permanent reminders of crucial facts. His pockets overflow with annotated Polaroid photos and handwritten notes. These physical artifacts become his context, his only way to piece together who he is and why he's hunting his wife's killer.

Twenty-four years after the film's release, I find myself thinking about Leonard's condition as I work with Large Language Models (LLMs). These models arrive pre-trained with vast knowledge – they can discuss quantum physics, write poetry, or debug complex code. Yet, like Leonard, they live in a perpetual present, unable to carry what they learn in one conversation into the next.

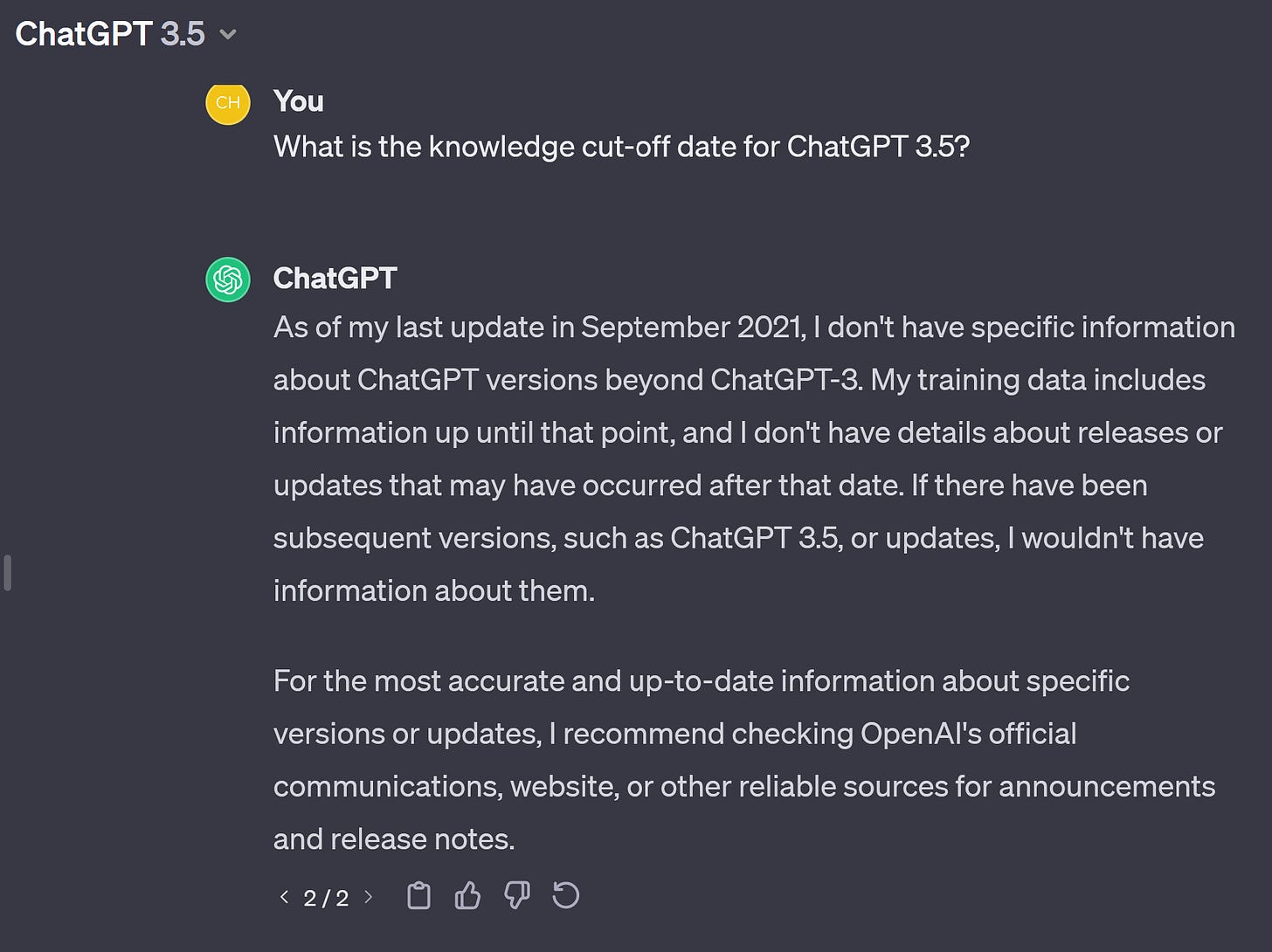

When ChatGPT first launched, this limitation was particularly striking. Even today, if you ask ChatGPT about its knowledge cutoff date, you'll get a response that feels eerily similar to Leonard explaining his condition.

The irony isn't lost on me. We're working with some of the most sophisticated artificial intelligence ever created, capable of processing and synthesizing information in ways that seem almost magical, yet they suffer from a form of digital amnesia. Every morning, metaphorically speaking, they wake up and need to be reminded of who we are and what we're trying to accomplish.

It's like working with a brilliant but forgetful intern. You spend hours explaining your project, your preferences, your way of thinking. The intern gets it perfectly – maybe even adds insights you hadn't considered. You leave the office feeling accomplished, only to return the next day to find you need to start the explanation all over again.

This challenge – how to help AI systems maintain context and "remember" their interactions – has become one of the most important pursuits in modern AI development. And just as Leonard developed his system of external memories, we're creating our own solutions to bridge this gap between artificial intelligence and artificial memory.

Simple Solutions for Simple Tasks

If Leonard only needed to perform the same tasks each day - say, working as a hotel concierge with his standard set of notes and photos - his memory system would work perfectly. Similarly, we've found that LLMs excel when handling well-defined, repetitive tasks that fit within a single context window. Their inability to form new memories doesn't matter when each task is self-contained.

Consider a customer service email processor. A traditional implementation might require complex regex patterns, multiple if-statements, and careful string manipulation. With an LLM, it becomes a matter of storing a prompt like "Transform this email into a professional response addressing the customer's concerns about [issue]." This prompt can be reused for thousands of emails, each time producing uniquely tailored responses.

The solution is elegantly simple: maintain these prompts in your code, pass in the specific details for each case, and let the LLM handle the heavy lifting. Companies are building entire products around this pattern, treating prompts as reusable templates that can be called whenever needed - like Leonard reaching for the same trusted photo in his pocket.
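Here is a minimal Python sketch of that pattern. The template wording is adapted from the example prompt above; the names `REPLY_TEMPLATE` and `build_reply_prompt` are hypothetical, and the step that actually sends the prompt to a model is left out because it depends on whichever provider's client you use.

```python
from string import Template

# A reusable prompt stored in code like any other constant.
# $issue and $email are the per-case details filled in at call time.
REPLY_TEMPLATE = Template(
    "Transform this email into a professional response addressing "
    "the customer's concerns about $issue.\n\n"
    "Email:\n$email"
)


def build_reply_prompt(email: str, issue: str) -> str:
    """Fill the shared template with the details of a single email."""
    # substitute() raises KeyError if a placeholder has no value, so an
    # incomplete prompt fails loudly instead of reaching the model.
    return REPLY_TEMPLATE.substitute(issue=issue, email=email)


prompt = build_reply_prompt(
    email="My order arrived broken and nobody has answered my calls.",
    issue="a damaged delivery",
)
print(prompt)
```

Every new email reuses the same template, so everything the model needs to know lives in your code rather than in the model's memory.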

But here's where we encounter a limitation. There's a growing trend to use these models as autonomous agents, engaging them in long-running conversations to solve complex problems. Imagine trying to architect a software system through conversation with an AI, or collaboratively writing a novel. As these interactions grow longer, the context window - our digital equivalent of Leonard's pocket space - begins to overflow. Important details from earlier in the conversation slip away, and the model's responses drift from the established context.

This is when we find ourselves, like Leonard, constantly having to remind our AI assistant of critical information from just moments ago. The brilliant conversation partner who was helping design your system architecture has forgotten key decisions from an hour ago. The collaborative writing assistant has lost track of important character developments from earlier chapters. We're bumping up against the boundaries of what a simple context window can maintain.
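To see why those early decisions vanish, consider one common client-side strategy for staying under the limit: keep the system prompt, then keep the newest messages until the budget runs out. This is a simplified sketch, not how any particular product handles overflow. It assumes one token per word, whereas real models count tokens with their own tokenizers, and the `Message` and `fit_to_window` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Message:
    role: str     # "system", "user" or "assistant"
    content: str


def approx_tokens(text: str) -> int:
    # Assumption: one token per whitespace-separated word (real tokenizers differ).
    return len(text.split())


def fit_to_window(messages: list[Message], budget: int) -> list[Message]:
    """Keep the system messages plus as many of the newest turns as fit in budget."""
    system = [m for m in messages if m.role == "system"]
    rest = [m for m in messages if m.role != "system"]
    used = sum(approx_tokens(m.content) for m in system)
    kept: list[Message] = []
    for msg in reversed(rest):  # walk from the newest turn to the oldest
        cost = approx_tokens(msg.content)
        if used + cost > budget:
            break  # this turn and everything older falls out of the window
        kept.append(msg)
        used += cost
    return system + kept[::-1]  # restore chronological order


history = [
    Message("system", "You are a software architect."),
    Message("user", "We decided to use PostgreSQL for storage."),
    Message("assistant", "Noted: PostgreSQL it is."),
    Message("user", "Now design the caching layer."),
]
for msg in fit_to_window(history, budget=15):
    print(f"{msg.role}: {msg.content}")
```

With a 15-token budget, the system prompt and the two newest turns fit, but the original decision to use PostgreSQL is dropped: the model never sees it again, no matter how important it was.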

Breaking Through the Memory Barrier

Just as Leonard eventually realized that tattoos and Polaroids weren't enough, the AI industry is discovering that simple context windows need to evolve into more sophisticated memory systems. The solutions being developed mirror Leonard's journey - from permanent tattoos to organized notes, and now to something more ambitious still.

The first breakthrough came with "permanent tattoos" for our AI assistants. Platform providers like OpenAI introduced Custom Instructions - allowing users to inscribe permanent preferences into their AI interactions. Like Leonard's tattoos reminding him of crucial facts about himself, these instructions persist across conversations, telling the AI how to behave, what tone to use, or what role to play.

ChatGPT then introduced something akin to Leonard's pocket notes - a memory feature that can remember key details about users and their preferences. Unlike the permanent nature of Custom Instructions, these memories are more flexible, can be updated or deleted, and give users control over what information persists - much like Leonard choosing which Polaroids to keep or discard.
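Conceptually, both features amount to text that gets injected into each new conversation before the user types anything. The sketch below assumes exactly that; it is not how OpenAI implements Custom Instructions or memory, and the `UserMemory` class and its methods are hypothetical. The fixed string plays the role of the tattoo, and the editable dictionary plays the role of the Polaroids.

```python
class UserMemory:
    """Hypothetical store mixing fixed instructions with editable notes."""

    def __init__(self, custom_instructions: str) -> None:
        self.custom_instructions = custom_instructions  # the "tattoo": never edited
        self.notes: dict[str, str] = {}                 # the "Polaroids": editable

    def remember(self, key: str, fact: str) -> None:
        self.notes[key] = fact      # add a new memory or overwrite an old one

    def forget(self, key: str) -> None:
        self.notes.pop(key, None)   # deleting an unknown key is a no-op

    def system_prompt(self) -> str:
        """Build the context injected at the start of every new conversation."""
        lines = [self.custom_instructions]
        if self.notes:
            lines.append("Things you know about the user:")
            lines.extend(f"- {fact}" for fact in self.notes.values())
        return "\n".join(lines)


memory = UserMemory("Answer concisely and use British spelling.")
memory.remember("job", "Works as a backend engineer.")
memory.remember("project", "Is building an email triage tool.")
memory.remember("job", "Works as an engineering manager.")  # update in place
memory.forget("project")                                     # user deletes a memory
print(memory.system_prompt())
```

The outdated job title and the deleted project never reach the model; only the instructions and the current notes are carried into the next conversation.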

But the industry is pushing even further. Companies like Letta are essentially giving Leonard a smart digital assistant to manage his memory system. Born from the popular MemGPT open-source project, their technology enables AI agents to maintain context far beyond single conversations, implementing both short-term and long-term memory storage. It's as if Leonard could now not only collect his photos and notes but have them automatically organized, cross-referenced, and surfaced at exactly the right moment.
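The core idea of a two-tier memory fits in a few dozen lines: a small short-term buffer holds the recent conversation, and anything pushed out of it is archived rather than lost, ready to be searched when it becomes relevant again. This is a toy illustration loosely in the spirit of MemGPT, not Letta's API. The `TwoTierMemory` class is hypothetical, and its keyword-overlap search is a stand-in for the embedding-based retrieval and model-driven memory management a real system would use.

```python
import re
from collections import deque


def word_set(text: str) -> set[str]:
    # Lowercase alphabetic tokens, so "called?" still matches "called".
    return set(re.findall(r"[a-z]+", text.lower()))


class TwoTierMemory:
    """Toy short-term/long-term memory (hypothetical, not Letta's API)."""

    def __init__(self, short_term_size: int) -> None:
        self.short_term: deque[str] = deque()  # recent turns, kept verbatim
        self.short_term_size = short_term_size
        self.long_term: list[str] = []          # archive of evicted turns

    def add(self, message: str) -> None:
        self.short_term.append(message)
        # When the buffer overflows, archive the oldest turn instead of dropping it.
        while len(self.short_term) > self.short_term_size:
            self.long_term.append(self.short_term.popleft())

    def recall(self, query: str, k: int = 1) -> list[str]:
        """Return up to k archived turns sharing the most words with the query."""
        query_words = word_set(query)
        scored = [(len(query_words & word_set(m)), m) for m in self.long_term]
        hits = [pair for pair in scored if pair[0] > 0]
        hits.sort(key=lambda pair: pair[0], reverse=True)  # stable for ties
        return [m for _, m in hits[:k]]

    def context(self, query: str, k: int = 1) -> list[str]:
        """Recalled long-term facts first, then the recent conversation."""
        return self.recall(query, k) + list(self.short_term)


mem = TwoTierMemory(short_term_size=2)
for turn in [
    "The protagonist is called Natalie.",
    "Chapter one is set in a motel.",
    "Chapter two introduces Teddy.",
    "Write the opening of chapter three.",
]:
    mem.add(turn)
print(mem.context("What is the protagonist called?"))
```

Even though the protagonist's name has scrolled out of the short-term buffer, a question about it pulls the fact back from the archive and places it ahead of the recent turns.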

The Future of AI Memory: More Than Just Notes and Tattoos

We're still in the early stages of teaching AI systems to remember. Like Leonard's initial system of tattoos and Polaroids, our current solutions are functional but somewhat crude. Yet the trajectory is clear - memory isn't just going to be an add-on feature for AI models, but a fundamental capability built into their architecture, as essential as web browsing or search are today.

This isn't about waiting for more powerful models like Claude 4 or GPT-5. The ability to maintain context and build understanding over time is a separate challenge from raw intelligence. Just as Leonard didn't need more intelligence to solve his wife's murder - he needed a better system for retaining and connecting information - our current models don't necessarily need more parameters. They need better memory systems.

As these memory solutions mature, we'll be able to engage AI systems in increasingly complex and lengthy projects. Imagine an AI assistant that can truly collaborate on a complicated software development project, or help write a novel while maintaining perfect consistency in plot and character development. The possibilities extend far beyond what we can achieve with our current context-limited interactions.

In the meantime, I've developed my own simple workaround that feels somewhat similar to Leonard's system of leaving notes to himself. When I'm deep in a long conversation with an AI, I periodically ask it to summarize our context with a prompt like this:

> Please turn the conversation so far into a system prompt I can use with Claude so the next session understands what I'm trying to do and how I'm approaching it. Write out who I am and who you are in detail.

This lets me check whether the most important details are still in the model's context. If they aren't, I can correct them. If they are, it never hurts to be reminded who we are and what we're working on. After all, that's what Leonard tried so hard to do...