Brown M&Ms: Why Verifying AI Works Matters as Much as Making It Work

What Van Halen can teach us about delegating complex work

Rock stars have a reputation for absurd demands. Tabloids report Mariah Carey once asked for 20 white kittens and 100 doves, while other stars allegedly specify exact dressing-room temperatures. The stories pile up, each more ridiculous than the last, reinforcing our image of celebrity excess.

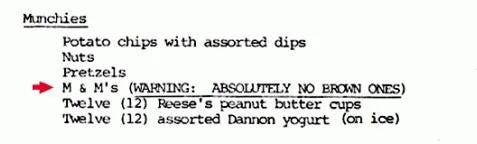

But one of the more eccentric demands comes from Van Halen’s 1982 world tour contract: “No brown M&Ms in the backstage area upon pain of forfeiture of the show, with full compensation.” What kind of aversion to brown M&Ms produces a rider like that? Who demands that someone go through and pull out the brown M&Ms?

Then in his memoir, Crazy from the Heat, lead vocalist David Lee Roth revealed what it was actually about.

Van Halen was the first band to take massive productions into tertiary markets—smaller venues that had never hosted a show this size, staffed by local crews they’d never worked with before and would likely never see again. They’d arrive with nine eighteen-wheeler trucks full of equipment, where the standard was three trucks maximum. The sheer scale created serious technical challenges that depended on dozens of local contractors and subcontractors—electricians, riggers, structural engineers—each making critical decisions Roth would never directly see.

The contract rider was massive, filled with technical specifications. Detail after detail, each one critical to keeping the crew safe and the show operational.

And then, buried in the middle of these technical specifications, Article 126: no brown M&Ms.

When Roth walked backstage, if he saw even a single brown M&M in that bowl, he knew instantly that they hadn’t read the contract, and he would call for a complete line-check of the entire production. As he later explained, “Guaranteed you’re going to arrive at a technical error. They didn’t read the contract.” Sometimes those errors were life-threatening: girders that couldn’t support the weight, flooring that would sink, structural problems that could collapse the stage.

The brown M&Ms were a simple test that told Roth whether he could rely on work done by people he’d never met. If they didn’t catch the part about brown M&Ms, what else did they miss? When you can’t check everything yourself, you need to find your brown M&Ms: one simple thing that reveals whether anyone checked anything at all.

The Verification Gap

We face the same challenge with AI systems today. We deploy agents to analyze documents, write code, draft contracts, generate reports. Then we stand backstage wondering: can we verify what they produced?

If verifying the work takes as much effort as checking every girder and cable yourself, delegation breaks down. Why delegate if checking the work takes just as much time as doing it yourself?

This might explain why AI coding agents have gained traction in software development. The verification infrastructure already exists. When AI suggests code, you run it to see if it works. You write tests to validate its behavior. You review it before merging. You revert it if problems emerge. The work is hard to do (writing correct code) but verification can be automated.
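To make that loop concrete, here is a minimal sketch. It assumes an AI agent suggested the hypothetical `median` function below; the function and the checks are illustrative and not taken from any real project. The point is that the reviewer does not have to re-derive the code, because a handful of small assertions run in milliseconds.

```python
def median(values):
    """Hypothetical AI-suggested function: median of a non-empty list of numbers."""
    if not values:
        raise ValueError("median() requires at least one value")
    ordered = sorted(values)  # sorted() returns a new list, so the input is untouched
    mid = len(ordered) // 2
    if len(ordered) % 2 == 1:
        return ordered[mid]
    return (ordered[mid - 1] + ordered[mid]) / 2


def input_is_unchanged(values):
    """Check that calling median() does not reorder the caller's list."""
    before = list(values)
    median(values)
    return values == before


def verify_median():
    """Cheap automated checks a reviewer can run instead of rereading the code."""
    return {
        "odd length": median([3, 1, 2]) == 2,
        "even length": median([4, 1, 3, 2]) == 2.5,
        "single value": median([7]) == 7,
        "input not mutated": input_is_unchanged([3, 1, 2]),
    }


if __name__ == "__main__":
    for name, passed in verify_median().items():
        print(f"{'PASS' if passed else 'FAIL'}  {name}")
```

If any check fails, the change is rejected or reverted before it reaches anyone else. The work is hard, but checking it is cheap.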

The software industry already handles delegation at scale. Consider a large project like the Windows operating system, which contains approximately 50 million lines of code and requires coordinating work from people who’ve never met, spread across time zones and continents. For Windows 8, Microsoft organized 35 feature teams, each with 25-40 developers plus test and program management: roughly a thousand people working on something no single person could comprehend in its entirety.

These developers don’t all know each other. A programmer in Redmond commits code that integrates with work from someone in Bangalore who’s collaborating with a contractor in Prague. Yet the system works because the software industry has built infrastructure for distributed trust: version control tracks every change, automated tests verify that new code doesn’t break existing functionality, code review processes catch errors before integration, continuous integration systems detect conflicts, and critically, everything can be reverted if something goes wrong.

Now contrast this with legal work or financial analysis. An AI system analyzes case law and drafts a legal argument, or processes financial documents and produces analysis. How do you verify either is correct? You’d need to check each citation, validate the reasoning, confirm the logic. The verification can take as long as doing the original work yourself. If supervising the AI takes as much effort as doing the work yourself, what’s the benefit?

The gap isn’t AI capability. The gap is verification cost. And unlike software development, these fields haven’t found their brown M&Ms yet—that simple check that reveals whether the complex work was done right.

So what would those checks look like?

Show Your Work

The answer might be simpler than we think. My elementary school math teacher had an approach that applies here. She told us repeatedly: “Show your work.”

When we’d turn in math homework, she didn’t just want the answer. She wanted to see how we got there. Because seeing “x = 7” told her nothing. Did we understand the concept? Did we follow the right process? Or did we guess and get lucky?

When the answer was wrong, “show your work” let her identify exactly where our thinking went astray. Maybe we understood the concept but made an arithmetic error. Maybe we set up the equation wrong from the start. The work itself was the diagnostic.

The Hitchhiker’s Guide to the Galaxy has a famous joke about this. A supercomputer named Deep Thought spends 7.5 million years calculating the Answer to the Ultimate Question of Life, the Universe, and Everything. Finally, it announces: “Forty-two.”

The joke is that the answer is meaningless without understanding the question. But there’s a deeper problem: even if we knew the question, “42” tells us nothing about how Deep Thought arrived at that answer. We can’t verify its reasoning. We can’t check its work. We can’t identify where it might have gone wrong. We just have to trust that after 7.5 million years of computation, it got it right.

This is the problem with integrating AI outputs today. They give us the answer without an easy way of reviewing the work.

But what does “show your work” look like when the work is complex cognitive analysis rather than simple arithmetic?

Designing for Verification

I’ve been wrestling with this question while building an AI agent that processes company financial documents and analyzes them. Automating this routine work could save human financial analysts hours.

But presenting just the final analysis recreates the Deep Thought problem. Here’s the insight you were looking for! But how did we get here? What assumptions did we make? Which documents did we pull these numbers from? The human reviewing the analysis would need to reverify everything, which defeats the purpose.

The solution was to make the system output its work the same way a human analyst would: spreadsheets with exposed formulas that professionals already know how to read. They can trace calculations backward, spot-check assumptions, modify inputs to test scenarios. But more importantly, the system includes a diagnostic layer—automated checks that surface whether source documents processed correctly, whether totals reconcile, whether there are calculation anomalies.

This shifts verification from “check everything or trust nothing” to “review diagnostics, spot-check anomalies.” The human doesn’t need to reverify every calculation. They glance at the diagnostic checks, green or red, then investigate only when something flags. Hours of verification work become minutes.
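As a rough illustration of what a diagnostic layer like this could look like, here is a minimal sketch. It is not the actual system described above: the `Check` class, the `run_diagnostics` function, the three specific checks, and the sample figures are all assumptions made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Check:
    name: str
    passed: bool
    detail: str


def run_diagnostics(line_items, reported_total, expected_docs, parsed_docs, tolerance=0.01):
    """Return green/red checks for one analysis run (hypothetical structure)."""
    checks = []

    # 1. Did every source document make it through processing?
    missing = sorted(set(expected_docs) - set(parsed_docs))
    checks.append(Check("source documents processed", not missing,
                        f"missing: {missing}" if missing else "all present"))

    # 2. Do the extracted line items reconcile to the total the document reports?
    computed = sum(line_items.values())
    checks.append(Check("totals reconcile", abs(computed - reported_total) <= tolerance,
                        f"computed {computed:.2f} vs reported {reported_total:.2f}"))

    # 3. Simple anomaly check: negative amounts where none are expected.
    negatives = sorted(k for k, v in line_items.items() if v < 0)
    checks.append(Check("no negative amounts", not negatives,
                        f"negative: {negatives}" if negatives else "none found"))

    return checks


if __name__ == "__main__":
    # Made-up sample data for illustration only.
    items = {"salaries": 1200.50, "rent": 300.25, "software": 99.25}
    docs = ["q1_expenses.pdf", "q1_payroll.pdf"]
    for check in run_diagnostics(items, 1600.00, docs, docs):
        print(f"{'GREEN' if check.passed else 'RED'}  {check.name}: {check.detail}")
```

The reviewer reads three lines instead of three hundred cells, and a red line says which document or total to open first.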

I’d found my brown M&Ms for financial analysis: those diagnostic checks. If they pass, the work is probably sound. If they don’t, I know exactly where to look.

This is one implementation of “show your work,” but the principle applies everywhere AI does complex cognitive tasks.

Which raises a larger question: why is every team building these verification systems from scratch?

The Missing Infrastructure

I spent weeks designing these Excel structures and diagnostic checks for one specific use case: financial document analysis. A legal team would need to build something completely different. A medical team would need something else entirely. Every domain is solving the same fundamental problem independently.

This felt familiar. Before Git became standard, software teams had version control tools, but collaborating with large teams was still painful. People developed custom workflows and processes to work around the limitations. Then Git arrived with both better tooling and new ways of working. But it wasn’t automatic—developers had to learn how to branch properly, how to merge effectively, how to use the infrastructure.

That’s where we are now with AI in other domains.

What’s the Git for lawyers working with documents? For financial analysts collaborating on Excel models? These tools aren’t great for team collaboration even without AI in the picture. Adding AI to the mix won’t make verification easier unless both the tooling improves and people learn to use it properly.

Software development got lucky. The verification infrastructure existed before AI arrived. Other domains are building theirs now, one custom solution at a time.

Roth didn’t need to understand electrical systems or structural engineering. He just needed to walk backstage and glance at a bowl of M&Ms. That single check gave him a hint about the quality of the work.

Every field adopting AI needs to find its brown M&Ms: the checks that reveal whether the complex work was done right. Not systems that never make mistakes, but verification so simple that catching mistakes becomes easy and routine.

The question isn’t whether AI can do the work. It’s whether we can verify it did the work correctly without doing it ourselves.

What are your brown M&Ms?