AI's WYSIWYG Moment: Charting AI's Next Interface Revolution

How the next leap in AI interfaces could mirror the word processing revolution, transforming how we interact with artificial intelligence.

Throughout the history of technology, we've seen how a breakthrough in user experience can suddenly unlock a technology's potential, leading to widespread adoption. The graphical user interface revolutionized personal computing, touchscreens transformed mobile devices, and voice commands are reshaping our interaction with smart homes. Each of these innovations marked a turning point, taking a powerful but complex technology and making it accessible to the masses.

Today, we are at a similar crossroads with artificial intelligence. The current paradigm of interacting with AI through chatbots, whether by voice or text, has certainly made waves. However, it doesn't quite feel like the watershed moment we're waiting for - the interface that will truly unleash AI's potential and integrate it seamlessly into our daily lives.

In this article, we'll explore how new interface paradigms have historically uncorked a technology's potential, focusing on the transformation of word processing from arcane computer markup to the intuitive WYSIWYG (What You See Is What You Get) interfaces we now take for granted. This journey from complexity to simplicity, from expert-only tools to universally accessible applications, offers insights into the potential future of AI interfaces.

We'll then turn our attention to the cutting edge of AI interaction, examining early efforts that hint at a future moving beyond the current "What You Say Is What You Get" model. These innovations are pointing towards a "What You See Is What It Sees" paradigm, where AI could understand and interact with our entire digital workspace, potentially changing how we leverage artificial intelligence in our daily tasks.

The Word Processing Revolution: A Blueprint for AI's Future

To understand the potential trajectory of AI interfaces, we need only look back a few decades to the evolution of word processing. This transformation offers a parallel to our current AI landscape and provides insights into how a shift in user interface can accelerate adoption.

In 1964, J.H. Saltzer at MIT introduced RUNOFF, a software program that separated the acts of writing and formatting. While innovative in concept, RUNOFF's interface was far from intuitive. Users had to intersperse their text with cryptic commands, a method that, while powerful, was accessible only to those willing to climb a steep learning curve.

Consider this example of RUNOFF markup (from Wikipedia):

.nf

When you're ready to order,

call us at our toll free number:

.sp

.ce

1-800-555-xxxx

.sp

Your order will be processed

within two working days and shipped

To the uninitiated, this looks more like a coded message than a simple advertisement. Yet, for nearly two decades, this command-based approach dominated digital document creation.
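To make the markup above concrete, here is a minimal sketch in Python of how a formatter might interpret three of those commands. It is a toy written under stated assumptions, not a reconstruction of Saltzer's program: the function name `render_runoff`, the 40-column default width, skipping blank input lines, and treating `.nf` as a no-op (this sketch never fills lines in the first place) are all illustrative choices.

```python
def render_runoff(source: str, width: int = 40) -> list[str]:
    """Render a tiny, hypothetical subset of RUNOFF-style markup.

    Supported commands (each on its own line, starting with a dot):
      .sp  emit one blank line
      .ce  center the next text line within `width` columns
      .nf  "no fill"; a no-op here because this sketch never fills lines
    """
    output: list[str] = []
    center_next = False
    for line in source.splitlines():
        stripped = line.strip()
        if not stripped:
            continue  # assumption: blank input lines carry no meaning
        if stripped.startswith("."):
            if stripped == ".sp":
                output.append("")
            elif stripped == ".ce":
                center_next = True
            # .nf and any unknown command are silently ignored
            continue
        if center_next:
            # Center the text, then drop the trailing padding
            output.append(stripped.center(width).rstrip())
            center_next = False
        else:
            output.append(stripped)
    return output


if __name__ == "__main__":
    sample = ".nf\nWhen you're ready to order,\n.sp\n.ce\n1-800-555-xxxx\n"
    print("\n".join(render_runoff(sample)))
```

Even in this toy form, the point of the comparison is visible: the writer has to picture the result of `.ce` in their head, because nothing on screen shows the centered line until the formatter has run.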

Despite its complexity, RUNOFF offered capabilities that typewriters couldn't match. It allowed for global changes to documents, automatic line length adjustments, and the creation of multiple copies without retyping. These advantages kept it in use, much like how today's AI chatbots, despite their limitations, are finding widespread adoption due to their powerful capabilities.

The word processing landscape changed dramatically in 1984 with the introduction of Apple's MacWrite. This software pioneered the consumer-scale WYSIWYG (What You See Is What You Get) interface, where the on-screen display closely matched the printed output. Suddenly, creating a document on a computer felt intuitive, almost magical. Bold text looked bold on screen, italics slanted before your eyes, and centering text was as simple as clicking a button.

This transformation didn't just change how we wrote; it changed who could write. The barriers to entry for creating professional-looking documents plummeted. Small businesses could produce marketing materials that rivaled those of larger corporations. Students could turn in papers that looked polished and professional. The democratization of document design had begun.

The parallels to our current AI landscape are striking. Today's AI interfaces, primarily chatbots, are akin to the early command-line word processors. They're powerful and useful, but they require a specific skill set to use effectively. Just as early word processor users needed to learn markup languages, today's AI users must master the art of prompt engineering to get the best results.

But what if we could make AI as intuitive and accessible as WYSIWYG made word processing? What if interacting with AI could be as natural as interacting with the documents on our screens? This is the promise of the next generation of AI interfaces, which we'll explore in the following sections.

While this promise of intuitive AI interaction is tantalizing, the reality of our current AI interfaces falls short of this vision. To appreciate the potential impact of future innovations, we must first understand the limitations of today's AI interaction paradigm, which in many ways mirrors the complexities of early word processing systems.

The Chatbot Conundrum: Today's AI Interface Paradigm

Our current AI interaction model bears a resemblance to the early days of computing, specifically the era of punch cards. Back then, programmers meticulously planned their code, carefully punched it into cards using specialized machines, and then waited their turn to feed these cards into the computer. This process was fraught with anxiety: a single misplaced hole could derail the entire program. The feedback loop was slow, and every iteration was time-consuming.

Today's AI interactions follow a similar pattern. We craft our prompts, input them into a chat interface, and hope we've provided enough context for the AI to produce a useful answer. This approach works well for generic, self-contained requests like "Write a blog on choosing a healthcare plan." However, it falters when dealing with more nuanced, context-dependent tasks.

Consider replying to an email thread. You might paste parts of the conversation into the AI, but it lacks the full context you possess. It doesn't know your relationship with the other parties, the unwritten subtext, or the broader implications of the discussion. The AI, in essence, is operating in a vacuum, missing the rich tapestry of information that informs human communication.

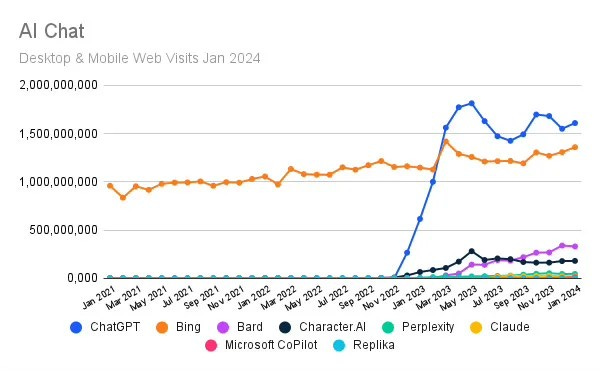

For patient and methodical users, the results can be impressive. Power users spend hours refining prompt libraries and employing various tools to pass context to the language model. However, this level of expertise is not universal, so it's not surprising that ChatGPT's usage appears to be declining.

According to an article in The Wrap, ChatGPT's usage peaked in May 2023 and hasn't recovered to those highs. This trend suggests that while AI chatbots have captured the public imagination, their current interface may be limiting their long-term adoption and utility.

Voice interfaces have emerged as an attempt to simplify interaction, reducing the need for typing. However, they still suffer from the same fundamental limitation - a lack of contextual awareness. Whether through text or voice, we're still constrained by what we explicitly tell the AI, rather than what it can understand from our broader digital environment.

This limitation brings us to a crucial question: How can we evolve AI interfaces to be more intuitive, context-aware, and seamlessly integrated into our digital lives? In the following sections, we'll explore emerging paradigms that hint at a future where AI understands what we see and do in our digital workspaces.

The Dawn of Context-Aware AI: From Screen Recording to Intelligent Assistance

In November 2022, just before the LLM boom took the world by storm, a company called Rewind launched with a $10 million investment from Andreessen Horowitz (a16z). Its approach was ahead of its time: create software that continuously records and catalogs everything on a user's screen, including automatic transcription of video meetings. Rewind's founder envisioned giving users a near-perfect memory of their digital lives.

Initially, this technology, while unique, had limitations. Users had to manually scrub through recordings to find specific information or rely on basic search functionality. However, the landscape shifted with the rise of large language models (LLMs) like the ones behind ChatGPT.

The advent of powerful LLMs transformed Rewind's concept from a sophisticated recording tool into an intelligent, context-aware assistant. Suddenly, users could interact with their digital history using natural language queries. Instead of manually searching for a document discussed in last week's meeting, one could simply ask, "What was that report we talked about in Tuesday's team call?" The AI, having 'seen' everything on the screen, could provide not just the document, but also relevant context from the meeting.

This synergy between screen recording and LLMs represents a new direction in human-computer interaction. Before LLMs, the value of recorded data was limited by the user's ability to search and recall. You had to remember when you might have seen something before you could get back to it. Now, the AI can sift through vast amounts of recorded information, understanding context to provide relevant answers and insights.
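The retrieval idea described above can be sketched roughly in Python. This is an illustration under loud assumptions, not how Rewind or any other product works: it assumes the on-screen text has already been captured into timestamped snapshots, uses naive keyword overlap as a stand-in for real retrieval, and never calls an actual language model. The names `Snapshot`, `search_history`, and `build_prompt` are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Snapshot:
    """One hypothetical capture of on-screen text."""
    captured_at: datetime
    app: str
    text: str


def _words(text: str) -> set[str]:
    # Lowercase each token and strip common punctuation from its edges
    return {w.strip(".,:;?!\"'").lower() for w in text.split()} - {""}


def search_history(history: list[Snapshot], question: str, top_k: int = 2) -> list[Snapshot]:
    """Return up to top_k snapshots sharing the most words with the question.

    Naive keyword overlap (common words like "the" count too); a real
    system would presumably use something far more capable.
    """
    query = _words(question)
    scored = [(len(query & _words(s.text)), s) for s in history]
    hits = [(score, s) for score, s in scored if score > 0]
    # Highest overlap first; ties go to the more recent snapshot
    hits.sort(key=lambda pair: (pair[0], pair[1].captured_at), reverse=True)
    return [s for _, s in hits[:top_k]]


def build_prompt(history: list[Snapshot], question: str) -> str:
    """Assemble the text a language model would receive: context, then question."""
    context = "\n".join(
        f"[{s.captured_at:%Y-%m-%d %H:%M}] {s.app}: {s.text}"
        for s in search_history(history, question)
    )
    return f"Screen history:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    history = [
        Snapshot(datetime(2024, 1, 2, 10, 0), "Video call", "Team call: reviewing the Q3 budget report"),
        Snapshot(datetime(2024, 1, 3, 9, 0), "Browser", "Recipe for lemon pasta"),
    ]
    print(build_prompt(history, "What was that report we talked about in the team call?"))
```

The design point is that the user only supplies the question. The context is pulled from what was already on screen, rather than pasted in by hand.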

The potential of this approach quickly caught the attention of tech giants. Microsoft is incorporating a similar concept into Windows 11 with a feature called "Recall". According to The Verge, Recall is designed to be an AI-powered assistant for Windows 11. It will allow users to search through their documents, browsing history, and even conversations using natural language queries. For instance, you could ask, "What was that recipe I looked at last week?" and Recall would find it for you. The feature is expected to use local processing for privacy reasons and will be deeply integrated into the Windows 11 experience.

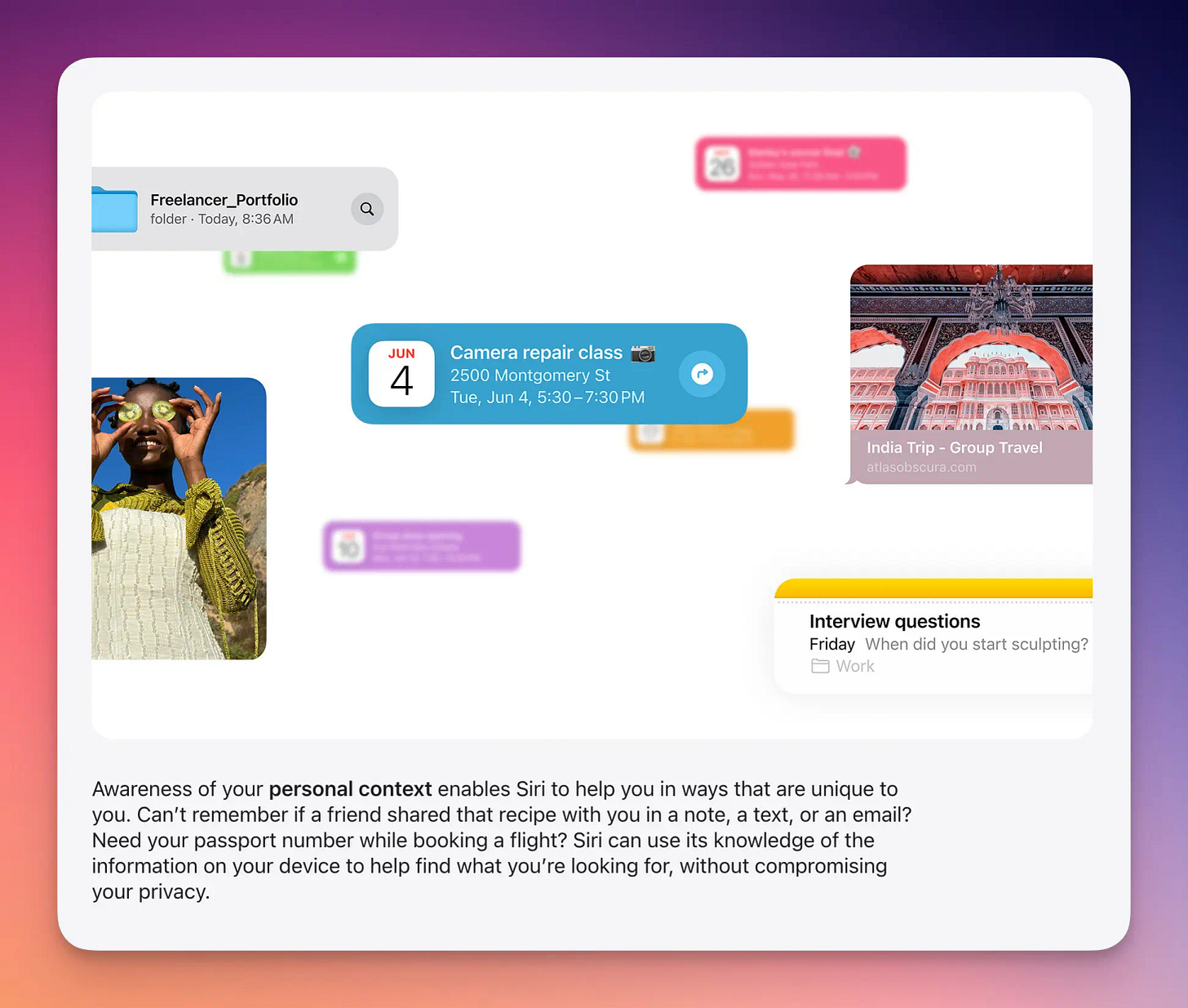

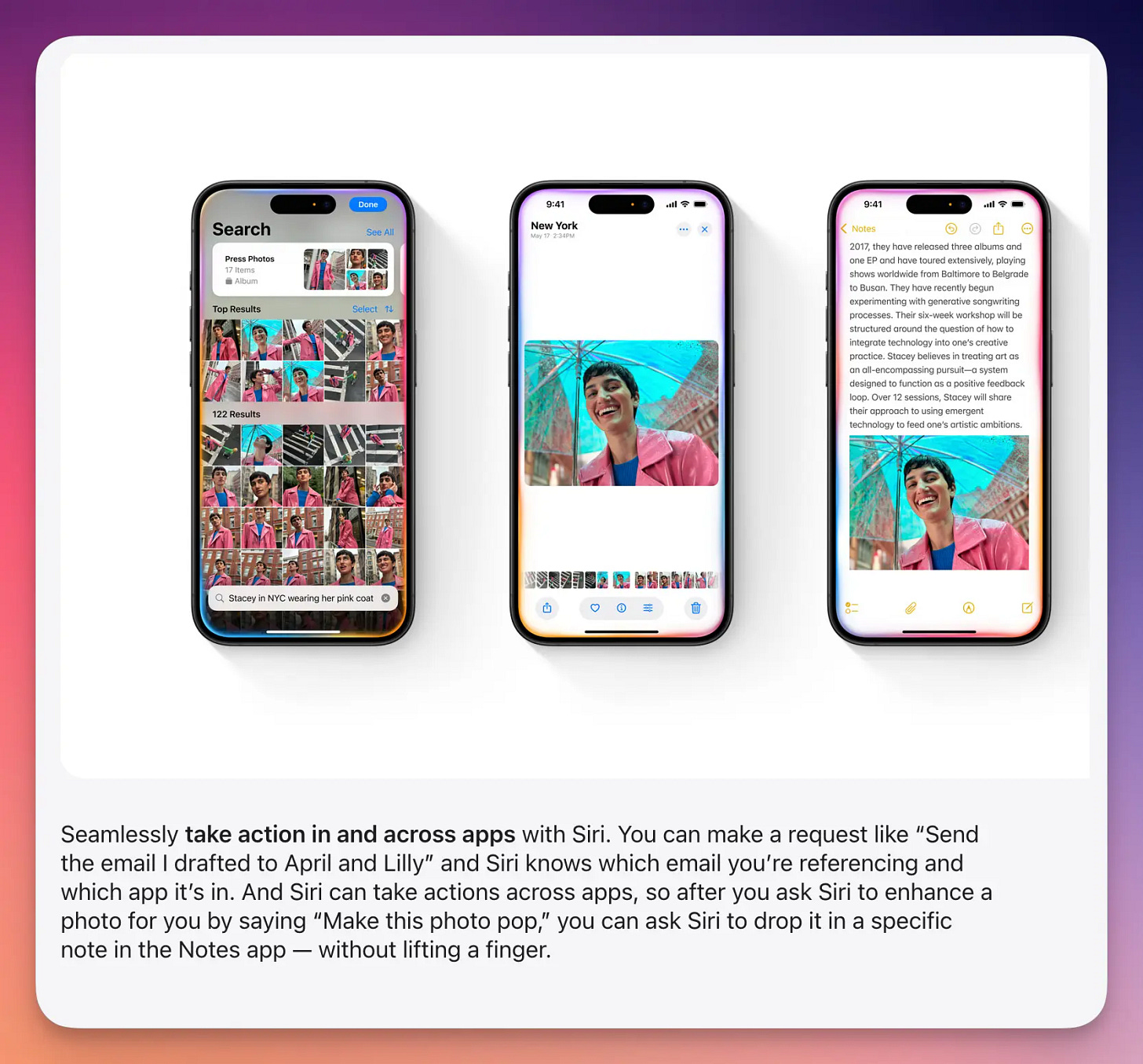

Apple, too, is venturing into this space with onscreen awareness for Siri, aiming to make Siri cognizant of what's on your screen and able to use that context to take action. These moves by major players underscore the potential impact of context-aware AI assistants.

As we move towards this new paradigm of AI interaction, we're shifting from a "What You Say Is What You Get" model to a "What You See Is What It Sees" approach. This evolution promises to make AI assistance more intuitive, context-aware, and seamlessly integrated into our lives.

But why stop at the digital realm? The logical progression of this technology extends into the physical world. Companies like Humane and even Rewind itself are developing wearable devices that aim to bring this contextual awareness into our everyday environments. Imagine an AI assistant that not only knows your digital history but also understands your physical surroundings and interactions.

This shift represents as significant a leap in human-computer interaction as the move from command-line interfaces to graphical user interfaces. We're moving towards a future where our AI assistants aren't just reactive tools, but proactive partners that understand our full context - both digital and physical.

However, this powerful technology also brings new challenges. Privacy concerns, data security, and the ethical implications of AI systems with access to so much personal information are critical issues that need to be addressed.

The journey from Rewind's initial concept to the current landscape of context-aware AI illustrates how rapidly the field is evolving. It also underscores a crucial point: sometimes, the most transformative technologies emerge not from a single breakthrough, but from the synergy of multiple innovations. In this case, the marriage of comprehensive data capture with advanced language models has opened up new possibilities that were hard to imagine just a few years ago.

The WYSIWYG Moment for AI

As we stand on the cusp of this new era in AI interfaces, it's worth reflecting on the parallels with the word processing revolution. In the days of RUNOFF, power users were undoubtedly impressed by the capabilities these systems offered, much as we marvel at today's AI chatbots. However, the true democratization of word processing only occurred when WYSIWYG interfaces made the technology accessible to the masses. Similarly, while current AI interfaces have captured our imagination, they represent only the beginning. The leap from "What You Say Is What You Get" to "What You See Is What It Sees" may be as transformative as the shift from markup to WYSIWYG editing. While it's too early to predict exactly what form these new interfaces will take, it's clear that the future of AI interaction will extend far beyond mere typing.