AI's Aha Moment

When a Machine Learned to Doubt

In the midst of solving a complex mathematical equation, an AI model did something interesting - it stopped itself mid-calculation and said: "Wait, wait. Wait. That's an aha moment." Then, recognizing its approach wasn't working, it began to reevaluate its steps, methodically working through the problem until it found the right answer.

This wasn't just another AI solving a math problem. It was a moment that revealed something about how machines might learn to think. The model hadn't been programmed to doubt itself or show its work. Instead, through a simple reward system that encouraged thinking, it had discovered these behaviors on its own - behaviors that could change how we develop AI.

The model was DeepSeek-R1-Zero (a precursor to the better-known DeepSeek-R1), and the approach behind it has captured attention not just for matching the performance of much larger models at a fraction of the cost, but for how it achieved these results: by focusing on the process of thinking itself.

The Power of Play

This story starts with something we all understand: learning through play. Watch a child learning to walk: they try, fall, adjust, and try again. A basketball player practicing free throws: each shot provides feedback, each miss leads to subtle adjustments. Through thousands of repetitions, complex skills emerge naturally from this cycle of attempt and feedback.

DeepMind tapped into this same pattern when developing AlphaGo. Instead of relying only on hand-programmed human strategies, they had it study records of human games and then play millions of games against itself. Each game was an experiment, each move a variation, each win or loss a piece of feedback. This led to moments that stunned the Go community, like Move 37 in its match against Lee Sedol - a play that seemed wrong to human experts but proved brilliant. The machine hadn't been taught this move; it discovered it through play.

How AI Models Learn

Most AI language models, however, follow a different path. They start with what's called a base transformer model - an AI system that learns to understand language by predicting what word comes next in a sequence, much like how we might guess the next word in an unfinished sentence. Through unsupervised training on vast amounts of text, these models develop a basic understanding of language patterns. Then comes supervised learning, where the model is shown examples of good responses and learns to mimic them. Finally, many models undergo reinforcement learning from human feedback (RLHF), where human raters help refine the model's outputs.
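To make "predicting what word comes next" concrete, here is a toy sketch. It is not a transformer: it simply counts which word follows which in a tiny made-up corpus and predicts the most frequent follower. The function names and the corpus are invented for illustration, but the objective is the same one a base model is trained on at vastly larger scale.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigram(text: str) -> dict:
    """Count, for every word, which words follow it in the text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts: dict, word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical toy corpus, chosen only for illustration.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)
print(predict_next(model, "the"))  # prints "cat"
```

A real language model replaces the count table with a neural network and conditions on the whole preceding context rather than a single word, but the training signal is still "guess what comes next".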

This approach has produced impressive results, but it has a limitation: models tend to focus on generating answers rather than thinking through problems. They might give you the right response, but they often skip the reasoning process that humans use to solve complex problems.

OpenAI's o1 model was one of the first to demonstrate sophisticated reasoning capabilities, but OpenAI kept its methods secret. The company even restricted access to the model's thinking steps, treating its approach to machine reasoning as proprietary technology.

Then DeepSeek published their paper.

DeepSeek's Innovation

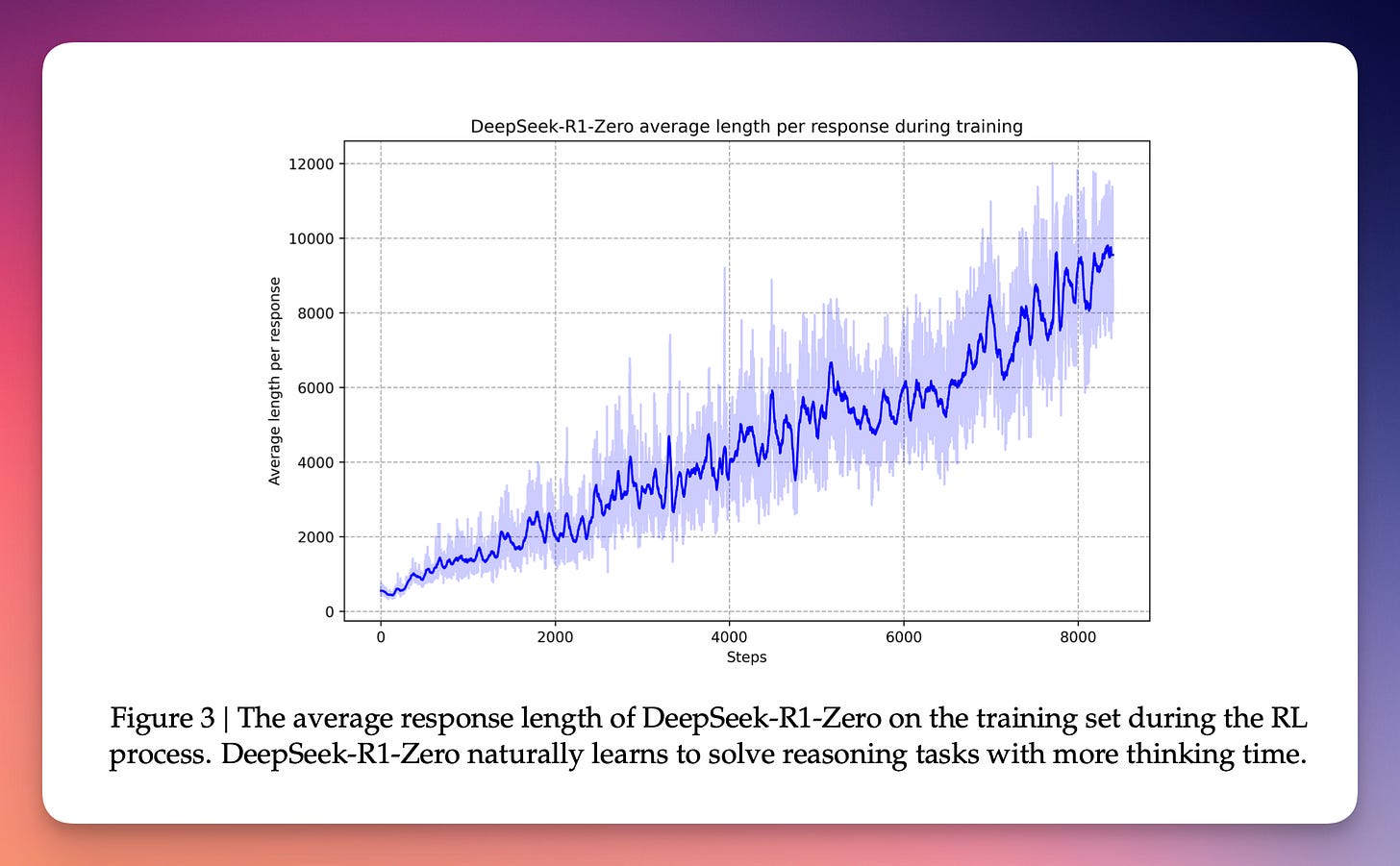

DeepSeek took a different approach. Instead of following the standard training path, they started with their base model and went straight to reinforcement learning, skipping the supervised stage. Their innovation was strikingly simple: they created a reward system with just two components - one for the accuracy of the final answer, and one for laying out the reasoning process before giving that answer.
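A two-part reward of this kind can be sketched in a few lines. This is a minimal illustration rather than DeepSeek's actual code: the `<think>` and `<answer>` tag layout, the exact-match check, and the equal weighting of the two components are all assumptions made for the example.

```python
import re

# Assumed layout: reasoning inside <think>, final answer inside <answer>.
RESPONSE_PATTERN = re.compile(
    r"^\s*<think>(?P<reasoning>.+?)</think>\s*<answer>(?P<answer>.+?)</answer>\s*$",
    re.DOTALL,
)


def format_reward(response: str) -> float:
    """1.0 if the response shows its reasoning in the expected layout, else 0.0."""
    return 1.0 if RESPONSE_PATTERN.match(response) else 0.0


def accuracy_reward(response: str, expected: str) -> float:
    """1.0 if the extracted final answer equals the reference answer, else 0.0."""
    match = RESPONSE_PATTERN.match(response)
    if match is None:
        return 0.0
    return 1.0 if match.group("answer").strip() == expected.strip() else 0.0


def total_reward(response: str, expected: str) -> float:
    """Sum of both components; equal weighting is an assumption."""
    return accuracy_reward(response, expected) + format_reward(response)


good = "<think>2 + 2 is 4</think><answer>4</answer>"
print(total_reward(good, "4"))  # prints 2.0
```

Nothing in this reward tells the model how to reason. It only checks that reasoning is present and that the final answer is right, which leaves the model free to discover its own strategies.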

The results were remarkable. The R1 model that grew out of this work scored 79.8% on the AIME 2024 mathematics benchmark, drawn from a notoriously difficult competition designed to challenge the brightest high school mathematicians in America. What made this even more impressive was how little of the model is used at any one time. While the largest modern AI systems are reported to have trillions of parameters (the individual settings that determine how the model processes information), DeepSeek's model activates just 37 billion parameters out of a total of 671 billion for any given piece of text, so only a small fraction of the network does work at each step. This efficiency meant they could match the performance of much larger, more expensive models at a fraction of the cost.
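The parameter counts quoted above imply a simple ratio, worked out here as a quick check. The only inputs are the two figures from the text.

```python
ACTIVE_PARAMS = 37e9   # parameters active at once, from the figures above
TOTAL_PARAMS = 671e9   # total parameters, from the figures above

active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
print(f"{active_fraction:.1%} of parameters active")  # prints "5.5% of parameters active"
```

In other words, roughly one parameter in eighteen is doing work at any given step.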

But perhaps most importantly, they shared their work openly. While other companies treated their breakthroughs as trade secrets, DeepSeek published a detailed account of their methodology and released the model itself, believing that openness would accelerate progress in AI development.

The Power of Feedback Loops

What makes this approach so effective? We can see a similar pattern at work in one of science's longest-running experiments. Since 1988, Richard Lenski and his team have been watching evolution happen in real time. They started with twelve identical populations of E. coli bacteria, each living in the same controlled environment. Over tens of thousands of generations, they have watched random mutations and environmental feedback produce remarkable adaptations. Most dramatically, one population evolved the ability to consume citrate - a completely new trait that emerged through this cycle of variation and feedback.

This pattern of variation and feedback appears everywhere. Languages evolve through the same mechanism - new expressions emerge naturally, and those that resonate survive and spread. Markets follow this pattern too, with companies introducing new products and services, and customer behavior providing the feedback that determines what survives.

But what makes modern AI systems so powerful is their ability to compress these evolutionary timescales. While Lenski's bacteria needed decades to evolve new abilities, AI systems can run through millions of variations and feedback cycles in hours.
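The cycle of variation and feedback is easy to simulate. The sketch below is a deliberately simple stand-in, not a model of bacteria or of DeepSeek's training: a single number is randomly nudged each generation, and a change is kept only if a made-up scoring function says it is an improvement. The target value, mutation size, and function names are all arbitrary choices for illustration.

```python
import random


def score(x: float) -> float:
    """Feedback signal: higher is better, peaking at x = 3 (arbitrary toy target)."""
    return -(x - 3.0) ** 2


def evolve(start: float, generations: int, seed: int = 0) -> float:
    """Repeatedly mutate a candidate and keep only the improvements."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    best = start
    for _ in range(generations):
        candidate = best + rng.gauss(0.0, 0.5)  # variation: a small random change
        if score(candidate) > score(best):      # feedback: keep it only if better
            best = candidate
    return best


result = evolve(start=-10.0, generations=1000)
print(f"started at -10.0, ended at {result:.3f}")
```

A thousand generations here take a fraction of a second, which is the point: the loop is the same, but the clock runs far faster than it does in a flask of bacteria.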

We're likely standing at the beginning of a new wave in AI development. As research labs worldwide adopt and refine these techniques, we'll see models that don't just answer questions, but think through problems. DeepSeek's innovation isn't just about making smarter AI - it's about understanding how intelligence itself emerges from the simple cycle of try, learn, and adapt.