AI Regulation: A Patchwork Quilt Around the World

This article surveys the global approaches to regulating AI technology, from the EU's risk-based rules to China's ideological controls to the US's fragmented state and federal efforts.

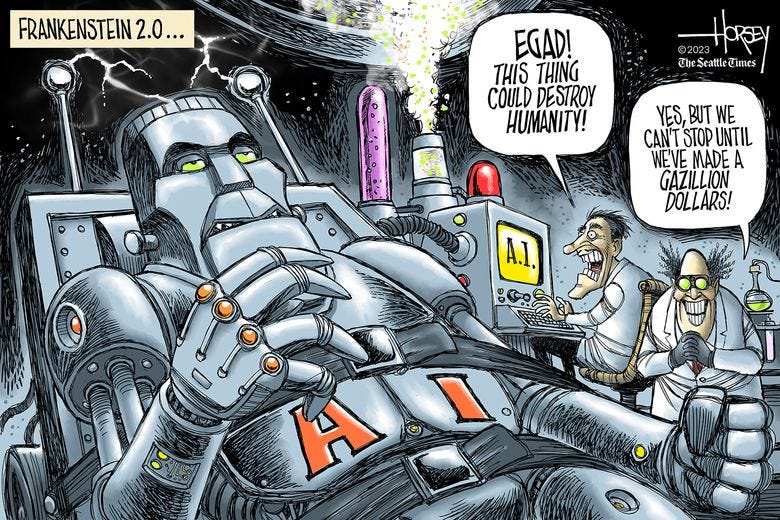

“AI is too important not to regulate, and too important not to regulate well.”

Sundar Pichai, CEO of Google

“I think if this technology goes wrong, it can go quite wrong. And we want to be vocal about that. We want to work with the government to prevent that from happening.”

Sam Altman, CEO of OpenAI

In March 2023, ChatGPT was abruptly blocked in Italy over data privacy concerns, setting off a new game of cat and mouse between regulators and the tech companies they regulate.

When the Garante, Italy's privacy watchdog, scrutinized ChatGPT, it didn't like what it saw. Or, more precisely, what it didn't see: clarity around how OpenAI would use the prompts entered into its chatbot. In a provisional order, the Garante banned the bot, arguing users should be able to opt out of having their data used to train AI systems. The ban was short-lived, lifted once OpenAI tweaked ChatGPT to include options around data use. But the crackdown highlighted the Sisyphean challenge of regulation trying to keep pace with galloping technological change.

In this article, we'll survey the current state of AI-related regulations.

European Union

The EU is no stranger to defining broad regulations to rein in technology. In the case of AI, its AI Act sorts systems into four risk categories that determine which rules apply. The final draft revealed in January 2024 uses the following levels of risk.

Minimal/no risk AI: No new obligations beyond potential voluntary industry codes of conduct. This includes AI with minimal potential harm.

Transparency obligations: Systems such as those that generate or manipulate media must disclose that fact so people can spot deepfakes.

High-risk AI: Requires mandatory assessments before release for AI used in areas such as self-driving cars or critical infrastructure. Providers must register these systems in an EU database.

Unacceptable risk: Systems enabling activities like social scoring or real-time biometric tracking are prohibited with few exceptions.

Fines scale with the severity of the violation, reaching up to 7% of global annual revenue for prohibited practices. To ease the transition, the EU's AI Pact encourages voluntary early adoption of the principles by companies.
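The four tiers listed above amount to a lookup from risk level to obligations. As a rough illustration only, the Python sketch below encodes that mapping. The names `RiskTier`, `OBLIGATIONS`, and `summarize` are hypothetical, and the obligation strings paraphrase this article rather than the legal text.

```python
from enum import Enum


class RiskTier(Enum):
    """Hypothetical labels for the four risk levels described above."""
    MINIMAL = "minimal"
    TRANSPARENCY = "transparency"
    HIGH = "high"
    UNACCEPTABLE = "unacceptable"


# Obligations paraphrased from this article (an assumption, not legal text).
OBLIGATIONS = {
    RiskTier.MINIMAL: ["voluntary codes of conduct"],
    RiskTier.TRANSPARENCY: ["disclose generated or manipulated media"],
    RiskTier.HIGH: ["pre-release assessment", "register in EU database"],
    RiskTier.UNACCEPTABLE: [],  # prohibited, so no compliance path to list
}


def summarize(tier: RiskTier) -> str:
    """Return a one-line summary of what a tier means for a provider."""
    if tier is RiskTier.UNACCEPTABLE:
        return "prohibited (few exceptions)"
    return "allowed; obligations: " + ", ".join(OBLIGATIONS[tier])


if __name__ == "__main__":
    for tier in RiskTier:
        print(f"{tier.value}: {summarize(tier)}")
```

In practice the hard part is deciding which tier a given system falls into, which is a legal judgment that a lookup table like this does not capture.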

United States

The United States is, as usual, taking a fragmented approach to AI governance, with a patchwork of state and federal actions rather than a unified national strategy. Several states and cities are enacting laws to regulate aspects of AI. New York City, for example, now requires companies using AI in hiring to disclose their use, have their systems independently audited, and check annually for algorithmic bias. California mandates that bots be disclosed to users interacting with them. Colorado became the first state to regulate how AI can be used in insurance pricing.

In 2023 alone, over 25 states introduced bills related to AI, with some, like Connecticut and Texas, establishing organizations to monitor AI development. The piecemeal efforts will continue the tradition of offering a confusing array of rules for companies to follow.

The White House also issued a Blueprint for an AI Bill of Rights, which asks for:

Safe and effective systems: AI should be safe, secure and perform as intended. Harmful outcomes should be minimized.

Algorithmic discrimination protections: AI systems should not result in unlawful, unfair, misleading or discriminatory treatment.

Data privacy: People should control personal data collection, use and retention. Consent must be clear.

Notice and explanation: People should be notified of AI use and how outcomes are reached.

Human alternatives: People should be able to opt out of AI when appropriate and choose a person instead.

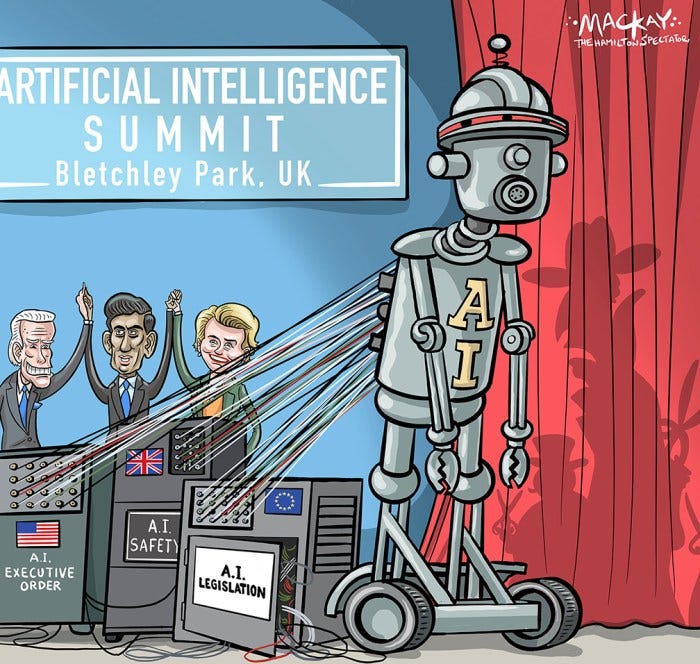

The blueprint is only a suggestion at this point, about as binding as a "Have a Nice Day" sign at a fast food restaurant, and without action by Congress it's unlikely to go anywhere. In the meantime, the Biden administration issued an executive order to set policy direction for federal agencies and encourage AI companies to collaborate on safe AI development.

China

China has modeled some of its AI governance after regulations enacted abroad. The country's privacy law, the Personal Information Protection Law, borrows from the EU's GDPR. China's internet regulator, the Cyberspace Administration of China (CAC), has established rules for AI that require registration and allow user opt-outs, similar to other nations.

The CAC has enacted a series of AI regulations in quick succession: rules for recommendation algorithms in 2022, restrictions on synthesized content later that year, and proposals for governing generative AI in early 2023 following ChatGPT's release.

But this being China, there is a twist. The algorithms need to be reviewed in advance by the state and “should adhere to the core socialist values.”

Wrangling the AI Wild West

And so it goes with governing new technology. As AI zooms ahead, countries are falling into familiar regulatory patterns. The EU obsesses over risk levels to shape nuanced rules. China swiftly imposes state control and ideological obedience. The US sticks to its fragmented patchwork "approach." The formulas vary, but the urgency feels greater this time.

Maybe it's the dizzying pace of progress. Or an awareness that AI could spin out of control absent oversight. Whatever the case, governments are scrambling to rein in this bucking bronco. But finding the sweet spot between unfettered invention and protection from harm remains an elusive aim. For now, countries are testing an array of regulatory lassos, hoping one successfully wrangles this new beast.