A Glimpse into the Future: Reflecting on OpenAI's 2023 Demo Day

OpenAI's 2023 Demo Day showcased impressive technological strides, but the company's path to long-term dominance faces steep obstacles as rival AI systems rapidly advance.

It was not an Apple event, but at times, it certainly felt like one. The sleek presentation, coupled with carefully orchestrated reveals of new “products,” conjured memories of Steve Jobs theatrically unveiling the latest gadget on stage. But this was OpenAI’s 2023 Demo Day, providing a glimpse into the future through the lens of AI.

The event last week offered something for both developers and consumers. New toolkits and API access for programmers hinted at deeper integration of AI into software applications, while whiz-bang conversational demos provided a taste of interfaces to come. In this article, I’ll share my observations and analysis from OpenAI’s Demo Day, reflecting on what the company's progress foretells for the state of AI and its evolution in the years ahead.

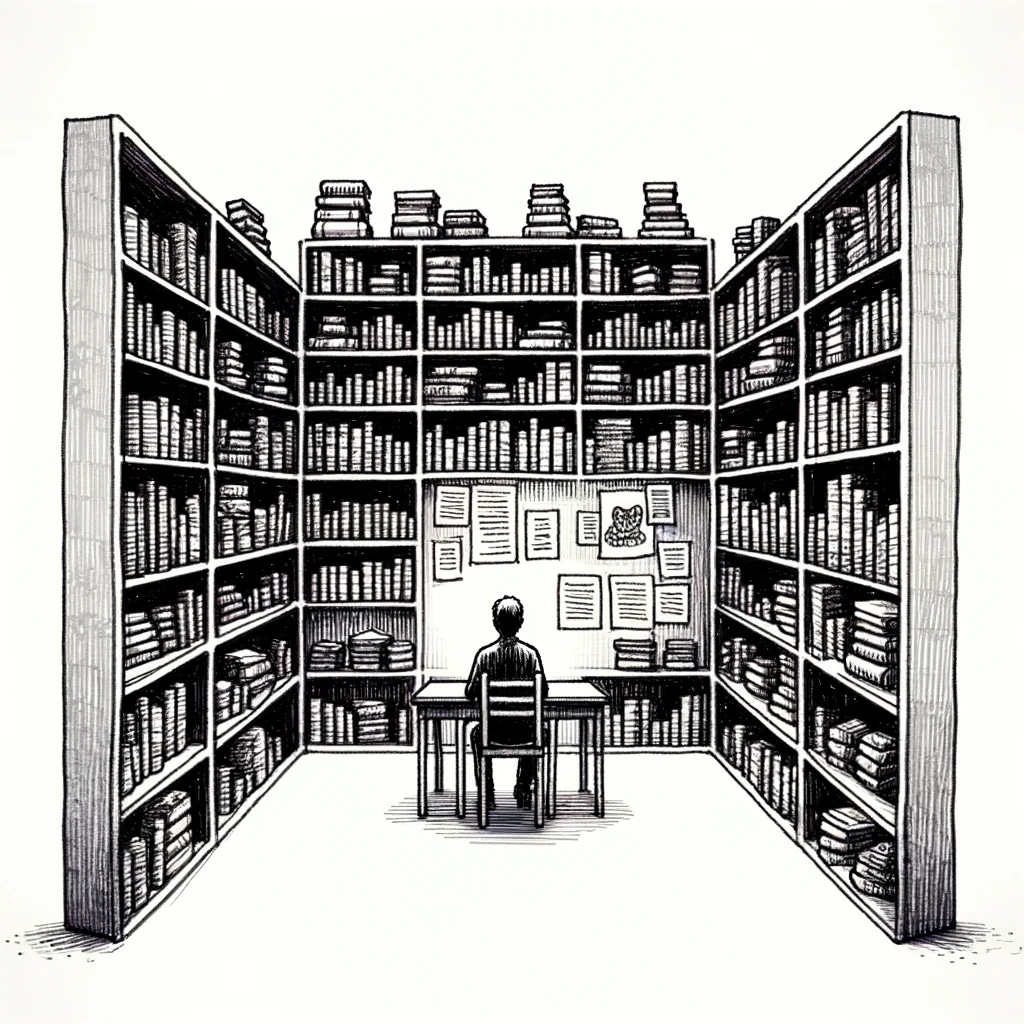

Beyond the 'Closed Room': Keeping Language Models Current

Last year, it was common to compare an LLM to someone locked in a room since September 2021, armed only with a finite set of books and information. When asked a question requiring external knowledge, they would sometimes cobble together an answer by hallucinating from their limited data. Last week, OpenAI announced that it has moved its LLM's knowledge cutoff to April 2023 and promised to keep the model current.

Even more impressively, new capabilities like browsing the web, generating code, and calling functions are now integrated directly into the product built around the LLM. For instance, when asked about Barcelona's weather, the model first writes a Python script to determine the current date range. That may be overkill, but it illustrates OpenAI's approach: rather than having the model guess the dates, they've trained the LLM to write its own code to get concrete answers. This simple example demonstrates a key step forward, pairing an LLM with a sandboxed code engine so that computation is delegated to a tool instead of being improvised. Queries that require external knowledge or exact calculation can now be answered from real results, with far less need for the model to speculate.
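To make the pattern concrete, here is a minimal sketch of what "calling functions" can look like on the application side. It does not use OpenAI's API; the tool name `get_date_range`, its `days_ahead` parameter, and the JSON shape of the model's request are all assumptions chosen for illustration. The idea is only that the model emits a structured request, and ordinary code runs it and hands back a concrete result.

```python
import json
from datetime import date, timedelta
from typing import Optional


def get_date_range(days_ahead: int, today: Optional[date] = None) -> dict:
    """Return ISO start/end dates for a window beginning today (hypothetical tool)."""
    start = today or date.today()
    end = start + timedelta(days=days_ahead)
    return {"start": start.isoformat(), "end": end.isoformat()}


# Registry mapping the tool names a model may request to local Python callables.
TOOLS = {"get_date_range": get_date_range}


def dispatch_tool_call(raw_call: str) -> str:
    """Parse a model-emitted tool request (JSON) and run the matching function."""
    call = json.loads(raw_call)
    name = call["name"]
    if name not in TOOLS:
        # Refuse anything outside the registry instead of guessing.
        return json.dumps({"error": f"unknown tool: {name}"})
    result = TOOLS[name](**call.get("arguments", {}))
    return json.dumps(result)


# Assumed shape of what a model might emit when asked about next week's weather.
model_output = '{"name": "get_date_range", "arguments": {"days_ahead": 7}}'
print(dispatch_tool_call(model_output))
```

The JSON string returned by `dispatch_tool_call` would be passed back to the model as context, so its final answer rests on computed dates instead of a guess. Restricting execution to a fixed registry is one simple way to keep the "safe" part of the code engine honest.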

The Rise of Multi-Modality and Product Consolidation

Last year, OpenAI took a model-specific approach to its offerings. Customers would use Whisper for speech-to-text, GPT-3 and Codex for text completion, DALL-E 2 for image generation, and separate embedding models. Each had distinct pricing and interfaces.

However, OpenAI is now consolidating these disparate models into a unified product experience. The system demonstrated at Demo Day combines text, vision, speech, and image generation behind a single interface.

Owning the User Experience: OpenAI's Play for the Top of the AI Stack

As I've explored in previous articles, companies over the past year have been trying to integrate AI models as a feature within existing apps, like Poe.com wrapping bots in a common interface. However, OpenAI aims to flip that dynamic and control the user experience, making other services a feature of their platform.

With the new ability for users to build custom "GPTs", OpenAI wants to become the primary portal through which people interact with AI.

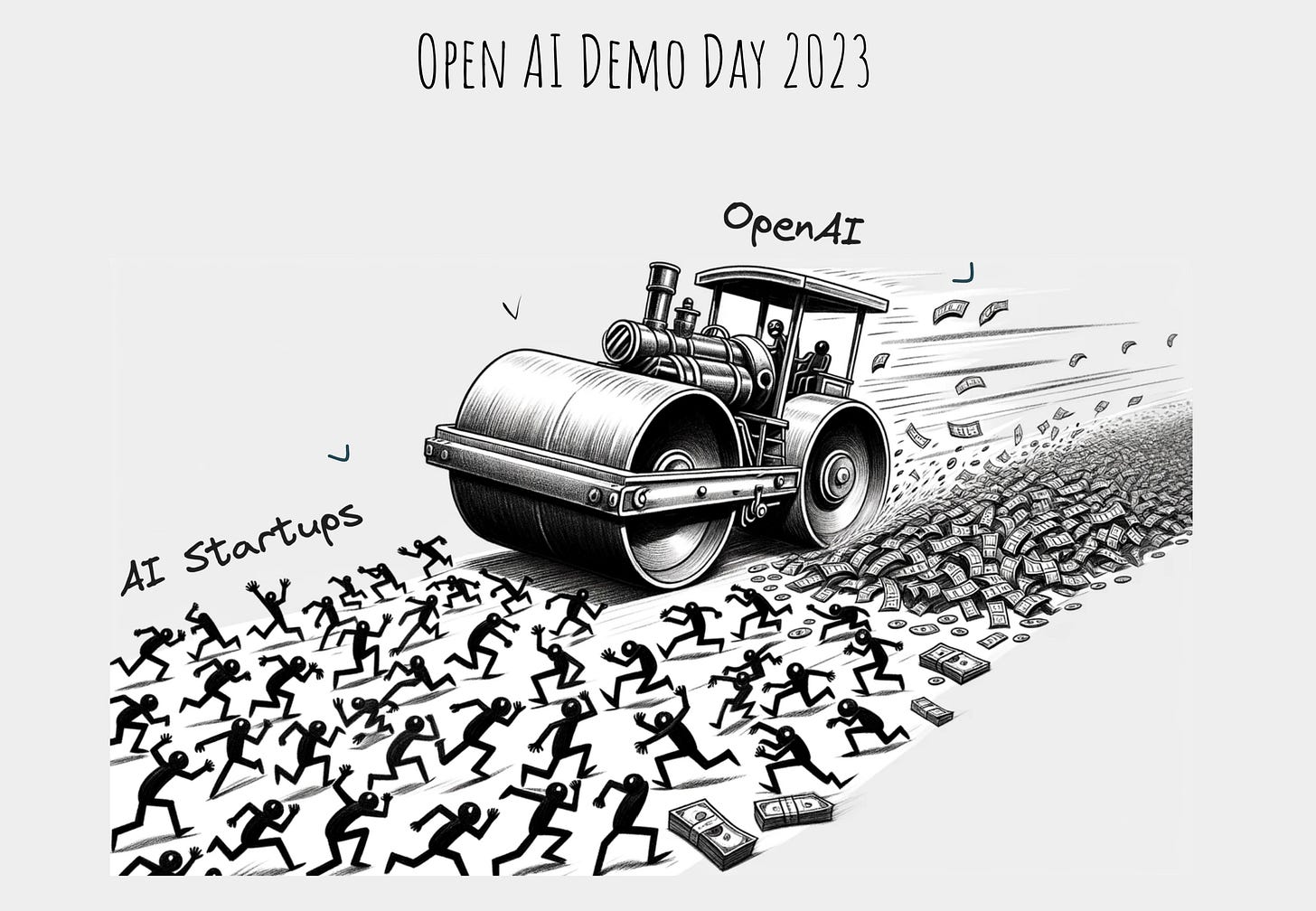

The AI Arms Race: Challenges to OpenAI's Dominance

OpenAI currently leads in large language model capabilities, but maintaining this dominance long-term will be difficult. Just as Apple overtook Tile in location tracking, OpenAI risks being overshadowed as bigger rivals replicate its capabilities.

Tile was previously the most popular Bluetooth tracker, but Apple built similar functionality into AirTags and its Find My network. With a far larger installed base of iPhones to draw on, Apple swiftly eroded Tile's first-mover advantage.

Similarly, OpenAI's rivals are catching up fast, particularly with openly available language models. Both the open source community and private companies are eroding OpenAI's head start, and it is currently hard to see how OpenAI will maintain its lead.